Hello,

I am trying to solve an H.264 encoding problem that I just can't seem to figure out and hope that maybe someone here knows an answer or where to look for one:

I want to encode my 3D animation (for web distribution), whose main characters have round corners, so they are basically cylinders flying around. The problem is that no matter how high I set the bit rate, the edges of those cylinders (e.g. against a bright sky or the halo stars) never look good. They always look like an image with no antialiasing applied: small, visible steps that are also noticeable while the movie is playing (see attachment).

So far I have always had good results with H.264 and normal video footage, and I thought the codec could encode anything at any quality given a high enough bit rate. But there seems to be a real problem with certain geometric patterns (and also sharp edges). Is this a known limitation? And what workarounds are there?

In the Blender (3D) forum someone suggested reducing the colour values, since that seems to be the solution for similar MPEG-2 encoding problems. So far this has not solved the problem for me, but I did notice one thing: when I encode in black and white, the problem is gone! So this is somehow related to colour...

Any ideas how to work around this problem in ffmpegX? I have tried different filters (including two-pass encoding), deinterlacing and very, very high bit rates, but so far none of this makes any difference.

And one other, smaller problem I have:

When I compare H.264 encoded with x264 and with QuickTime (Amateur), I find that the x264 output is noticeably darker than the QuickTime one. Someone mentioned that this was a codec-related bug that had been fixed a while ago, but it still seems to be a problem for me (using the latest ffmpegX on Leopard).

Thanks for any input on this or links that could help to find out more!

Attachment: h264 round corners problem.png


-


h.264, like most MPEG-based encoders, uses 4:2:0 subsampling (a 4:1:1 ratio of luma to chroma samples). The color planes are stored at half the resolution of the luminance plane in each dimension. This causes blurring at sharp colored edges and is why you don't see the problem with grayscale images, which have no color information to lose. I don't know if you can force 4:4:4 (the best) or 4:2:2 subsampling instead. <edit> I checked it out: according to Wikipedia, the h.264 spec does support 4:2:2 and 4:4:4 subsampling. I don't know about ffmpegX.</edit>
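To put numbers on this, here is a small Python sketch (hypothetical helpers, not part of any tool mentioned in this thread) that computes the plane sizes for a 640x480 frame under each subsampling scheme. It assumes 8-bit planar storage and even frame dimensions. The 0.25 it reports for 4:2:0 is the "4:1:1 ratio": each chroma plane keeps one sample for every four pixels.

```python
# Hypothetical helper: Y, U and V plane sizes for one 8-bit planar frame.
# Assumes even width and height so the chroma planes divide cleanly.

SUBSAMPLING = {
    "4:4:4": (1, 1),  # full-resolution chroma
    "4:2:2": (2, 1),  # chroma halved horizontally only (e.g. YUY2)
    "4:2:0": (2, 2),  # chroma halved in both directions (e.g. YV12)
}


def plane_sizes(width, height, scheme):
    """Return ((luma_w, luma_h), (chroma_w, chroma_h)) for one frame."""
    fx, fy = SUBSAMPLING[scheme]
    return (width, height), (width // fx, height // fy)


def chroma_fraction(width, height, scheme):
    """Fraction of the full-resolution chroma samples each U/V plane keeps."""
    _, (cw, ch) = plane_sizes(width, height, scheme)
    return (cw * ch) / (width * height)


if __name__ == "__main__":
    for scheme in SUBSAMPLING:
        luma, chroma = plane_sizes(640, 480, scheme)
        print(scheme, "Y:", luma, "U/V:", chroma,
              "kept:", chroma_fraction(640, 480, scheme))
```

The luma plane is the same size in every case; only the color planes shrink, which is why a grayscale encode shows no difference.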

In addition to this, I think you have the famous chroma upsampling error somewhere in your chain:

http://www.hometheaterhifi.com/volume_8_2/dvd-benchmark-special-report-chroma-bug-4-2001.html

The color difference is probably a matter of Rec. 601 vs Rec. 709 colorspaces. Or maybe there is a disagreement on whether the luma range should be converted between the 16-235 range used in video and the 0-255 range used on computers.
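A rough Python sketch of both suspects, assuming 8-bit values throughout. The luma weights differ between BT.601 and BT.709, so a decoder that assumes the other matrix reconstructs slightly different colors; and converting between 16-235 and 0-255 the wrong number of times shifts brightness. The function names are illustrative only.

```python
# Illustrative helpers for the two suspects; 8-bit values throughout.

# Luma weights (Kr, Kg, Kb) from ITU-R BT.601 and BT.709.
LUMA_WEIGHTS = {
    "601": (0.299, 0.587, 0.114),
    "709": (0.2126, 0.7152, 0.0722),
}


def luma(r, g, b, standard="601"):
    """Full-range (0-255) luma of an RGB pixel under the given matrix."""
    kr, kg, kb = LUMA_WEIGHTS[standard]
    return kr * r + kg * g + kb * b


def full_to_limited(v):
    """Squeeze a 0-255 value into the 16-235 video range."""
    return 16 + v * 219 / 255


def limited_to_full(v):
    """Expand a 16-235 value to 0-255, clipping anything outside the range."""
    return min(255.0, max(0.0, (v - 16) * 255 / 219))


if __name__ == "__main__":
    # Same pure blue pixel, different luma depending on the matrix.
    print("blue, 601:", luma(0, 0, 255, "601"))
    print("blue, 709:", luma(0, 0, 255, "709"))
    # A correct round trip returns (approximately) the original value...
    print("round trip 40:", limited_to_full(full_to_limited(40)))
    # ...but expanding data that was already 0-255 darkens the shadows.
    print("double expansion 40:", limited_to_full(40))
```

For example, `limited_to_full(40)` gives about 28. If a player expands material that is already 0-255 as though it were 16-235, everything below roughly 113 gets darker and everything above gets brighter, which looks like a darker, more contrasty picture. Skipping a needed expansion has the opposite effect (greyish blacks).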

http://en.wikipedia.org/wiki/Luma_(video)

-

Thank you for these detailed answers! I think I'm starting to understand what might be going on here, and at least now it looks like there is a solution to the problem... I'll work through the articles you linked, do more tests and post a solution/workaround once I'm happy with the result.

Thanks again!

-

Thanks...! I have done a couple of tests; maybe you can explain what the results mean? I am still trying to understand this whole topic in all its details, but what interests me most are practical things I can do, beyond understanding the theory behind it...

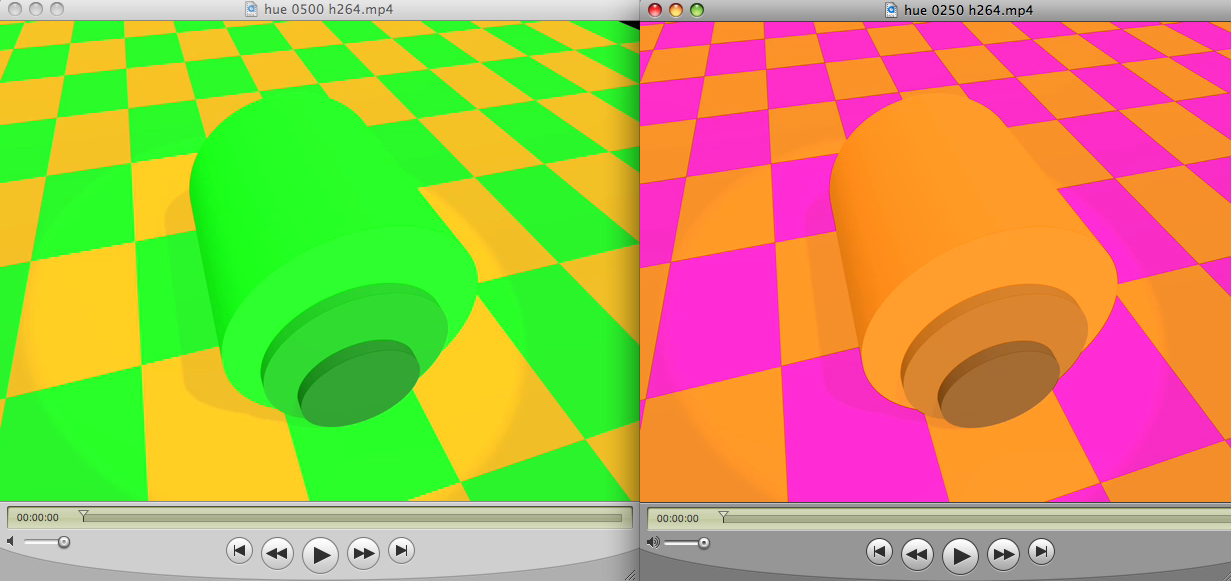

Ok, attachment "hue changes and h264.png" shows something interesting:

In Blender (open-source 3D app) I have a node system, a bit like in Shake. When I halve the hue factor (0.250 instead of 0.500), I of course get the wrong colours, but the H.264 encoding almost works as you would expect! Only "almost", because the rectangles on the floor in the wrong-coloured version (right side) are still not ideal, but they are much, much better than the ones in the original (left side).

I also tested other hue values and found that the closer I get to 0.250, the better it looks. All other changes (below 0.250 or above 0.500) also give different results, and most seem better than 0.500, but the best value for hue seems to be 0.250. The worst results come from my original colour combination, with hue set to 0.500...

What does this mean...?

The other thing I tested: in Final Cut I applied the "Colour Smoothing 4:2:2" filter and then did the H.264 encoding. But: no visible difference. I also tried the 4:1:1 filter and de-interlaced before using colour smoothing...

What else could I try...?

Here is my workflow:

The video is rendered in Blender as a series of single 640x480 RGB Targa Raw (.tga) frames (with a pixel aspect ratio of 100:100, which seems to be the right one). The frames are then combined in Blender into a .mov with the same codec, without re-compressing them, and the video is finalised in the Blender NLE (dissolves etc.). One more step is needed after that to combine audio and video, since the current OS X version of Blender can't really do that, but basically that's it. After that I encode to H.264 and get the problem described in the first post...

What I would like to get is a 640x480 H.264 file of a reasonable size (maybe 30-50 MB; the video is 4 min. long) and, of course, a more satisfying encoding of the round corners. It really looks like something is wrong with the colours and that I have to change them before encoding to H.264...
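As a rough sanity check on that size target, here is a Python sketch of the bitrate arithmetic. It assumes 1 MB = 1,000,000 bytes, ignores container overhead, and assumes 128 kbit/s of audio; all three are placeholders to adjust.

```python
# Rough bitrate budget for a target file size.
# Assumptions: 1 MB = 1,000,000 bytes, 1 kbit = 1000 bits,
# container overhead ignored, audio at a constant (assumed) bitrate.


def total_kbps(size_mb, seconds):
    """Average total bitrate (kbit/s) that fills size_mb in `seconds`."""
    return size_mb * 1_000_000 * 8 / seconds / 1000


def video_kbps(size_mb, seconds, audio_kbps=128):
    """Bitrate left for video after subtracting the assumed audio bitrate."""
    return total_kbps(size_mb, seconds) - audio_kbps


def size_mb(kbps, seconds):
    """File size in MB produced by a given average total bitrate."""
    return kbps * 1000 * seconds / 8 / 1_000_000


if __name__ == "__main__":
    for target in (30, 40, 50):
        print(target, "MB ->", round(video_kbps(target, 240)), "kbit/s video")
    print("8192 kbit/s for 4 min ->", round(size_mb(8192, 240)), "MB")
```

Under those assumptions, 30-50 MB for 4 minutes leaves roughly 870-1540 kbit/s for video, while 8192 kbit/s for the full 4 minutes would produce about 246 MB.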

-


I think all that's happening when you change the hue setting is that you're shifting to colors where the problems are less obvious. All the problems are still there.

I don't know Final Cut but I suspect the "Colour Smoothing 4:2:2" filter is interpolating RGB values to compensate for the way YUY2 is often converted to RGB (duplicating U and V values). Doing this before h.264 encoding wouldn't make much difference.

Simply converting from RGB to YV12 and back doesn't generate artifacts as badly as in your samples. Could you supply a short h.264 video for me to look at? And a sample source image?

-

Thanks for having a look at this! Here are the two attachments: a still image rendered in Blender and a 1 sec. x264-encoded sample whose first frame is the same as the still... (The still is also not quite perfect when you look closely at the round corners, and I'm still working on that, but it is nowhere near as bad as the h.264-encoded sample.)

testrender.tga

8192 kbits x264 testrender.mp4

-

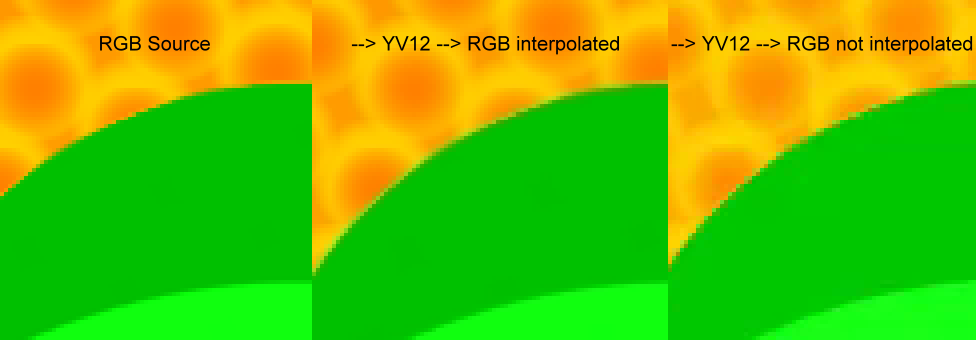

OK, you have two issues. As mentioned before, the conversion from RGB to YV12 is causing blurring of the colors. Then your conversion from YV12 back to RGB is using a point resizing algorithm. This is causing the jagged edges.

(4x point resizing for clarity)

The left image is the original RGB source. The middle image is the source converted to YV12 then converted back to RGB, interpolating the U and V values. The right image is the source converted to YV12 then converted back to RGB replicating the U and V values.

When compressing with MPEG codecs an RGB source is usually converted to the YUV colorspace and then the chroma channels are reduced to half size (both dimensions). This is called 4:2:0 subsampling (4:1:1 ratio). So a 640x480 image has a 640x480 Y (luma or gray scale) plane, and 320x240 U and V (chroma or color) planes.
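The following Python sketch shows those two steps on a tiny image, under stated assumptions: full-range BT.601 (JFIF-style) coefficients instead of the 16-235 video range a real encoder would normally use, and a plain 2x2 average for the chroma reduction (encoders may filter and position chroma samples differently).

```python
# Sketch: RGB -> Y'CbCr, then 4:2:0 chroma reduction by averaging 2x2 blocks.
# Assumption: full-range BT.601 (JFIF-style) coefficients; real video encoders
# normally use the 16-235 range and may position chroma samples differently.


def rgb_to_ycbcr(r, g, b):
    """Convert one 8-bit RGB pixel to (Y, Cb, Cr); grey pixels give Cb = Cr = 128."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def split_planes(rgb_rows):
    """Turn rows of (r, g, b) tuples into separate Y, Cb and Cr planes."""
    y_plane, cb_plane, cr_plane = [], [], []
    for row in rgb_rows:
        pixels = [rgb_to_ycbcr(*px) for px in row]
        y_plane.append([p[0] for p in pixels])
        cb_plane.append([p[1] for p in pixels])
        cr_plane.append([p[2] for p in pixels])
    return y_plane, cb_plane, cr_plane


def subsample_420(plane):
    """Average each 2x2 block, so a W x H plane becomes W/2 x H/2 (W, H even)."""
    h, w = len(plane), len(plane[0])
    return [
        [
            (plane[y][x] + plane[y][x + 1]
             + plane[y + 1][x] + plane[y + 1][x + 1]) / 4
            for x in range(0, w, 2)
        ]
        for y in range(0, h, 2)
    ]


if __name__ == "__main__":
    red, blue = (200, 0, 0), (0, 0, 200)
    # 6x2 image; the red/blue edge falls inside the middle 2x2 block.
    image = [[red] * 3 + [blue] * 3 for _ in range(2)]
    y_plane, cb_plane, _ = split_planes(image)
    print("Y plane:", len(y_plane[0]), "x", len(y_plane))
    print("Cb after 4:2:0:", subsample_420(cb_plane))
```

In this 6x2 example the luma plane keeps all 6x2 samples, but the middle Cb sample ends up halfway between the red and blue values. That averaged sample is the color blurring at the edge.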

When you convert that YV12 image back to RGB, the U and V planes have to be resized back up to the same size as the Y plane. As with any image resizing, this can be done by a simple point resizing algorithm (duplication) or by smoother, more sophisticated methods (interpolation: bilinear, bicubic, etc.).
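A one-dimensional Python sketch of the two upsampling choices, assuming each chroma sample sits midway between two luma pixels (one common convention, not the only one). The function names are hypothetical.

```python
# Sketch: bring a half-width chroma row back to full width, in 1D.
# Assumption: chroma sample k sits midway between luma pixels 2k and 2k+1.


def upsample_replicate(row):
    """Point resize x2: each chroma sample is reused for two pixels."""
    out = []
    for v in row:
        out.extend([v, v])
    return out


def upsample_linear(row):
    """Linear x2: blend each sample 3:1 with its nearer neighbour (edges clamped)."""
    n = len(row)
    out = []
    for k in range(n):
        left = row[max(k - 1, 0)]
        right = row[min(k + 1, n - 1)]
        out.append(0.75 * row[k] + 0.25 * left)   # pixel 2k
        out.append(0.75 * row[k] + 0.25 * right)  # pixel 2k + 1
    return out


if __name__ == "__main__":
    chroma = [0, 0, 100, 100]  # a sharp colour edge in the subsampled plane
    print("duplicated:  ", upsample_replicate(chroma))
    print("interpolated:", upsample_linear(chroma))
```

For the chroma row [0, 0, 100, 100], duplication gives [0, 0, 0, 0, 100, 100, 100, 100], a hard step that can only land on even pixel positions, while interpolation gives [0, 0, 0, 25, 75, 100, 100, 100]. In two dimensions duplication turns every chroma sample into a 2x2 block, which is where the stair steps along a curved colored edge come from.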

If you are distributing this to others, these YV12-->RGB conversion steps are not under your control. They will get whatever their players give them. For your personal playback, you can check whether your player offers any control over the YV12 to RGB conversion.

Encoding with 4:4:4 subsampling rather than 4:1:1 would eliminate both these problems. But I'm not sure how widespread the support for 4:4:4 h.264 is. There is also the issue of which software is converting RGB to YV12 for compression. An RGB source might be converted to YV12 by the editor before being passed to the h.264 encoder. In this case 4:4:4 encoding would only eliminate the jagged edges. Or the editor may pass RGB to the h.264 encoder, in which case you will get the full benefit of 4:4:4 encoding (assuming playback support for 4:4:4 h.264). I don't know the software you are using (I'm working under Windows), so I can't give you specific help on that.
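For reference, here is a hedged Python sketch that only builds (and prints) a command line for the ffmpeg command-line tool with libx264, requesting 4:4:4 output via `-pix_fmt yuv444p`. It assumes a reasonably modern ffmpeg built with libx264 (older builds use `-vcodec libx264` instead of `-c:v libx264`), and that the RGB master is fed straight to ffmpeg so the RGB-to-YUV conversion happens there at full chroma resolution. Whether a GUI front end such as ffmpegX exposes this option is not confirmed here; the file names and CRF value are placeholders.

```python
# Sketch: build an ffmpeg/libx264 command that keeps full 4:4:4 chroma.
# Assumptions: command-line ffmpeg with libx264; file names and CRF are
# placeholders. The command is only printed, not executed.
import shlex


def x264_444_command(src, dst, crf=18):
    """Return the argument list for a 4:4:4 x264 encode of `src` into `dst`."""
    return [
        "ffmpeg",
        "-i", src,               # ideally the RGB master, not a YV12 intermediate
        "-c:v", "libx264",
        "-pix_fmt", "yuv444p",   # no chroma subsampling
        "-crf", str(crf),        # constant-quality mode; lower means higher quality
        dst,
    ]


if __name__ == "__main__":
    cmd = x264_444_command("master.mov", "out_444.mp4")
    print(shlex.join(cmd))
    # To run it for real: subprocess.run(cmd, check=True)
```

x264 encodes such a file with the High 4:4:4 Predictive profile, so the playback caveat above applies: many players and hardware decoders only handle 4:2:0 H.264.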

As you've noticed, using different colors can make the artifacts less noticeable. Using different intensities (brightness) for the different objects may help a lot too.
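One way to act on this is to check the luma difference between neighbouring object colors, since luma is the only plane kept at full resolution. A Python sketch, assuming 8-bit RGB and BT.601 luma weights (the helper names are hypothetical):

```python
# Sketch: how much of an edge between two flat colours is carried by luma.
# Assumptions: 8-bit RGB and BT.601 luma weights (BT.709 weights differ).


def luma601(rgb):
    """BT.601 luma (0-255) of an (r, g, b) tuple."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b


def luma_contrast(c1, c2):
    """Absolute luma difference between two colours; small means a chroma-only edge."""
    return abs(luma601(c1) - luma601(c2))


if __name__ == "__main__":
    red, dark_grey, white = (200, 0, 0), (60, 60, 60), (255, 255, 255)
    print("red vs dark grey:", luma_contrast(red, dark_grey))
    print("red vs white:", luma_contrast(red, white))
```

For example, a saturated red (200, 0, 0) against a dark grey (60, 60, 60) differs by only about 0.2 in luma, so that edge lives almost entirely in the subsampled chroma planes; the same red against white differs by about 195.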

-

Many thanks for your analysis of the problem; I am sure this will help me find a solution!

Does anyone reading this know how to do this ("Encoding with 4:4:4 subsampling rather than 4:1:1 would eliminate both these problems.") with ffmpegX or any other Mac software? A known workflow from similar cases would be really helpful now. Any ideas, anyone...?

And thanks again jagabo for taking your time to look at this and for all your explanations!
