I'm wondering if there is a "standard" color correction that should be used on DV footage. I view my footage on a calibrated Sony Trinitron monitor, and all of it looks washed out. Blacks don't look black, and basically all the footage looks like it could use a little saturation. I used to monitor my footage on a consumer TV, and all of my colors looked great. I've read that consumer TVs use circuitry that alters the original signal to make it look better, while broadcast monitors show the native signal with no enhancements. If this is true, then my DV footage just isn't very vibrant (which probably has something to do with its color sampling). I've got an array of color correction tools at my disposal, so I'm wondering if there is an industry standard correction that should be applied to all of my DV footage.

Thanks in advance.


Explain your capture methods and source.

A DV consumer camcorder transferred over IEEE-1394 should have solid blacks but hot to clipped whites. You would tone down whites from 255 to nominal 235, then contrast should look fine.
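As a rough illustration of "toning down whites from 255 to nominal 235", here is a minimal sketch of a linear luma rescale that holds black at 16 and pulls the white peak down. The function name and its parameters are hypothetical, and a real editor's levels or proc-amp filter may use a different curve.

```python
def tone_down_whites(y, black=16, src_white=255, dst_white=235):
    """Linearly rescale one 8-bit luma value so src_white lands on dst_white.

    Black (16 by default) is the fixed point, so shadows are left alone
    and only the upper range is compressed. Hypothetical helper.
    """
    scale = (dst_white - black) / (src_white - black)
    out = black + (y - black) * scale
    # Clamp to the 8-bit range before rounding to an integer code value.
    return int(round(min(max(out, 0), 255)))


if __name__ == "__main__":
    for level in (16, 128, 235, 255):
        print(level, "->", tone_down_whites(level))
```

Black stays at 16 and a clipped 255 lands on 235, with everything in between scaled proportionally.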

You seem to be describing an analog capture that places analog IRE 0 (blanking level) at digital 16 thus placing NTSC 7.5 IRE blacks up at digital 32 (a gray).
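The arithmetic behind that "digital 32" figure can be sketched as follows, assuming a simple linear mapping in which the capture device places a chosen IRE level at digital 16 and 100 IRE at digital 235. The helper name is hypothetical, and real capture hardware may differ slightly.

```python
def ire_to_digital(ire, black_ire):
    """Map an analog IRE level to an 8-bit luma code.

    black_ire is the IRE level the capture device places at digital 16;
    100 IRE is assumed to land on digital 235 (a 219-step range).
    """
    return round(16 + (ire - black_ire) / (100 - black_ire) * 219)


if __name__ == "__main__":
    # Capture that treats 0 IRE (blanking) as digital 16:
    # NTSC black at 7.5 IRE ends up well above 16, i.e. a dark gray.
    print("7.5 IRE, blanking at 16:", ire_to_digital(7.5, black_ire=0.0))
    # Capture that treats 7.5 IRE (setup) as digital 16:
    print("7.5 IRE, setup at 16:   ", ire_to_digital(7.5, black_ire=7.5))
```

With blanking pinned to 16, the 7.5 IRE black is lifted by about 16 code values, which is what makes the picture look washed out.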

Explain what you are doing and we'll zero in on the problem.

Recommends: Kiva.org - Loans that change lives.

http://www.kiva.org/about

When you say calibrated Sony Trinitron monitor, is that a computer monitor or a studio monitor?

If the former, that is why.

John Miller

I agree that you must properly calibrate (set levels) for the monitor first. Then you evaluate sources.


No, I'm talking about my DV footage captured over the IEEE-1394 interface. If I understand the 0 IRE vs. 7.5 IRE issue, I should have my setup level set to 0 if I'm editing footage that was transferred into my system via the IEEE-1394 interface (i.e. DV). If I want to view my analog footage (i.e. footage I captured via analog, like a VCR tape), then I would change my setup level to 7.5, thus giving me the right blacks on my monitor. Please correct me if this is inaccurate. But right now I am just viewing DV.


Are you playing a consumer camcorder directly into a studio monitor (composite or S-Video)?

You need to see this JVC tutorial:

http://pro.jvc.com/pro/attributes/prodv/clips/blacksetup/JVC_DEMO.swf

No, it is footage that I'm viewing from my Premiere timeline. My Matrox breakout box is connected to my studio monitor via S-Video though.


If you are certain of your monitoring, then what is this video and how did you capture it?

It obviously has incorrect levels.

Do you have a known good source to compare?

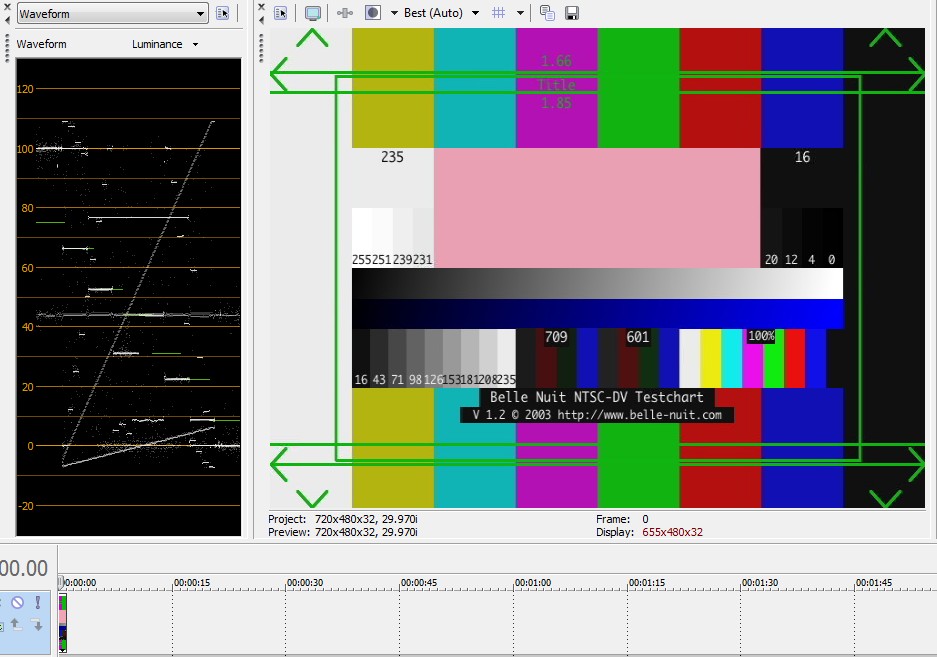

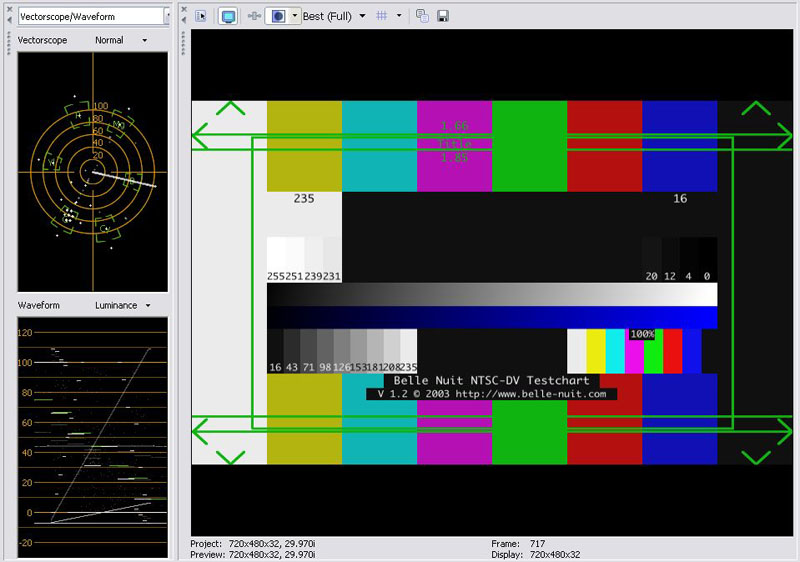

A good test pattern to use for monitor brightness, contrast and gamma setting is the Belle Nuit DV test chart.

http://www.belle-nuit.com/testchart.html

Put it on your timeline and check your monitor path.


Be sure you convert those RGB charts to DV correctly. Here's a small DV AVI file with a correct conversion:

https://forum.videohelp.com/topic346864.html#1820664


Whoa, I think that I am now completely confused.

Maybe there is no simple answer, but I was hoping someone could just tell me what my settings should be.

I've got a Matrox card that has a breakout box for analog output. I'm using S-Video to go from the breakout box to my Sony Trinitron studio monitor. This monitor has been calibrated by dropping color bars on my timeline and then adjusting accordingly.

Inside my Matrox settings I have the ability to change (under playback settings/Analog Setup) to either 0 or 7.5 IRE.

I input my DV footage via a Sony DV deck.

If I understand everything correctly, my black levels are laid down on my tape by my camera. An exact copy is then transferred to my computer (black levels should be the same: 0 IRE, or digital 16). The adjustment that I can make inside my Matrox settings only affects the analog signal that is being pushed out for monitoring purposes. This should be set to 0 IRE to match the signal, or to 7.5 if the source was an analog video tape.

If everything on my end is correct, the only thing I can think of is that either my studio monitor is not calibrated correctly or a DV signal just isn't very saturated to begin with.

Are you sure you did this correctly? What was the source of your color bars? If you imported a JPG, BMP, or some other computer-graphics image, the default for most programs is to use a Rec.601 conversion matrix, which compresses the contrast of the RGB data from 0-255 to luma 16-235. Vegas appears to be the exception to this, defaulting to a PC.601 matrix, which leaves the luma levels intact.
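To make the difference between the two conversions concrete, here is a minimal sketch that computes luma both ways, assuming the BT.601 luma coefficients (0.299, 0.587, 0.114). The function names are hypothetical and only luma is shown; chroma is left out.

```python
# BT.601 luma weights for R, G, B (assumed for standard-definition DV).
KR, KG, KB = 0.299, 0.587, 0.114


def luma_full_range(r, g, b):
    """Full-range conversion: RGB 0-255 maps to luma 0-255 unchanged."""
    return round(KR * r + KG * g + KB * b)


def luma_studio_range(r, g, b):
    """Studio-range conversion: RGB 0-255 is compressed into luma 16-235."""
    return round(16 + (219 / 255) * (KR * r + KG * g + KB * b))


if __name__ == "__main__":
    for name, rgb in (("black", (0, 0, 0)), ("white", (255, 255, 255))):
        print(name, "studio:", luma_studio_range(*rgb),
              "full:", luma_full_range(*rgb))
```

If color bars drawn as RGB 0-255 go through the full-range path, their black sits at luma 0 and their white at 255, so a monitor calibrated against them will not match footage whose black is at 16.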

I believe analog NTSC in the USA and Canada will always be 7.5 IRE. 0 IRE would be for Japan.

Note that most consumer and prosumer DV camcorders have incorrect black levels. You'll find black to be around luma 32 instead of 16. They also usually exceed 235 at the high end.
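One way to check a clip for these two symptoms is to look at the minimum and maximum luma in a frame. The sketch below is only an illustration: `check_levels` and its `tolerance` threshold are hypothetical, and it assumes you have already extracted the 8-bit luma samples of a frame into a list by some other means.

```python
def check_levels(luma_samples, black=16, white=235, tolerance=4):
    """Report whether a frame's luma suggests raised blacks or superwhites.

    luma_samples: iterable of 8-bit luma values from one frame.
    tolerance: how far above nominal black the darkest sample may sit
    before it is flagged (an arbitrary illustrative threshold).
    """
    samples = list(luma_samples)
    lo, hi = min(samples), max(samples)
    return {
        "min": lo,
        "max": hi,
        "raised_black": lo > black + tolerance,
        "superwhite": hi > white,
    }


if __name__ == "__main__":
    # Made-up sample values: darkest pixel at 32, brightest at 250.
    print(check_levels([32, 60, 120, 200, 250]))
```

The raised-black flag only means something on a frame that actually contains true black, such as a shot recorded with the lens capped.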

If this is an NTSC studio monitor, it will be expecting black at 7.5 IRE and white at 100 IRE. Your Matrox card should be set to 7.5 IRE for black. The zero setting should convert digital 16 to analog 0 IRE. This would show as crushed blacks. The 7.5 IRE selection should map digital 16 to analog 7.5 IRE.
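The two output settings can be compared with a small sketch. It assumes the card maps digital 16 to the chosen setup level and digital 235 to 100 IRE along a straight line; that is an illustrative model, not documented Matrox behavior, and the function name is hypothetical.

```python
def digital_to_ire(level, setup_ire):
    """Map an 8-bit luma code to analog IRE for a given setup level.

    Digital 16 is placed at setup_ire and digital 235 at 100 IRE,
    with a linear ramp in between (assumed model).
    """
    return setup_ire + (level - 16) / 219 * (100 - setup_ire)


if __name__ == "__main__":
    for setup in (0.0, 7.5):
        levels = {lvl: round(digital_to_ire(lvl, setup), 1)
                  for lvl in (16, 32, 235)}
        print(f"setup {setup} IRE:", levels)
```

With setup at 0, digital 16 comes out at 0 IRE and even digital 32 sits just under 7.5 IRE, so on a monitor that expects black at 7.5 IRE the darkest shadow detail falls at or below black.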

The easy way to confirm monitoring is set correctly is to play the Belle Nuit color bar above and verify black (level 16) is at black and all sub black 0-16 is black. Level 20 should be visible above black.

At the white end of the contrast range, levels 239, 251 and 255 should be visible as whiter than white.

If that is the case, we can turn to the input side.

If this is a camcorder generated playback imported over IEEE-1394, then camcorder black should be black on your DV timeline. If not something is wrong with the camcorder exposure. Check black with the lens capped.

If this is an analog capture through the camcorder, then you may have the level 32 black issue. You need to describe in great detail how you are capturing analog.

Okay, I've figured out my biggest problems. A really big reason that everything was looking washed out was that I had my S-Video running from my breakout box to a Dynex switch. I ran the S-Video directly to my production monitor, and it really snapped the whites whiter and the blacks blacker. Another setting I found on my Sony production monitor is a color temperature setting. From everything I've read, D6500 seems to be the correct setting, although things match up better to my color-corrected computer monitor with the D9300 temp.

Also, EDTV... if I'm understanding you right, when I'm working with DV footage imported over the IEEE-1394 interface I should have my Matrox card set to 7.5 IRE (for monitoring on an analog studio monitor)? That would seem to directly contradict this info - http://forum.matrox.com/rtx2/viewtopic.php?t=3374&highlight=

Thanks...

It's my understanding that all YUV codecs (DV, MPEG, maybe not MJPEG) use IRE=0 (luma=16) for black and IRE=100 (luma=235) for white, worldwide. The appropriate output from any USA NTSC analog video device should include 7.5 IRE setup.
