When using Vegas 7, after I finish my editing, I render using the DVD Architect template for an MPEG2. Does this automatically provide the optimal bit rate for the amount of video I'm rendering? Or should I use the bit rate calculator and make custom adjustments?

I just want to know if I am getting the best results that I possibly can. I am kind of lost on the whole variable bit rate and the fixed bit rate, so I never change anything. Thanks.

Jeff

-

Vegas defaults to 6Mb/s VBR (as do Premiere and others). This works out to about 90min per DVD. This data rate assumes professionally shot source footage.

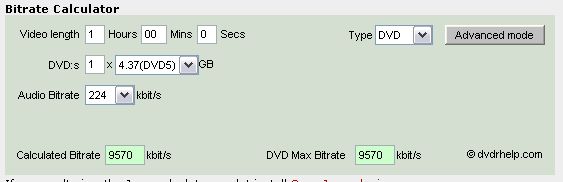

Use the calculator to figure the highest bitrate for the minutes (and audio settings) that you want to use.

https://www.videohelp.com/calc

IMO, 6Mb/s VBR is inadequate for handheld camcorder material. I'd use 8.5Mb/s or more.

-

Maximum per the calc is 9.57Mb/s (9750 Kb/s) for video when audio is compressed to 224 Kb/s. CBR should be used for higher bitrates.
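For anyone curious about the arithmetic behind a calculator like this, here is a rough sketch, not the linked calculator's actual method. It assumes a nominal 4.7 GB single-layer disc (4,700,000,000 bytes) and ignores muxing and menu overhead unless you pass an `overhead` fraction; the function and parameter names are made up for illustration. It also does not apply any format ceiling, so very short running times will return numbers higher than a DVD can actually use.

```python
# Nominal single-layer DVD capacity in bytes (assumption: "4.7 GB" decimal).
DVD5_BYTES = 4_700_000_000


def video_kbps(minutes, audio_kbps=224, capacity_bytes=DVD5_BYTES, overhead=0.0):
    """Estimate the average video bitrate (Kb/s) that fills the disc.

    overhead is a hypothetical fraction of the disc reserved for
    muxing, menus, etc. (0.0 means none is reserved).
    """
    seconds = minutes * 60
    usable_bits = capacity_bytes * 8 * (1 - overhead)
    total_kbps = usable_bits / seconds / 1000
    # Whatever the audio track does not use is left for video.
    return total_kbps - audio_kbps


if __name__ == "__main__":
    for m in (60, 90, 120, 150):
        print(m, "min ->", round(video_kbps(m)), "Kb/s video")
```

Real calculators reserve some space for overhead, so expect them to report somewhat lower figures than this sketch.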

-

edDV,

Thanks for your reply. Does it matter if I select VBR vs. CBR? What determines which I should select? Thanks for the info.

Jeff

-

Yes it does. Here's an example. Bit rate calculators return bit rates based on CBR. Let's say I have 150 minutes (2.5 hours) of movie to encode. My bit rate calculator tells me to use a bit rate of 3489 Kbps. This is CBR. You should always go under what it says. Probably in this case I'd use 3300 or 3350. The bit rate value you get will completely fill a DVD, which is not what you want. If you use 3489 and the bit rate at some point in the encode spikes to 3800 (this can easily happen), you will have more video than can fit on a DVD. That is why you go under the bit rate calculator value.

However, in this case, instead of using CBR, I could use VBR with an average bit rate of 3350, a maximum bit rate of 4350 and a minimum bit rate of 2350. VBR tells the encoder to use higher bit rates on more complex parts of the video, which will improve quality, and to use lower bit rates on less complex parts of the video, say, something that is mostly black, which won't need a lot of bit rate to encode. By using VBR, you have improved the quality of your encode by using more bits when you need them and fewer when you don't. However, it is IMPOSSIBLE (I capitalize this so you won't miss the point) to predict the end result of a VBR encode, and you can get too much video to fit on a DVD, in which case you have to reduce the VBR settings and try again.

Basically, as a very general rule of thumb, I would say consider using VBR for any encodes of 90 minutes or more and CBR for anything less than 90 minutes. At high bit rates, there's not really much difference to most people between, say, 7500 Kbps and 8500, so you might as well just stick to CBR, as VBR won't really get you anything.
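To make the numbers in this post concrete, here is a small sketch of the same arithmetic. The helper names, the 139 Kbps margin and the 1000 Kbps swing are only chosen to reproduce the figures above (3489 down to 3350, then plus or minus 1000); they are not settings taken from Vegas or any encoder. The size estimate assumes the encode actually lands on its average bit rate and uses 224 Kbps audio, which a real VBR encode may not do.

```python
def vbr_settings(calc_kbps, margin_kbps, swing_kbps):
    """Back off from the calculator value, then spread min/max around it."""
    avg = calc_kbps - margin_kbps
    return {"min": avg - swing_kbps, "avg": avg, "max": avg + swing_kbps}


def projected_bytes(avg_video_kbps, minutes, audio_kbps=224):
    """Rough output size if the encode hits its average bit rate exactly."""
    total_bits_per_second = (avg_video_kbps + audio_kbps) * 1000
    return total_bits_per_second * minutes * 60 / 8


if __name__ == "__main__":
    settings = vbr_settings(3489, margin_kbps=139, swing_kbps=1000)
    print(settings)
    size_gb = projected_bytes(settings["avg"], minutes=150) / 1e9
    print(f"projected size: {size_gb:.2f} GB")
```

If the projected size is too close to the disc capacity for comfort, lower the average and run the numbers again before committing to a long encode.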

-

My theory is that higher-bitrate CBR should be used for non-professional handheld camcorder footage. The video is shaky (in x-y and rotation), and fast pans and zooms are common. This throws off the VBR encoder's bitrate-allocation algorithm, causing wild bitrate swings. CBR forces a constant bitrate.
