So, using my CCD-TRV85 (built-in DNR + TBC) and an I-O DATA USB capture device feeding AmaRecTV, I'm comparing samples uploaded with...

built-in TBC on and DNR on,

https://mega.nz/#!haRnDKyI!qpDcWZ852qoaRBS0Vmii3aGxzwuv9pY7msDC_96vwbM

built-in TBC on and DNR off,

https://mega.nz/#!0OBn3LKZ!g6UvVxWzZKvzTccMQ2fK2Z2WB62Sp55eO8H5Co-0jXs

built-in TBC off and DNR off.

https://mega.nz/#!VaYmVJza!s3UxKehsvgRwNICIr3Vbf5d5bx3SBJz24uPhEn7tC58

I can't tell a difference between any of them! This is one of the most recent tapes I have; maybe a 30-year-old tape would show the differences more clearly. What do the experts think?

-

Why has your video been resized vertically? You've ruined the interlacing, and it can no longer be deinterlaced properly. It also makes it hard to tell how well the TBC is working and makes the other issues harder to see.

But... the time base on the tape/player is pretty clean, so the line TBC isn't improving it by much -- but it is helping. The NR circuit isn't doing a whole lot.

-

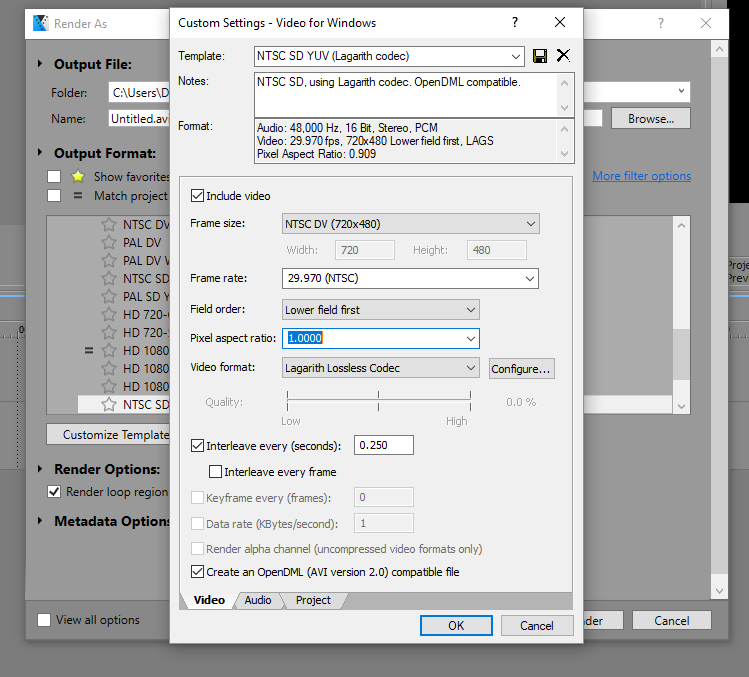

Wow, that's impressive that you can tell that. I think I may have found the problem: in Sony Vegas 14 (just downloaded last night, still learning it) I spliced the sample from the raw AmaRecTV AVI, and when I made a custom template (using the Lagarith codec to keep it lossless) the pixel aspect ratio was set to .9091, so I assumed that's how it should be. Here's another sample with it set to 1.00; I think the video is fixed now.

https://mega.nz/#!5bo3AI5Q!YUTwSwaw3vFkDIfgvPT_WF6p9ZtIBR6yxfcL577UqtQ

Obviously I wouldn't know when these videos look best if it hit me in the head!

Also, here's a picture of the setting I believe was messed up.

As you can see I'm using the "NTSC DV" resolution, 720x480, but only because I'm importing video in AmaRecTV as 720x480. It probably doesn't matter, but the NTSC standard is 720x486. Should I be importing at that resolution, since I'm not using DV for anything? Or would that just give me 6 more lines I don't need?

And should I upload even Video8 films (which only have 240 lines) at that same resolution as well? Thanks a lot.

Last edited by videon00b; 15th Jul 2017 at 00:03.

-

The active picture in an analog NTSC signal is 485 lines -- half a line at the top, half a line at the bottom and 484 full scan lines in between. Computers like to work in rectangular arrays, so the two half lines become full lines, for a total of 486. But almost all devices capture only the middle 480 lines now. That's what you want to use.

The sample in post #3 now has two distinct fields.

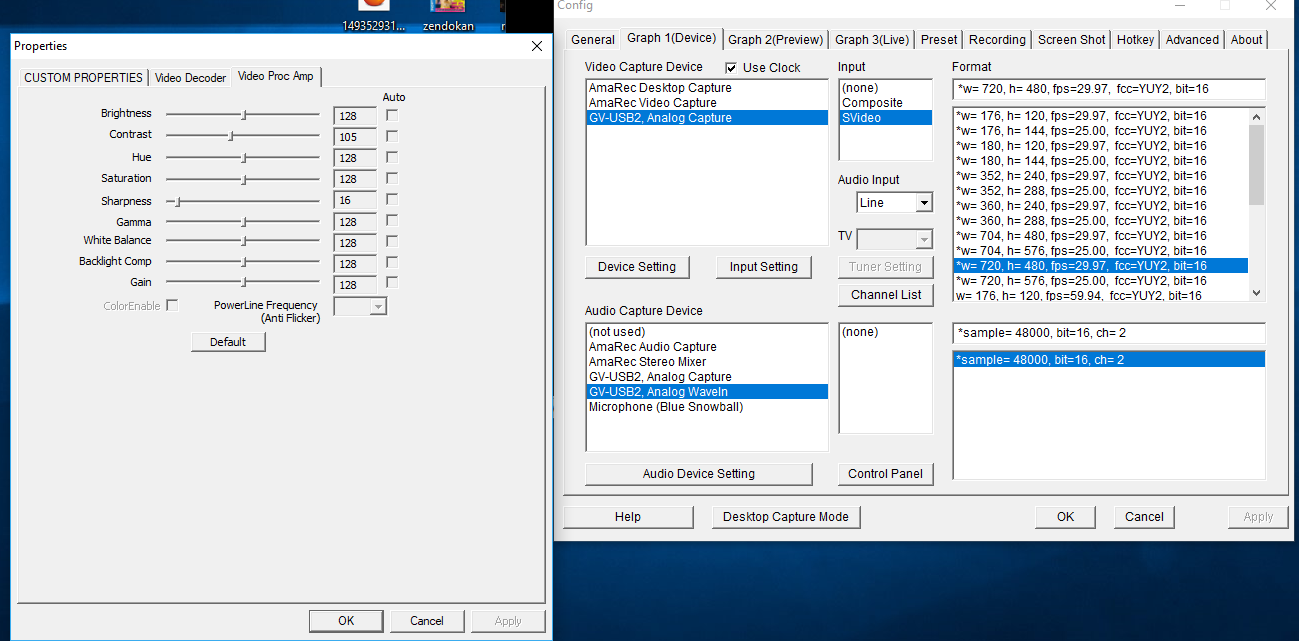

All your samples have been RGB. You should be capturing, saving, and uploading YUV 4:2:2 -- usually YUY2 or UYVY or whatever variation your capture device/drivers allow. That is closest to the analog signal and has a little headroom and footroom to help keep brights from blowing out and darks from getting crushed. The clouds in your caps are pegged at RGB=255, so there is probably some detail there that YUV would allow you to recover.

-

What do you mean by my video having 2 distinct fields? I assume that's a good thing, but what fields are they? (Are they the horizontal lines being interlaced in every other frame?)

Edit: I just realized when I was rendering my videos that I hadn't configured the color format to be YUY2 instead of RGB in the advanced options. As I thought, my incompetence with Vegas was the problem. I believe this video sample is properly colored at 4:2:2 now (with 1.0 pixels and re-sampling disabled too), is that right? Thanks a lot. https://mega.nz/#!ZLIj2ACS!DBW4qF2hq5k83QO0gun6xllPsXeaDMI7FZH8rt9IssY

And how do you know where my caps are pegged? What software are you using? Thanks. I think I need to straighten this out before I get more samples comparing TBC.

Last edited by videon00b; 15th Jul 2017 at 20:10.

-

Are you asking how to determine the colorspaces involved? I used a simple AviSynth script, although there are lots of ways. For the first RGB32 pic the script used was:

AviSource("1 TBC DNR ONavi.avi")

Info()

For the second YUY2 pic the script used was:

AviSource("lossless.avi")

Info()

The Info command provides lots of sometimes useful information. MediaInfo can provide the colorspace of the video as well, as can other apps and programs. Here's what MediaInfo had to say about the same RGB video:

Code:

General
Complete name : H:\Test\1 TBC DNR ONavi.avi
Format : AVI
Format/Info : Audio Video Interleave
File size : 142 MiB
Duration : 13 s 347 ms
Overall bit rate : 89.3 Mb/s
TCOD : 0
TCDO : 133466666

Video
ID : 0
Format : Lagarith
Codec ID : LAGS
Duration : 13 s 347 ms
Bit rate : 87.6 Mb/s
Width : 720 pixels
Height : 480 pixels
Display aspect ratio : 3:2
Frame rate : 29.970 FPS
Standard : NTSC
Color space : RGB
Bit depth : 8 bits
Bits/(Pixel*Frame) : 8.454
Stream size : 139 MiB (98%)

Audio
ID : 1
Format : PCM
Format settings, Endianness : Little
Format settings, Sign : Signed
Codec ID : 1
Duration : 13 s 347 ms
Bit rate mode : Constant
Bit rate : 1 536 kb/s
Channel(s) : 2 channels
Sampling rate : 48.0 kHz
Bit depth : 16 bits
Stream size : 2.44 MiB (2%)
Alignment : Aligned on interleaves
Interleave, duration : 247 ms (7.41 video frames)
Interleave, preload duration : 250 ms
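For anyone wondering how two of the derived numbers in that report relate to the raw ones, here is a rough Python sketch. It assumes that Bits/(Pixel*Frame) is simply the video bit rate divided by width x height x frame rate, and that the 3:2 display aspect ratio is just 720/480 with square pixels. The function name is made up for illustration, and the result only lands close to the reported 8.454 because the bit rate shown in the report is rounded.

```python
from fractions import Fraction


def bits_per_pixel_frame(bit_rate_bps, width, height, fps):
    """Average number of bits spent on each pixel of each frame."""
    return bit_rate_bps / (width * height * fps)


# Values copied from the Video section of the MediaInfo report above.
bpp = bits_per_pixel_frame(bit_rate_bps=87.6e6, width=720, height=480, fps=29.970)
print(round(bpp, 3))

# With square pixels the display aspect ratio is simply width / height.
storage_ratio = Fraction(720, 480)
print(storage_ratio)
```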

-

A frame of interlaced video consists of two half-pictures, called fields. One field occupies all the even scanlines, the other all the odd scanlines (a scanline is one horizontal line of pixels). The two fields represent two different points in time, separated by 1/60 second in NTSC video. I.e., they are two different pictures. In your earlier samples the two fields were partially blended together so that neither field could be extracted as a separate image. In the latest video the two fields are intact, so they can be viewed and manipulated properly. So, yes, this is a good thing.
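To make the field idea concrete, here is a toy Python sketch. It is not what AviSynth or Vegas actually do internally: the frame is just a list of scanlines, and `blend_adjacent_lines` is a made-up, crude stand-in for a vertical resize. The pixel values (10 for the first field, 200 for the second) are arbitrary.

```python
# Toy model: a frame is a list of scanlines, each scanline a list of luma values.

def separate_fields(frame):
    """Return (even_field, odd_field): scanlines 0, 2, 4, ... and 1, 3, 5, ..."""
    return frame[0::2], frame[1::2]


def blend_adjacent_lines(frame):
    """Crude stand-in for a vertical resize: average each line with the next one."""
    last = len(frame) - 1
    return [
        [(a + b) // 2 for a, b in zip(frame[i], frame[min(i + 1, last)])]
        for i in range(len(frame))
    ]


# Even lines come from time t (dark, 10); odd lines from t + 1/60 s (bright, 200).
frame = [[10] * 4 if i % 2 == 0 else [200] * 4 for i in range(8)]

even, odd = separate_fields(frame)
print(even[0], odd[0])

blended_even, blended_odd = separate_fields(blend_adjacent_lines(frame))
print(blended_even[0], blended_odd[0])
```

In the untouched frame the two fields come out as two clean, different pictures. After the blend, the first line of each field holds the same in-between value, so neither original picture can be pulled back out.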

I viewed them with a waveform monitor in AviSynth. Vegas has a similar tool. But I don't know if it will work correctly with your Lagarith encoded videos.

https://forum.videohelp.com/threads/340804-colorspace-conversation-elaboration#post2121568

Last edited by jagabo; 16th Jul 2017 at 23:06.

-

Ah, I see. Thanks for explaining it, guys. I will quit messing with Vegas for now and just focus on getting these videos digitized. Looks like it's time to learn some AviSynth as well.

Would you say that a script like this is still relevant for 8mm home videos? https://forum.doom9.org/showthread.php?t=144271 Thank you.

Last edited by videon00b; 16th Jul 2017 at 23:48.

-

-

HuffYUV should be perfect for what you are setting out to do. It's a compressed lossless codec, so there should be no problems with loss in quality. I believe it also offers greater compression than Lagarith.

As stated above, you will also need to configure your capture device properly to stop recording in RGB and start using YUY2 if you plan on using HuffYUV.

-

Actually, Lagarith offers higher compression than HuffYUV.

Part of the problem he is having is that Vegas doesn't treat "lossless" YUV codecs as YUV. They are not lossless in Vegas. They get converted using "computer RGB" instead of "studio RGB", which means values 0-15 and 236-255 get clipped. Even if there wasn't clipping, or if all his values were "legal", there would still be colorspace losses from converting YUV to RGB.

-

In my experience with SD captures, Lagarith gives the best compression out of Lagarith, HuffYUV, UT Video and MagicYUV (v1.2 Free). Any of these should be perfectly fine for you as they're all lossless, and unless you're going to be capturing on a single-core relic or a newer, hugely under-powered (but cheap) laptop or off-the-shelf "Manager's Special" PC, none of them should cause issues with dropped frames etc. I tend to capture using Lagarith because I capture on a different PC than the one I edit and process on, so smaller files are better for storing and copying onto an external drive for transfer to the other PC, but it's not a massive saving in space. Having said that, when running an AviSynth script through HCEnc on my ageing Intel Core2-Quad desktop, MagicYUV files tend to encode around 12% faster than Lagarith files. (I think UT Video files process at around the same speed as MagicYUV files.) For a good compromise between size and speed I'd probably recommend UT Video, if only because it's still being developed so, hopefully, it should get even better.

-

Lagarith usually gets better compression than Huffyuv. But it's slower. If you find your computer starts dropping frames while capturing you should try Huffyuv instead. Or UT Video Codec.

The other issue, which I made reference to above, is the way Vegas handles various input codecs. With some it converts YUV to RGB right away with a rec.601 matrix. When that happens you lose superbrights (Y>235) and superdarks (Y<16), and you can't get them back via its filtering. I suspect this is what happened with your earlier Lagarith YUV sample -- the bright sky was clipped at Y=235. You may be able to avoid this problem by adjusting your capture device's video proc amp so that there are no superbrights or superdarks to get crushed. Note that proper rec.601 video should have full black at Y=16 and full white at Y=235. There should not be any significant content below Y=16 or above Y=235 (a little noise or oversharpening halos etc. is ok).
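Here is a small Python sketch of why the superbrights and superdarks can't come back after that kind of conversion. It only looks at neutral gray pixels (no chroma), where R, G and B all equal the converted luma, and the two function names are made up for illustration. "Computer RGB" here means stretching Y=16..235 onto 0..255, and "studio RGB" means leaving the levels where they are, as mentioned earlier in the thread.

```python
def clamp8(value):
    """Round to the nearest integer and clamp into the 8-bit range 0..255."""
    return max(0, min(255, int(round(value))))


def gray_to_computer_rgb(y):
    """Map Y=16..235 onto RGB 0..255; anything outside that range is clipped."""
    return clamp8((y - 16) * 255 / 219)


def gray_to_studio_rgb(y):
    """Keep levels as they are, so Y=16..235 lands on RGB 16..235."""
    return clamp8(y)


# Luma value, then its computer RGB and studio RGB equivalents.
for y in (5, 16, 126, 235, 250):
    print(y, gray_to_computer_rgb(y), gray_to_studio_rgb(y))
```

With the computer RGB mapping, Y=235 and Y=250 both end up at 255, and Y=5 and Y=16 both end up at 0, so once the video is in that form no filter can tell them apart again. The studio RGB mapping keeps them distinct.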

Attached is a Lagarith AVI with a test pattern with superdarks and superbrights you can use for testing. See if you can bring out any details in the 0-15 and 235-255 bars with Vegas.

This post has an MPEG version of the same pattern:

https://forum.videohelp.com/threads/374734-Superblacks-and-superwhites-question#post2414529

And this one a DV version:

https://forum.videohelp.com/threads/326496-file-in-Virtualdub-has-strange-colors-when-...ed#post2022085

-

I'm not terribly worried about my computer dropping frames. I'm running an i5-6600K, 16 GB of Corsair Vengeance memory, and an EVGA 980 Ti, so I think I'm OK on that front.

I'm not going to mess with Vegas right now, but thanks for the informative link. It's just too complex for me at this stage. When I get more familiar with video editing I will give it a go. I'll try viewing the waveform monitor in AviSynth now though.

Here's a picture of my proc amp settings. Which settings might I change to ensure there are no superbrights or superdarks? Thank you.

-

-

Cool, I just messed with that and got some much better results, but I think it could still be better. I just posted a reply in this thread, thank you.

https://forum.videohelp.com/threads/384329-Do-these-settings-look-good-in-AmaRecTV-for...es#post2493000

-

Your brights and darks are a little low now. Some devices/software use different terms, but usually contrast is a gain (Y' = Y * contrast) and brightness is an offset (Y' = Y + brightness). So increasing Brightness by about 4 units should get your video just about perfect.
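The gain/offset idea can be sketched in a few lines of Python. This is only a model: applying the gain first and then the offset is an assumption, the black and white levels of 12 and 231 are made-up numbers standing in for a capture that sits about 4 units low, and a real capture driver's "Brightness" units may not map one-to-one onto luma values.

```python
def proc_amp(y, contrast=1.0, brightness=0.0):
    """Apply gain (contrast), then offset (brightness), and clamp to 0..255."""
    return max(0, min(255, round(y * contrast + brightness)))


# Hypothetical capture where black sits at 12 and white at 231.
captured_black, captured_white = 12, 231

print(proc_amp(captured_black, brightness=4), proc_amp(captured_white, brightness=4))
```

Because brightness is a pure offset, it slides blacks and whites up together without changing the distance between them. Contrast is the control to reach for when the distance between black and white is wrong.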
