in GUI you use your mouse and in "Live_Crop" line you select:

[68:96, 344:398]

then press Ctrl+C, that will copy it into your clipboard

then navigate to your Python script and paste that for your first rectangle into this line:

r1 = cv2.cvtColor(img_rgb[68:96, 344:398], cv2.COLOR_BGR2HSV)
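Because the copied text is pasted straight into `img_rgb[...]`, it follows NumPy's order: rows (y) first, then columns (x). A minimal sketch that unpacks such a string, assuming the GUI always emits the `[y0:y1, x0:x1]` form shown above (the helper name `parse_live_crop` is made up for illustration):

```python
import re

def parse_live_crop(text):
    """Parse a string like '[68:96, 344:398]' into (y0, y1, x0, x1)."""
    match = re.fullmatch(r"\s*\[\s*(\d+):(\d+)\s*,\s*(\d+):(\d+)\s*\]\s*", text)
    if match is None:
        raise ValueError(f"not a crop string: {text!r}")
    y0, y1, x0, x1 = (int(group) for group in match.groups())
    return y0, y1, x0, x1

y0, y1, x0, x1 = parse_live_crop("[68:96, 344:398]")
# Height comes from the first pair (rows), width from the second (columns).
print("height:", y1 - y0, "width:", x1 - x0)
```

This is only a convenience for checking which number is which; pasting the text directly into the slice works just as well.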

-

Hello:

I think I now understand the coordinates by your Python tools. Now, I want to use your code for a new transit file: Transit_B1.mp4. (I uploaded it in Post #112)

First, I copied Transit_B1.mp4 to the same folder as Comparator.exe.

Then I run the DOS command to launch the tool:

C:\SoccerVideos\Python\Comparator>Comparator.exe transit_B1.mp4

Second, I went to frame #1114 and cropped 2 images; you can see them in the pictures.

Third, I wrote a Python script, as follows:

C:\SoccerVideos\Python\Comparator>type DetectB1CropFrame.py

Code:
    import numpy as np
    import cv2

    first_found_frame = -11

    def process_img(img_rgb, frame_number):
        r1 = cv2.cvtColor(img_rgb[104:318, 54:156], cv2.COLOR_BGR2HSV)
        lower = np.array([30,125,0])
        upper = np.array([45,255,255])
        mask1 = cv2.inRange(r1, lower, upper)
        if np.average(mask1) > 140:
            r2 = cv2.cvtColor(img_rgb[104:322, 612:736], cv2.COLOR_BGR2HSV)
            lower = np.array([30,125,0])
            upper = np.array([45,255,255])
            mask2 = cv2.inRange(r2, lower, upper)
            if np.average(mask2) > 140:
                global first_found_frame
                if abs(frame_number-first_found_frame) >10:
                    first_found_frame = frame_number
                    print(frame_number)

    vidcap = cv2.VideoCapture(r'transit_B1.mp4')
    frame_number = 0
    while True:
        success,image = vidcap.read()
        if not success:
            break
        process_img(image, frame_number)
        frame_number += 1
    vidcap.release()

Finally, I run my code:

C:\SoccerVideos\Python\Comparator>python DetectB1CropFrame.py

0

11

22

33

44

55

66

77

88

99

110

121

132

143

154

165

176

914

938

949

960

979

990

1001

1124

1135

1146

1157

1168

1179

1190

1201

1212

1223

1234

1245

1256

1267

1278

1289

1384

1395

1406

1483

1494

1505

1516

1527

1538

1549

1560

1571

1582

1593

1604

1615

1626

1637

C:\SoccerVideos\Python\Comparator>

The result is NOT correct, the reason is: I don't understand how to set up those variables:

lower = np.array([30,125,0])

upper = np.array([45,255,255])

and:

lower = np.array([30,125,0])

upper = np.array([45,255,255])

Let me know how I can change the above lower, upper values.

Thanks,

[Attachment 61110 - Click to enlarge]

[Attachment 61111 - Click to enlarge] -

yes, crops you have correct in both images,

setting up values for lower and upper ranges:

again first image you check averages for opencv_HSV values in your selection, they are:

90.3, 85.7, 68.5

which means averages are:

Hue=90.3 , saturation=85.7, value=68.5

so accordingly you give it some wiggle room and use these values for upper and lower limits:

for Hue 80 to 100, for saturation 75 to 100, and all values, that mean 0 to 255, so you get this for first rectangle:

Code:
    r1 = cv2.cvtColor(img_rgb[104:318, 54:156], cv2.COLOR_BGR2HSV)
    lower = np.array([80,75,0])
    upper = np.array([100,100,255])

second rectangle ranges will be the same because averages are almost the same,

then running script you get:

Code:
    1114
    2322
    >>>

-

Setting up those ranges needs human evaluation, because if that color gradient is a bit transparent, the ranges need more wiggle room as opposed to a solid color. So in this last case, we could perhaps make those ranges narrower, because the color seems to be solid, not much transparent, but whatever works.

Also, if you set up three or more rectangles, those ranges could be wider as well, because the odds of hitting a false positive are smaller. -
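The "average plus wiggle room" rule described above can be sketched as a small helper. This is only an illustration: the function name and the default margins are assumptions, the V channel is left fully open as in the posts, and the hue is clamped to OpenCV's 8-bit range of 0 to 179 without any wrap-around handling for red.

```python
def hsv_range_from_average(avg_hsv, hue_margin=10, sat_margin=10):
    """Build (lower, upper) HSV bounds around the averages read from the GUI.

    The margins are a judgment call: wider for transparent gradients,
    narrower for solid colors. V is always left at 0..255.
    """
    h, s, _v = avg_hsv
    lower = (max(0, round(h - hue_margin)), max(0, round(s - sat_margin)), 0)
    upper = (min(179, round(h + hue_margin)), min(255, round(s + sat_margin)), 255)
    return lower, upper

# Averages quoted in the thread for the first rectangle of transit_B1.mp4.
lower, upper = hsv_range_from_average((90.3, 85.7, 68.5))
print(lower, upper)
```

The resulting tuples can be wrapped in `np.array(...)` and passed to `cv2.inRange` exactly like the hand-picked values. The margins still have to be tuned by eye per transition.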

Hello:

Thank you so much for your great help. Finally, I got my Python code to work.

The following is the code:

Code:
    import numpy as np
    import cv2

    first_found_frame = -11

    def process_img(img_rgb, frame_number):
        r1 = cv2.cvtColor(img_rgb[106:322, 26:166], cv2.COLOR_BGR2HSV)
        lower = np.array([80,75,0])
        upper = np.array([100,100,255])
        mask1 = cv2.inRange(r1, lower, upper)
        if np.average(mask1) > 81:
            r2 = cv2.cvtColor(img_rgb[98:318, 612:740], cv2.COLOR_BGR2HSV)
            lower = np.array([80,75,0])
            upper = np.array([100,100,255])
            mask2 = cv2.inRange(r2, lower, upper)
            if np.average(mask2) > 81:
                global first_found_frame
                if abs(frame_number-first_found_frame) >10:
                    first_found_frame = frame_number
                    print(frame_number)

    vidcap = cv2.VideoCapture(r'transit_B1.mp4')
    frame_number = 0
    while True:
        success,image = vidcap.read()
        if not success:
            break
        process_img(image, frame_number)
        frame_number += 1
    vidcap.release()

To run it:

C:\SoccerVideos\Python\Comparator>python DetectB1CropFrame.py

1114

2322

C:\SoccerVideos\Python\Comparator>

However, I still have the last question to ask:

In my code:

I used this condition:

if np.average(mask1) > 81:

and this condition:

if np.average(mask2) > 81:

I got the first condition from this:

Hue=90.3 , saturation=85.7, value=68.5 =>

Average(Hue, Saturation,Value) = (90.3 + 85.7 + 68.5) /3 = 81.5, to give a little room, so I used:

Average(Hue, Saturation,Value) = 81.

For the second condition from this:

Hue=90.1 , saturation=85.9, value=68.3 =>

Average(Hue, Saturation,Value) = (90.1 + 85.9 + 68.3) /3 = 81.4333, to give a little room, so I used:

Average(Hue, Saturation,Value) = 81.

Let me know if I get it right.

Thanks again! -

Hello:

I am thinking about improving performance.

Here is my plan, I can crop MP4 videos using FFMPEG, like this:

C:\SoccerVideos\Python\Comparator>ffmpeg -i transit_B1.mp4 -filter:v "crop=124:218:612:104" Transit_B1_Crop1.mp4

C:\SoccerVideos\Python\Comparator>ffmpeg -i transit_B1.mp4 -filter:v "crop=124:218:612:104" Transit_B1_Crop2.mp4

However, the cropped videos seems to be much bigger than they should be. Let me know if I made any mistake. Transit_B1_Crop1.mp4 file contains only cropped area in the python code for r1 (r1 = cv2.cvtColor(img_rgb[106:322, 26:166], cv2.COLOR_BGR2HSV); Transit_B1_Crop2.mp4 file contains only cropped area in the python code for r2 (r2 = cv2.cvtColor(img_rgb[98:318, 612:740], cv2.COLOR_BGR2HSV).

Anyway, what I want to say is that: if you can use Python to crop Transit_B1.mp4 into 2 mp4 videos, each one contains only the cropped areas as used in the Python code (r1 and r2).

Then, if you can use Python code to read two videos in, but first, scan only the first video:

Transit_B1_Crop1.mp4

If any matching frame is found, let's say you find Frame #1114 is a matching frame in Transit_B1_Crop1.mp4, then use the second video reader to jump directly to frame #1114 and check whether np.average(mask2) meets the requirement. If yes, output the underlying frame number, which is 1114.

This idea will bring some overhead, like FFMPEG cropping the videos (or Python cropping the videos). However, the original code does a lot of cropping and calculation, which is also rather time consuming. With my new idea, if Python can jump directly to a specific frame number, the code can skip the image cropping, which I believe is rather time consuming, as I have a lot of 1 or 2 hour MP4 videos.

Let me know what you think?

Thanks, -
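For checking the ffmpeg commands above: the `crop` filter takes `width:height:x:y`, while the Python slices are `[y0:y1, x0:x1]`. A small sketch of the conversion (the helper name is hypothetical):

```python
def slice_to_ffmpeg_crop(y0, y1, x0, x1):
    """Convert a NumPy-style img[y0:y1, x0:x1] slice to an ffmpeg crop filter."""
    width = x1 - x0
    height = y1 - y0
    return f"crop={width}:{height}:{x0}:{y0}"

print(slice_to_ffmpeg_crop(106, 322, 26, 166))  # r1 from the working script
print(slice_to_ffmpeg_crop(98, 318, 612, 740))  # r2 from the working script
```

By this arithmetic, both commands in the post use `crop=124:218:612:104`, which corresponds to the earlier r2 slice `[104:322, 612:736]`, so neither output file would contain the r1 area.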

That is nonsense, because a mask is a crop with only 0 and 255 values. Check the internet for how masks work.

If half of the pixels fit, you use 128. So you tolerate half of the pixels not being in those ranges. It is a tuning number: the higher it is, the less tolerance.

As for the other case, checking only the cropped parts: sure, you run process_img() two times with cropped images and if both are successful, you print the frame.

I have to run now, will post code later. -
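To make the point about masks concrete: since every mask pixel is either 0 or 255, the mask average divided by 255 is simply the fraction of pixels inside the range. The sketch below uses plain Python lists in place of a real `cv2.inRange` result, and the tiny mask is made up for illustration.

```python
def mask_average(mask_rows):
    """Average of all mask pixels (each pixel is 0 or 255)."""
    values = [value for row in mask_rows for value in row]
    return sum(values) / len(values)

def matching_fraction(mask_rows):
    """Fraction of pixels that fell inside the lower/upper range."""
    return mask_average(mask_rows) / 255.0

# Hypothetical 2x4 mask in which exactly half of the pixels matched.
mask = [
    [255, 255, 0, 0],
    [255, 0, 255, 0],
]
print(mask_average(mask))       # what np.average(mask) would report
print(matching_fraction(mask))  # share of matching pixels
```

Read this way, a threshold of 81 accepts a rectangle when roughly 32% of its pixels match, 140 needs about 55%, and 190 about 75%. The threshold has nothing to do with the H, S and V averages themselves.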

Hello:

I want to use the Comparator tool to find the Key Frames from another transit MP4 video: Transit_D1.mp4.

However, I found that at least 3 frames are quite similar.

I don't know where I can crop the frame to find any unique properties to detect.

I found the key frames seem to be rather dark (black), so I think maybe I can use the frame's brightness (V value from HSV) as a standard. But I don't know how to setup the conditions.

Please advise.

See the pictures and Transit_D1.mp4 for reference.

Thanks,

[Attachment 61131 - Click to enlarge]

[Attachment 61132 - Click to enlarge]

[Attachment 61133 - Click to enlarge] -

Make a little rectangle for red and yellow, not both red, because player jerseys could be red. Or add also some blue rectangle. Those rectangles could be really tiny, if you have them more. Also those are solid colors, so wiggle room could be really narrow.

Regarding duplicate frames, this part takes care of it and returns only one frame for a transition:

Code:
    first_found_frame = -11
    def process_img(....
        .
        .
        .
        global first_found_frame
        if abs(frame_number-first_found_frame) >10:
            first_found_frame = frame_number
            print(frame_number)

That makes sure that found frames are 10 frames apart.

Last edited by _Al_; 4th Oct 2021 at 16:40.
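The same duplicate-suppression logic can be isolated as a generator, which makes its behavior easy to try on a list of hits. This is a sketch only: the function name is an assumption and the list of hits is invented to show what happens when a transition stays on screen for longer than the gap.

```python
def debounce(frame_numbers, min_gap=10):
    """Yield a frame only if it is more than min_gap frames after the last yielded one."""
    last_reported = -(min_gap + 1)  # same trick as first_found_frame = -11
    for number in frame_numbers:
        if abs(number - last_reported) > min_gap:
            last_reported = number
            yield number

# Hypothetical raw hits: one transition visible for 12 frames, then a second one.
hits = [1114, 1115, 1120, 1124, 1125, 2322]
print(list(debounce(hits, min_gap=10)))
print(list(debounce(hits, min_gap=20)))
```

With a gap of 10 the long transition is reported twice (1114 and again at 1125), while a gap of 20 reports it once. The gap therefore has to exceed the number of frames a transition stays visible.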

-

ok last time, I really think you should get it by now:

Code:
    import numpy as np
    import cv2

    #green values are pasted from GUI

    first_found_frame = -21

    def process_img(img_rgb, frame_number):
        r1 = cv2.cvtColor(img_rgb[98:116, 328:350], cv2.COLOR_BGR2HSV)
        lower = np.array([170,230,0])   #ranges for averages: 174.7, 250.0, 220.2
        upper = np.array([180,255,255])
        mask1 = cv2.inRange(r1, lower, upper)
        if np.average(mask1) > 190:     #190 , closer to 255, colors are solid, well defined, so mask will have more matches
            r2 = cv2.cvtColor(img_rgb[140:166, 374:402], cv2.COLOR_BGR2HSV)
            lower = np.array([20,210,0])    #ranges for averages: 27.6, 245.4, 251.2
            upper = np.array([40,255,255])
            mask2 = cv2.inRange(r2, lower, upper)
            if np.average(mask2) > 190:
                global first_found_frame
                if abs(frame_number-first_found_frame) >20:  #omit following 20 frames if a frame is found
                    first_found_frame = frame_number
                    print(frame_number)

    vidcap = cv2.VideoCapture('transit_D1.mp4')
    frame_number = 0
    while True:
        success,image = vidcap.read()
        if not success:
            break
        process_img(image, frame_number)
        frame_number += 1
    vidcap.release()

Code:
    1087
    1700
    >>>

I just increased that interval to ignore frame if first found to 20 frames, because that transition appears on screen for longer that 10 frames

Last edited by _Al_; 4th Oct 2021 at 22:17.

-

Hello:

Thank you very much for your code and explanation.

I still have some questions about the lower/upper limit for different colors.

Refer to this article:

https://pysource.com/2019/02/15/detecting-colors-hsv-color-space-opencv-with-python/

The author uses python code to detect different colors in video.

I see he has the following lower/upper limits:

# Red color

low_red = np.array([161, 155, 84])

high_red = np.array([179, 255, 255])

red_mask = cv2.inRange(hsv_frame, low_red, high_red)

red = cv2.bitwise_and(frame, frame, mask=red_mask)

# Blue color

low_blue = np.array([94, 80, 2])

high_blue = np.array([126, 255, 255])

blue_mask = cv2.inRange(hsv_frame, low_blue, high_blue)

blue = cv2.bitwise_and(frame, frame, mask=blue_mask)

# Green color

low_green = np.array([25, 52, 72])

high_green = np.array([102, 255, 255])

green_mask = cv2.inRange(hsv_frame, low_green, high_green)

green = cv2.bitwise_and(frame, frame, mask=green_mask)

# Every color except white

low = np.array([0, 42, 0])

high = np.array([179, 255, 255])

mask = cv2.inRange(hsv_frame, low, high)

result = cv2.bitwise_and(frame, frame, mask=mask)

I want to know if there is one general rules for this kind of lower/upper limit to detect color.

Unfortunately, the color yellow is missing.

Or we have to give different lower/upper limit depend on our situation?

By the way, how do you get the lower/upper limit for the yellow color in this video?

I found he has last thing called "Every color except white", so we can use this for yellow?

Thanks, -

-

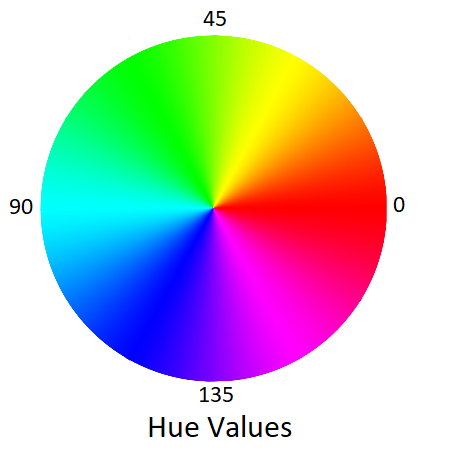

That is what I have been explaining for a couple of days. Say the average for H you get in the GUI is 25.5. Then you set:

low = np.array([20, xxx, xxx])

high = np.array([30, xxx, xxx])

Do you understand where those 20 and 30 values come from? The average is 25.5, so you guess the range around it. The wider the range, the more likely you are to get a false positive.

That is the general rule for a solid color. It also holds for a slightly transparent one, but then you have to give it more range.

-

Hello:

I run the Avs Tools in Post #80, so I can see the following picture.

For your Python code for Transit_D1

lower = np.array([170,230,0])

upper = np.array([180,255,255])

Therefore, the average HUE in Comparator tools is: 174.7, so the lower limit for HUE is: 170, and upper limit for HUE is 180.

Right?

But I can't find how to get the lower/upper limit for saturation from the AVS tools in Post #80.

The lower/upper limit for saturation seem to be different from AVS script in Python.

Please advise!

[Attachment 61146 - Click to enlarge] -

No. The limits depend on the video. You want the range to be wide enough to select most of the hues of the area you're trying to match (to avoid false negatives), but narrow enough not to match other similar colors (to avoid false positives).

Those scripts don't deal with saturation. What I usually do is get the hue range first, then adjust the saturation range. Starting with a wide range of saturation, I narrow the range until it just starts cutting into the hue mask. This means changing the script then reloading it, iteratively, until I get the range I want. This is where VirtualDub2 comes in very handy. It has an AviSynth script editor. I change the value in the script then press F5 to reload it into the editing window. So it only takes a few seconds per iteration.

Hue and saturation are both different between AviSynth and Python. You can either make similar tools in Python or use the AviSynth tools and do the math to convert the values. -

In OpenCV, hue is Avisynth's Hue/2.

That is because OpenCV works with arrays, and all elements should be the same type. In our case that is 8-bit integers (numpy.uint8), which only have a range of 0 to 255. But Hue values can go from 0 to 360, which would not fit. So they decided to just use hue values from 0 to 180.

So if you want to use jagabo's Hue values in Python, you'd need to divide his values by 2.

Anyway, I think you should reveal your complete workflow, what you actually do. Getting clips of goals from soccer games (possibly from the whole Universe), then archiving/posting them, but with the replay edited out? The reason being less space? Using ffmpeg commands? Because in the end it might all be just one short Python script. Defining a transition could be done in Python as well, it would just take a bit longer. Every new transition would be defined by rectangles, color ranges, a mask_threshold, and a fine-tuned number of frames to the left and right (to cut the transition-in and transition-out parts). That would be a sort of library, a list, where you can add transitions, change their importance (put them at the top of the list), etc. A different strategy would be set up, meaning the first rectangle would be really small, with wider ranges to be sure. That might speed up finding the transition type. The following rectangles could be a bit bigger. There could also be more of them, possibly spread out to exclude false positives. More rectangles would not mean longer search times at all. If a transition is found, the same type would be searched for at the end of the replay.


Except If you deliberately throw a video clip in correct directory with known transition. Not sure what you do.

Then Getting times for those frames and using ffmpeg to cut it.

But I cannot imagine doing it losslessly because I frames do not land strictly where transitions are. Half of the clips would be re-encoded anyway.

But if you re-encode, it can all be done using those frames and vapoursynth (python).

Sometimes, as it happens a lot here, when the problem and workflow is revealed, completely new and better strategies can be developed.

Last edited by _Al_; 5th Oct 2021 at 14:47.
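The "library of transitions" idea from this post could look roughly like the sketch below. All class and function names are hypothetical, and the actual pixel work is injected as a callable so the structure can be shown without OpenCV. In real use that callable would do the `cv2.cvtColor`, `cv2.inRange` and `np.average` steps on the region's rectangle. The numbers are the ones from the transit_D1 script earlier in the thread.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

@dataclass(frozen=True)
class Region:
    rect: Tuple[int, int, int, int]   # (y0, y1, x0, x1), as pasted from the GUI
    lower: Tuple[int, int, int]       # lower HSV bound
    upper: Tuple[int, int, int]       # upper HSV bound

@dataclass(frozen=True)
class Transition:
    name: str
    regions: Tuple[Region, ...]       # checked in order, cheapest first
    mask_threshold: float
    skip_frames: int

def frame_matches(transition: Transition,
                  mask_average: Callable[[Region], float]) -> bool:
    """True if every region's mask average exceeds the threshold.

    all() stops at the first failing region, like the nested ifs in the scripts.
    """
    return all(mask_average(region) > transition.mask_threshold
               for region in transition.regions)

D1 = Transition(
    name="transit_D1",
    regions=(
        Region((98, 116, 328, 350), (170, 230, 0), (180, 255, 255)),
        Region((140, 166, 374, 402), (20, 210, 0), (40, 255, 255)),
    ),
    mask_threshold=190,
    skip_frames=20,
)

# Stand-in mask averages for one frame (invented numbers, no video needed).
fake_averages = {D1.regions[0]: 230.0, D1.regions[1]: 120.0}
print(frame_matches(D1, fake_averages.get))
```

A list of such `Transition` objects can then be reordered or extended without touching the detection loop, which is the point of keeping the definitions as data.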

-

Hello:

As I have almost all data in C#, so I am now converting most of the Python code into C#. Until now, I have done converting more than half of them, still 5 to go.

However, I found the Transit_C2.mp4/Transit_C1.mp4 are difficult to use Python code. I have no idea how to convert AviSynth script to C#.

Can you find some solution for Transit_C2.mp4 using Python?

Thanks, -

It's more than that:

[Attachment 61149 - Click to enlarge]

[Attachment 61150 - Click to enlarge]

Python has red at hue=0 whereas AviSynth has red at hue=102. I believe the full conversion is:

Code:
    PythonHue = (AvisynthHue - 102) / 2

I don't know if Python accepts negative values for hue. If not, add 180 to convert it to the positive equivalent.
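A sketch of that conversion, taking the formula above at face value (it is stated as a belief in the post and is not verified here). The function name is an assumption, and the modulo takes care of the "add 180 if negative" step.

```python
def avisynth_hue_to_opencv(avisynth_hue):
    """Map an AviSynth hue (0..360, red at 102) to OpenCV's hue scale (red at 0)."""
    return ((avisynth_hue - 102) / 2) % 180

for hue in (102, 0, 282):
    print(hue, "->", avisynth_hue_to_opencv(hue))
```

Here 102 maps to 0, 282 maps to 90, and 0 maps to 129 instead of -51.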

-

If you define a transition in a clip with different resolution, it would not work. Color ranges would be ok but rectangles would need to be transformed

Transit_C1.mp4,Transit_C2.mp4:

Code:
    import numpy as np
    import cv2

    #green text is values pasted from GUI

    first_found_frame = -21

    def process_img(img_rgb, frame_number):
        r1 = cv2.cvtColor(img_rgb[174:220, 214:234], cv2.COLOR_BGR2HSV)
        lower = np.array([20,100,0])    # Hue averages: 25.0, 153.0, 167.4
        upper = np.array([30,200,255])
        mask1 = cv2.inRange(r1, lower, upper)
        if np.average(mask1) > 190:
            r2 = cv2.cvtColor(img_rgb[188:212, 302:322], cv2.COLOR_BGR2HSV)
            lower = np.array([0,0,0])   # Hue averages: 0.0, 0.0, 242.0
            upper = np.array([10,20,255])
            mask2 = cv2.inRange(r2, lower, upper)
            if np.average(mask2) > 190:
                global first_found_frame
                if abs(frame_number-first_found_frame) >20:
                    first_found_frame = frame_number
                    print(frame_number)

    vidcap = cv2.VideoCapture('transit_C1.mp4')
    frame_number = 0
    while True:
        success,image = vidcap.read()
        if not success:
            break
        process_img(image, frame_number)
        frame_number += 1
    vidcap.release()

Transit_C1.mp4 (only one transition , because there is only one):

Code:
    635

Transit_C2.mp4:

Code:
    1294
    1811

Last edited by _Al_; 5th Oct 2021 at 16:51.
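On the remark that rectangles need to be transformed for a clip with a different resolution: a minimal sketch of that scaling, assuming the transition graphic is simply resized along with the frame. The function name and both resolutions in the example are hypothetical, not taken from the clips in this thread.

```python
def scale_rect(rect, src_size, dst_size):
    """Scale a (y0, y1, x0, x1) rectangle from src (width, height) to dst (width, height)."""
    y0, y1, x0, x1 = rect
    src_w, src_h = src_size
    dst_w, dst_h = dst_size
    scale_x = dst_w / src_w
    scale_y = dst_h / src_h
    return (round(y0 * scale_y), round(y1 * scale_y),
            round(x0 * scale_x), round(x1 * scale_x))

# Example: a rectangle defined on a 640x360 clip, reused on a 1280x720 clip.
print(scale_rect((174, 220, 214, 234), (640, 360), (1280, 720)))
```

The color ranges stay as they are; only the slice passed to `img_rgb[...]` changes.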

-

Also, looking how Hue works, I'd avoid choosing any shade of gray or white or black. I chose it , it worked, but that video could get tinted into some color and that might be a trouble. Hue for any shade of gray from black to white is:

0, 0, 0-255

(0,0,0) is black, (0,0,255) is white, so no hue, no saturation, just value

So you might try it yourself and just choose that one color in couple or more rectangles.

Last edited by _Al_; 5th Oct 2021 at 16:59.

-

Quote:
PythonHue = (AvisynthHue - 102) / 2

I don't know if Python accepts negative values for hue. If not, add 180 to convert it to the positive equivalent.

thanks -

Hello:

Thank you very much for your code, I tested on Transit_C2.mp4, it works.

However, when I tested on another one: Transit_C3.mp4, it didn't work.

So, I thought of another way: in your code, you use Comparator to crop one rectangle in the golden circle, and another rectangle in the white color.

I think it could be better to crop 2 rectangles in the golden circle: one is the one you used in your code, the other is on the other side of the circle.

Its location is: img_rgb[182:232, 488:506]

Here is my code:

Code:
    import numpy as np
    import cv2

    first_found_frame = -11

    def process_img(img_rgb, frame_number):
        r1 = cv2.cvtColor(img_rgb[174:220, 214:234], cv2.COLOR_BGR2HSV)
        lower = np.array([20, 100, 0])
        upper = np.array([30, 200, 255])
        mask1 = cv2.inRange(r1, lower, upper)
        if np.average(mask1) > 250:
            r2 = cv2.cvtColor(img_rgb[182:232, 488:506], cv2.COLOR_BGR2HSV)
            lower = np.array([20, 100, 0])
            upper = np.array([30, 200, 255])
            mask2 = cv2.inRange(r2, lower, upper)
            if np.average(mask2) > 250:
                global first_found_frame
                if abs(frame_number-first_found_frame) >10:
                    first_found_frame = frame_number
                    print(frame_number)

    vidcap = cv2.VideoCapture(r'transit_C3.mp4')
    frame_number = 0
    while True:
        success,image = vidcap.read()
        if not success:
            break
        process_img(image, frame_number)
        frame_number += 1
    vidcap.release()

To run the script:

C:\SoccerVideos\Comparator>python DetectC2CropFrame.py

1294

1811

This script works for both Transit_C2.mp4 and Transit_C3.mp4.

Let me know what you think?

Thanks, -

yes, avoid any shades of gray, including white and black; I had selected that for one rectangle, and that's not good

good to see that you made it work

also I'd leave default 20 frames , to skip 20 frames if frame found, some transitions could be long:

first_found_frame = -21

and

if abs(frame_number-first_found_frame) >20: -

If you want to use shades of grey you should look at U and V values (~128) and/or intensity, not hue or saturation.

-

thanks

so, following this advice, if you want to select a gray-shade rectangle (white and black too, which are just max and min luminosity for gray),

you select that second rectangle like this:

Code:
    #green values are taken from GUI
    if np.average(mask1) > 190:
        r2 = cv2.cvtColor(img_rgb[178:232, 298:324], cv2.COLOR_BGR2YUV) #selection of whitish area
        lower = np.array([200, 115, 115])   #241.6, 128.0, 130.7 values for YUV average, not HSV average
        upper = np.array([255, 140, 140])

this would work for all C1, C2 and C3 clips -

another thing to watch for is selection of red in opencv, because that color wraps around 180 or zero, so if selecting red close to Hue = 0 (or close to 180 from the other side of circle), you are better off inverting image and hunting for cyan range (opposite color in Hue circle).

r2 = cv2.bitwise_not(r2) #inverting img

Hue value +90 (if red is close above zero) or -90 if below 180.

Or better off, using YUV again, for ranges

Last edited by _Al_; 6th Oct 2021 at 09:42.
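As an alternative to the inversion trick (this is a different technique, not what the post above does), a red range that crosses the 179/0 boundary can be split into two ordinary ranges. The helper below is a hypothetical sketch that assumes OpenCV's 8-bit hue scale of 0 to 179.

```python
def hue_ranges(center, margin, hue_max=179):
    """Return one or two (low, high) hue ranges around center, splitting at the wrap."""
    low = center - margin
    high = center + margin
    if low < 0:
        # Range spills below 0: part of it sits at the top of the scale.
        return [(low + hue_max + 1, hue_max), (0, high)]
    if high > hue_max:
        # Range spills above hue_max: part of it sits at the bottom of the scale.
        return [(low, hue_max), (0, high - hue_max - 1)]
    return [(low, high)]

print(hue_ranges(2, 8))     # red just above 0
print(hue_ranges(175, 8))   # red just below 180
print(hue_ranges(90, 10))   # no wrap needed
```

Each returned range would get its own `cv2.inRange` call, and the two masks can be combined with `cv2.bitwise_or` before averaging.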

-

Hello:

I don't know what is shades of gray. I found this explanation from wiki:

https://en.wikipedia.org/wiki/Shades_of_gray

Do you mean this shades of gray?

By the way, if I choose the 2 rectangles from the golden circle, then those 2 rectangles are NOT shades of gray (Transit_C2.mp4/Transit_C3.mp4), right?

Thanks, -

So it perhaps handles that wrap situation by actually creating two ranges, 355-360 and 0-5. Avisynth does this a lot: there are specific functions that cover different tweaks or take care of specifics within a function. Very pleasant for someone who does video only and is not a coder or hates coding. OpenCV or Vapoursynth operate more on basic principles. For example, Vapoursynth's ShufflePlanes() is a general function, as opposed to Avisynth, which has more functions that move planes specifically, like chroma to one plane, xtoY etc. Simple.

I say this now specifically because it is a nice example: there is perhaps a module or written function in Python where someone already covered this, or at least there is code that can be googled in a couple of seconds (exactly what I did to find out right away about that approach of inverting the red hue and looking for a cyan range). So when Vapoursynth and OpenCV are used with Python, there is a gazillion pieces of advice out there, or modules that cover almost every case of coding around a problem in Python. If this were done in Avisynth, functions would need to be created or conditions included, and then the code becomes harder to read (doom9 is full of Avisynth code like that). But with Python, those basic modules can stay really thin (Vapoursynth), and other modules can be imported to handle a situation, or Python code is included that is much more readable than Avisynth's. -

You did fine; jagabo just mentioned that it could work even with those shades of gray if you use YUV instead of HSV.

To explain shades of gray in YUV: the luminance (Y) can be anything from 0 (black) to 255 (bright white), but U and V are always 128. So YUV white is 255,128,128 and YUV black is 0,128,128. If you had a shade halfway between white and black, you'd have the YUV value 128,128,128. So colors do not wrap around 0 or 255.

Values of 128 for U and V mean no color.

In HSV white is 0,0,255 and black is 0,0,0. But as soon as you introduce color, like a very light green, Hue jumps to 40, for example. It is a jump in value, so you cannot hunt for a range around a shade of gray, only around a color. As opposed to YUV, where those U and V values would move just slightly, so you'd catch them in a range.
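The YUV rule of thumb above can be written as a tiny check: a color counts as a shade of gray when U and V both stay near 128, whatever Y is. The function name and the default tolerance are assumptions; a tolerance of 13 roughly mirrors the 115 to 140 range used for U and V earlier in the thread.

```python
def is_grayish(yuv, tolerance=13):
    """True if U and V are both within tolerance of 128 (Y is ignored)."""
    _y, u, v = yuv
    return abs(u - 128) <= tolerance and abs(v - 128) <= tolerance

print(is_grayish((241.6, 128.0, 130.7)))  # YUV averages quoted for the whitish area
print(is_grayish((150.0, 90.0, 170.0)))   # invented, clearly colored sample
```

Unlike hue, U and V drift only slightly when a gray area picks up a faint tint, so a modest tolerance is usually enough.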
