All those formulas are based on the assumption that you are using normalized values, so YPbPr (Y within 0..1, Pb and Pr within ±0.5) or RGB (within 0..1). Limited quantization squishes this range and shifts it up by some offset. To restore normalized values you need to perform the reverse operation: remove the offset (the pedestal), then divide the value by the scaling coefficient. For the Y signal or limited-range RGB, 0 maps to 16 and 1 maps to 235, so the scaling coefficient is 235-16=219. Those things are important.
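As a rough illustration of the "remove pedestal, then divide" step, here is a minimal C sketch for 8-bit limited-range codes. The function names are made up for this example, and the chroma span of 224 (240-16, centred on 128) is the usual companion to the 219 luma span mentioned above rather than something stated in the post.

```c
#include <stdint.h>

/* Assumption: 8-bit limited-range codes, Y in 16..235 and Cb/Cr in 16..240
   centred on 128. Function names are illustrative only. */

/* Remove the pedestal (16), then divide by the 219-step luma span. */
double normalize_luma(uint8_t code)
{
    return ((double)code - 16.0) / 219.0;
}

/* Remove the 128 centre offset, then divide by the 224-step chroma span. */
double normalize_chroma(uint8_t code)
{
    return ((double)code - 128.0) / 224.0;
}
```

With these, code 16 becomes 0.0 and code 235 becomes 1.0 for luma, while chroma codes 16 and 240 become -0.5 and +0.5.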

Whenever I'm not sure how ffmpeg will behave, I always force ffmpeg to operate in full quantization range. You need to separate the actual quantization range from the signalled quantization range.

This should prevent ffmpeg from converting between limited and full quantization range. All data will be treated as full quantization range, so you are responsible for proper signalling; just use codec syntax to control this aspect.

Code:

    ffmpeg -color_range 2 -i file -color_range 2

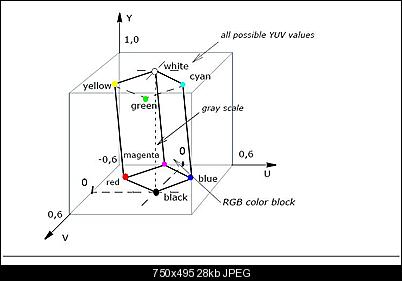

Clamping is mandatory, as RGB and YCbCr are only partially compliant colour spaces (YCbCr is an artificial colour space).


-

-

Yep, that did it, thank you.

I confined r, g and b to 1 - 254 because 0 and 255 are reserved for sync.

Code:

    ; Limited-range YCbCr (yf, uf, vf) to full-range RGB
    rf = (255/219)*yf + (255/112)*vf*(1-#Kr) - (255*16/219 + 255*128/112*(1-#Kr))
    gf = (255/219)*yf - (255/112)*uf*(1-#Kb)*#Kb/#Kg - (255/112)*vf*(1-#Kr)*#Kr/#Kg - (255*16/219 - 255/112*128*(1-#Kb)*#Kb/#Kg - 255/112*128*(1-#Kr)*#Kr/#Kg)
    bf = (255/219)*yf + (255/112)*uf*(1-#Kb) - (255*16/219 + 255*128/112*(1-#Kb))

    ; Clamp to 1..254
    If rf > 254 : rf = 254 : EndIf
    If gf > 254 : gf = 254 : EndIf
    If bf > 254 : bf = 254 : EndIf
    If rf < 1 : rf = 1 : EndIf
    If gf < 1 : gf = 1 : EndIf
    If bf < 1 : bf = 1 : EndIf
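For anyone following along in C, the same conversion can be sketched by subtracting the offsets first, which is algebraically the same as the expanded form above. This is only an illustration: the post does not show the values of #Kr and #Kb, so BT.709 coefficients are assumed here, and the type and function names are invented for the example.

```c
/* Assumption: BT.709 luma coefficients. The original post does not say
   which #Kr / #Kb values were used. */
#define KR 0.2126
#define KB 0.0722
#define KG (1.0 - KR - KB)

/* Illustrative type, not from the original program. */
typedef struct { double r, g, b; } RGB;

static double clamp(double v, double lo, double hi)
{
    return v < lo ? lo : (v > hi ? hi : v);
}

/* Limited-range 8-bit YCbCr (as floating point) to full-range RGB,
   confined to 1..254 as in the post above. */
RGB ycbcr_limited_to_rgb(double y, double cb, double cr)
{
    double yn  = (y  - 16.0)  * (255.0 / 219.0);  /* remove pedestal, rescale */
    double cbn = (cb - 128.0) * (255.0 / 112.0);  /* remove centre offset */
    double crn = (cr - 128.0) * (255.0 / 112.0);
    RGB out;

    out.r = clamp(yn + crn * (1.0 - KR), 1.0, 254.0);
    out.g = clamp(yn - cbn * (1.0 - KB) * KB / KG
                     - crn * (1.0 - KR) * KR / KG, 1.0, 254.0);
    out.b = clamp(yn + cbn * (1.0 - KB), 1.0, 254.0);
    return out;
}
```

Calling `ycbcr_limited_to_rgb(16, 128, 128)` gives black clamped up to 1, and `ycbcr_limited_to_rgb(235, 128, 128)` gives white clamped down to 254.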

-

That applies only to YCbCr, and only to broadcast equipment that uses SDI or a similar link. As I wrote earlier, because of the SAV and EAV codes, values are clamped to 1 and 254 internally by design anyway. In any case, when chroma is within limited quantization range, anything lower than 16 or higher than 240 is unnatural, probably artificially generated, and can't be properly represented in the RGB colour space (pure red and pure blue sit at 240, pure yellow and pure cyan at 16). IMHO such values can be safely clamped.

-

Since you're working with floating point, another way to approach it is to convert your limited range YUV to full range YUV (simple offset and scaling), then use the full range equation.

Real production software (and hardware) doesn't use floating point, of course; it's too slow. It will be done with scaled integers. -
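To make "offset and scaling with scaled integers" concrete, here is a minimal C sketch for the luma channel only. The 14-bit shift and the names are arbitrary choices for this example, not taken from any particular product.

```c
#include <stdint.h>

/* Assumption: a 14-bit fixed-point gain, chosen only for illustration. */
#define SHIFT     14
#define LUMA_GAIN 19077   /* round(255/219 * 2^14) */

/* Limited-range luma (16..235) to full-range luma (0..255), integers only. */
uint8_t luma_limited_to_full(uint8_t y)
{
    int32_t v;

    if (y <= 16)
        return 0;                       /* at or below the pedestal */

    /* Multiply by the scaled gain, add half an LSB for rounding, shift back. */
    v = ((int32_t)(y - 16) * LUMA_GAIN + (1 << (SHIFT - 1))) >> SHIFT;
    return (uint8_t)(v > 255 ? 255 : v);
}
```

Chroma would follow the same pattern with a gain of 255/224 around the 128 centre.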

Thanks for the help, guys, but my program isn't working well.

I have two instances of ffmpeg running, one for input and one for output. The basic idea is to read input file -> modify -> write output file. For some reason only alternate frames are being correctly modified. This results in a horrible flicker.

https://batchloaf.wordpress.com/2017/02/12/a-simple-way-to-read-and-write-audio-and-vi...-part-2-video/

The idea was to be able to make adjustments interactively and avoid some of the quirks of ffmpeg. That part works. It's kind of useless if the user can't export the video.

I know about VapourSynth, but it uses ffmpeg as I understand it.

Last edited by chris319; 30th Nov 2018 at 01:57.

-

Higher-end visual effects production (including film, TV and internet such as Netflix/Amazon), compositing and CG typically use only floating point. Some programs (like the industry-standard Nuke) actually cannot use anything else for intermediate calculations or processes. Everything is converted to linear-light 32-bit float, and all operations work in float.

Double check your frame rates: the actual source frame rate, and the -r parameter for the 1st and 2nd ffmpeg instances.

Or did you mean this is occurring "live" as you're making adjustments? If it's not a mismatched framerate issue, it might be that your filters are too slow for realtime processing on your current HW, and you're dropping frames.

Last edited by poisondeathray; 30th Nov 2018 at 02:13.

-

Everything is explicitly set to 59.94.

The user makes his adjustments on a single, frozen frame, then clicks a button which begins the export process and he is no longer able to adjust the video.

Quote: If it's not a mismatched framerate issue, it might be your filters are too slow for realtime processing for your current HW, and you're dropping frames.

That may well be the case. It's not real time but must keep up with ffmpeg.

There are 1280 x 720 pixels to deal with. If I have it do only rows 0 to 360 there is no flicker but only for the upper half of the picture. At any rate, this may be an insoluble problem which puts the kibosh on the project.

The alternative is to run the file through ffmpeg, look at the file on a scope, go back and make adjustments, run ffmpeg again, and back and forth until the levels are right — very time consuming and far from interactive. -

-

So did you mean you applied filter(s) to only the top half, and only the top half "flickered"? Bottom half ok?

If you read => no filters => write, does it still occur? (i.e. just pipe to write)

If you read => modify (but nothing actually modified; apply the filters but set them to "zero" or whatever value makes them a no-op) => write, does it still occur?

A "slow" filter in a linear ffmpeg filter chain acts as a "bottleneck", but the final exported / encoded file should be ok. You just get slower processing. So I'm wondering if something about the live aspect is introducing the problem.

What is the pattern? You said "alternate frame". Is it exactly every 2nd frame, or was that just a rough description? Maybe upload a sample of an exported file to examine. -

Quote: So did you mean you applied filter(s) to only top half, and only top half "flickered"? Bottom half ok?

Yep.

Quote: If you read => no filters => write, does it still occur? (ie just pipe to write)

Well here's the tricky part. If the image is unmodified between frame writes, the video could be flickering between two identical images and you won't see it flickering. You don't see the flicker unless the image is modified between frame writes.

Quote: I'm wondering if something about the live aspect is introducing the problem

Interesting thought, but at this point in the program it is not waiting for a window event, e.g. a mouse click, etc.

Quote: What is the pattern? You said "alternate frame" - Is it exactly every 2nd frame, or was that just a rough description?

The latter, but it is logical as it reads/writes one frame at a time.

I tried compiling and running Ted Burke's C code under Linux and still see some weirdness. Remember what I said about it flickering between identical images and you don't see the flicker. -

Quote: It should be 60000.0/1001.0 i.e. 59.94005994005994005994005994006... those numbers are important...

That won't affect the execution speed of my code. My Sony camcorder has a frame rate of 59.94. I can't control that.

-

What about the specific filters? What is being used? Do you have logic in some of them? E.g. many "auto" leveling and legalization filters can introduce flicker, because values are adjusted per frame instead of across a temporal average or range.

Try a static application of a filter with a constant value. Maybe a LUT that adds 20 to all values or something. Nothing else: no other filters, no clipping or legalization applied. This will help rule out whether it's something with your filters or something about the pipe process. -
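A constant-offset test filter of the kind suggested above might look like this in C. It is only a sketch: the function name is made up, and it assumes an 8-bit packed buffer such as the rgb24 frames discussed in this thread.

```c
#include <stddef.h>
#include <stdint.h>

/* Add the same constant to every byte of a frame, saturating at 0 and 255.
   No per-frame logic, so any flicker in the output cannot come from here. */
void add_constant(uint8_t *frame, size_t nbytes, int offset)
{
    size_t i;

    for (i = 0; i < nbytes; ++i) {
        int v = frame[i] + offset;
        if (v < 0)   v = 0;
        if (v > 255) v = 255;
        frame[i] = (uint8_t)v;
    }
}
```

For a 1280x720 rgb24 frame the call would be something like `add_constant(buf, 1280 * 720 * 3, 20)`, where `buf` is whatever buffer holds the frame.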

-

-

I'm not sure you understand. For example, with asynchronous writes control is returned to the caller (your program) before the write has completed. If you are using a single frame buffer the next read into that buffer may overwrite some of your processed data before the write has completed. With synchronous writes control won't return to your program until the write operation has completed.

I don't know exactly what you are doing (live camcorder to the screen? file to file?, something else?) and I've never used ffmpeg lib, but I suspect it supports both sync and async reads and writes. -

I tried double buffering. I set up a buffer for reading and another one for writing and no joy. I'll go back and work with it some more.

It's all file based. It reads an input file and writes to another output file. You can make video adjustments interactively on a frozen frame, then click a button and ffmpeg begins importing/exporting.

This is at the core of the program:

https://batchloaf.wordpress.com/2017/02/12/a-simple-way-to-read-and-write-audio-and-vi...-part-2-video/ -

I built (Pelles C) the teapot program but had to make a few small modifications. popen() and pclose() had to be changed to _popen and _pclose(). The read mode for the input pipe had to be changed from "r" to "rb", and the write mode for the output pipe had to be changed from "w" to "wb". These allow for binary input and output -- otherwise non-text characters aren't passed properly.

fread() and fwrite() in C are synchronous so those shouldn't be a problem. But just as a test, try adding a fflush(pipeout); right after the fwrite. See if that makes any difference. -
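The fflush test can be wrapped in a small helper so that each frame is fully handed over before the buffer is reused. A minimal sketch, with a hypothetical function name:

```c
#include <stddef.h>
#include <stdio.h>

/* Write one whole frame to the stream, then flush it.
   Returns 1 on success, 0 if the write or the flush failed. */
int write_frame(FILE *out, const unsigned char *frame, size_t nbytes)
{
    size_t done = 0;

    while (done < nbytes) {
        size_t n = fwrite(frame + done, 1, nbytes - done, out);
        if (n == 0)
            return 0;           /* write error, e.g. the pipe was closed */
        done += n;
    }
    return fflush(out) == 0;    /* push buffered bytes down the pipe */
}
```

In the teapot program this would replace the bare fwrite, e.g. `write_frame(pipeout, (unsigned char *)frame, H*W*3)`.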

Could you copy and paste your source code?

I implemented Ted's code using GCC on Ubuntu in a virtual machine.

I'm adroit at doing the GUI for this program in PureBasic but I'm clueless in writing a GUI in C or C++. Maybe Lazarus? I don't know if PureBasic has the equivalents of the changes you made, i.e. if the reading and writing functions are synchronous.

Thank you. -

Here is what PureBasic has:

https://www.purebasic.com/documentation/process/runprogram.html

I am using ReadProgramData() and WriteProgramData(). It looks like they are synchronous?

https://www.purebasic.com/documentation/process/readprogramdata.html

https://www.purebasic.com/documentation/process/writeprogramdata.html -

It's identical to his except for the minor changes I mentioned. No GUI:

Code:

    //
    // Video processing example using FFmpeg
    // Written by Ted Burke - last updated 12-2-2017
    //

    #include <stdio.h>

    // Video resolution
    #define W 1280
    #define H 720

    // Allocate a buffer to store one frame
    unsigned char frame[H][W][3] = {0};

    int main(int argc, char **argv)
    {
        int x, y, count;

        // Open an input pipe from ffmpeg and an output pipe to a second instance of ffmpeg
        FILE *pipein = _popen("ffmpeg -i teapot.mp4 -f image2pipe -vcodec rawvideo -pix_fmt rgb24 -", "rb");
        FILE *pipeout = _popen("ffmpeg -y -f rawvideo -vcodec rawvideo -pix_fmt rgb24 -s 1280x720 -r 25 -i - -f mp4 -q:v 5 -an -vcodec mpeg4 output.mp4", "wb");

        // Process video frames
        while(1)
        {
            // Read a frame from the input pipe into the buffer
            count = fread(frame, 1, H*W*3, pipein);

            // If we didn't get a frame of video, we're probably at the end
            if (count != H*W*3) break;

            // Process this frame
            for (y=0 ; y<H ; ++y) for (x=0 ; x<W ; ++x)
            {
                // Invert each colour component in every pixel
                frame[y][x][0] = 255 - frame[y][x][0]; // red
                frame[y][x][1] = 255 - frame[y][x][1]; // green
                frame[y][x][2] = 255 - frame[y][x][2]; // blue
            }

            // Write this frame to the output pipe
            fwrite(frame, 1, H*W*3, pipeout);
        }

        // Flush and close input and output pipes
        fflush(pipein);
        _pclose(pipein);
        fflush(pipeout);
        _pclose(pipeout);
    }

Since you are creating a video file, try writing the frame number (no other processing) on each frame before writing it. Open the resulting video and verify you're getting each frame once, in order, and no skipped frames.

-

I tried using this in PureBasic in various arrangements:

https://www.purebasic.com/documentation/process/availableprogramoutput.html

One problem is that the program stalls when it reaches the end of the file because the above function returns 0 and my program thinks it needs to wait for some data. I also tried setting up a loop that would process x-number of frames.

I also went back to my double-buffered scheme. No success so far. It's nice and clean if the read buffer is written to disk which is cheating. The write buffer is supposed to be written to disk. Also, if the program is flickering between two identical images, you won't see the flicker. This is all in progressive scan.

Maybe I need to use a callback for this? I'm in over my head here. -
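For comparison with the polling approach, a blocking read in C only reports zero bytes at end of input (or on an error), so the loop can tell "no more frames" apart from "data not here yet". A minimal sketch with a hypothetical helper name; whether the PureBasic calls can be arranged the same way is not something this example settles.

```c
#include <stddef.h>
#include <stdio.h>

/* Read exactly one frame. fread() blocks until data arrives, so a return
   of 0 bytes means end of input or an error, never "try again later".
   Returns 1 if a full frame was read, 0 otherwise. */
int read_frame(FILE *in, unsigned char *frame, size_t nbytes)
{
    size_t done = 0;

    while (done < nbytes) {
        size_t n = fread(frame + done, 1, nbytes - done, in);
        if (n == 0)
            return 0;           /* EOF or error: stop instead of waiting */
        done += n;
    }
    return 1;
}
```

The main loop then becomes "while read_frame succeeds: process, write", and it ends by itself when the input ffmpeg closes its end of the pipe.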

-

If you have to kill the program it's not surprising the output file is corrupt -- depending on the container. As a test try outputting a transport stream. Those are designed to withstand abuse like that.

Why can't you use a structure like the one I outlined above? It should avoid the problem you're having with the program locking up. -
