I have a video that has an unusual repeated frame pattern (source video):

https://drive.google.com/file/d/1NsH33VTZAv2n-XO-jG2UUAswdpwg2t-w/view?usp=sharing

In the source video, you can see that the 5th & 6th frames are duplicates.

What is unusual is that the 6th frame seems to change alignment.

Almost like it's some sort of zoom in or something.

My habit with large-sized captured source videos is to convert using "Hybrid".

I convert the video to x265 and set the "TDecimate" option to remove repeated frames.

You'd think I could use the default "TDecimate(Cycle=5, CycleR=1)" option.

But this eliminates the 5th frame, leaving the problematic 6th frame in the video.

And this produces jerkiness - because the remaining 6th frame has that weird frame alignment problem.

Here is what it looks like in a sample (converted in Hybrid):

https://drive.google.com/file/d/1npotVyZvsfChfOVBIgrCmVoQv37xWYY6/view?usp=sharing

So I tried using a "TDecimate(Cycle=6, CycleR=1)" option.

This would eliminate the problematic 6th frame, leaving the 5th frame.

This produces a smoother video that I like, visually speaking.

But the problem is that it always throws the audio out of sync.

Here is a sample (converted in Hybrid):

https://drive.google.com/file/d/1j9pgqP9fwRfy-iBPGJmDjjJ4wMW8-sWV/view?usp=sharing

I've tried everything, and can't overcome this audio sync issue.

I've tested with various conversion options & file formats.

For some odd reason, using a "TDecimate(Cycle=6, CycleR=1)" option ALWAYS throws the audio out of sync.

So I'm stuck in a situation where I use "TDecimate(Cycle=5, CycleR=1)" option & get jerky visuals...but proper audio.

Or I use the "TDecimate(Cycle=6, CycleR=1)" option & get smooth video...but messed up audio.

Can anyone give me any advice on how to process this video to get a good result?

-

Cycle=6, CycleR=1 means remove one of every six frames, not necessarily the sixth. It removes the frame that appears to be most likely a duplicate. A frame that's misaligned may not look like a duplicate so TDecimate() may remove another frame. That doesn't change the running time of the video though. Is the frame rate being changed/assumed elsewhere in the script?

If you literally need to remove the last of every group of six frames, use SelectEvery(6, 0,1,2,3,4). But that will not adapt to changes in the pattern.

Last edited by jagabo; 27th Mar 2018 at 18:49.
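To make the fixed-pattern alternative concrete, here is a minimal Python sketch (not AviSynth) of which frame indices a SelectEvery(6, 0,1,2,3,4) style selection keeps. The helper name `select_every` is hypothetical and only mimics the index arithmetic; it says nothing about how TDecimate picks the frame it removes.

```python
def select_every(num_frames, step, offsets):
    """Return the source frame indices kept by a fixed SelectEvery-style pattern."""
    kept = []
    for base in range(0, num_frames, step):
        for off in offsets:
            idx = base + off
            if idx < num_frames:
                kept.append(idx)
    return kept

# Keep frames 0-4 of every group of six, i.e. always drop the sixth frame.
kept = select_every(12, 6, [0, 1, 2, 3, 4])
print(kept)  # [0, 1, 2, 3, 4, 6, 7, 8, 9, 10]
```

Because the dropped position never moves, a pattern that shifts partway through the video would leave this selection removing the wrong frame from then on.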

-

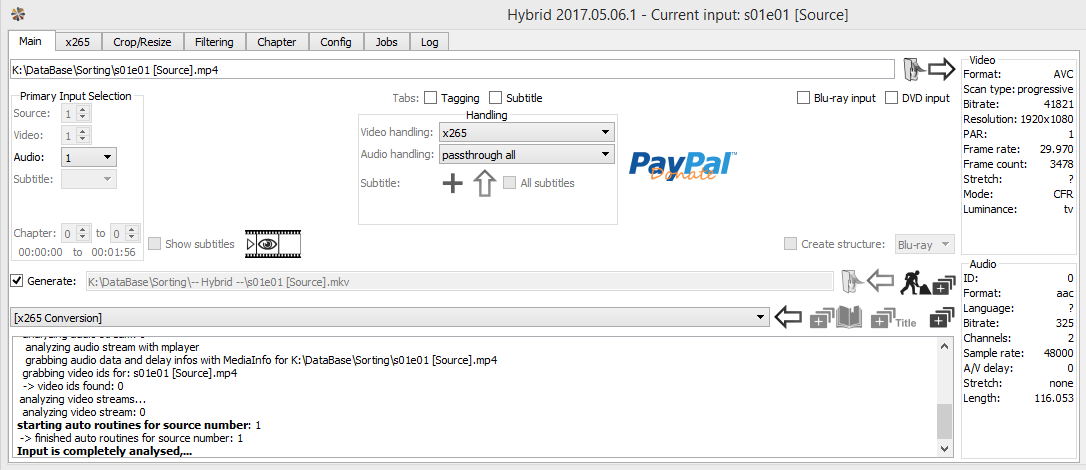

Sorry, I didn't mention this earlier. I use the Hybrid GUI to apply "TDecimate" to the video.

This lets me convert to x265 & decimate in one go.

I'm not really familiar with any other method to accomplish these simultaneous tasks.

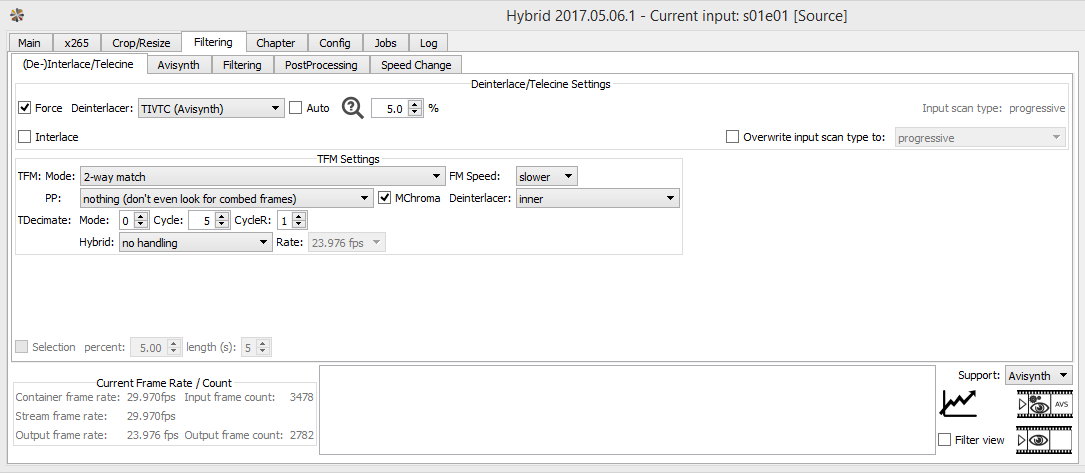

For "TDecimate(Cycle=5, CycleR=1)", I set it like this in Hybrid:

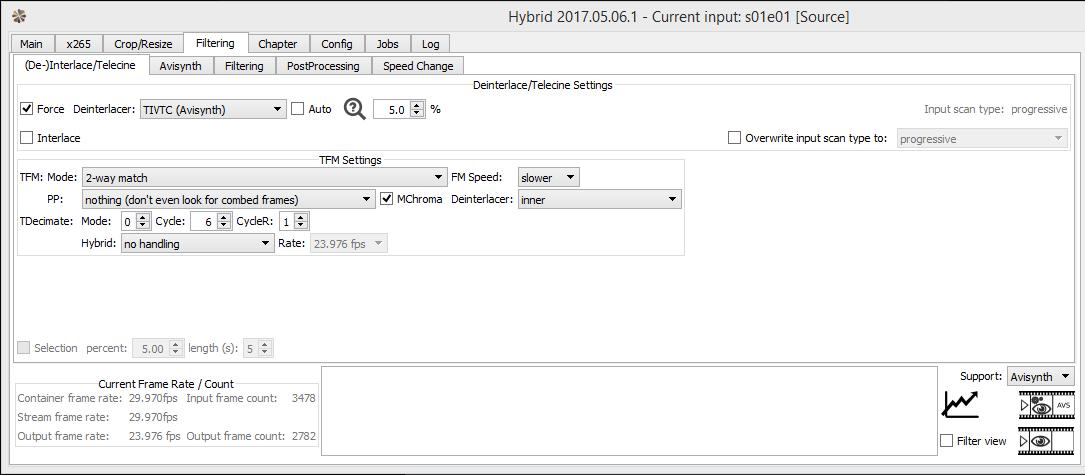

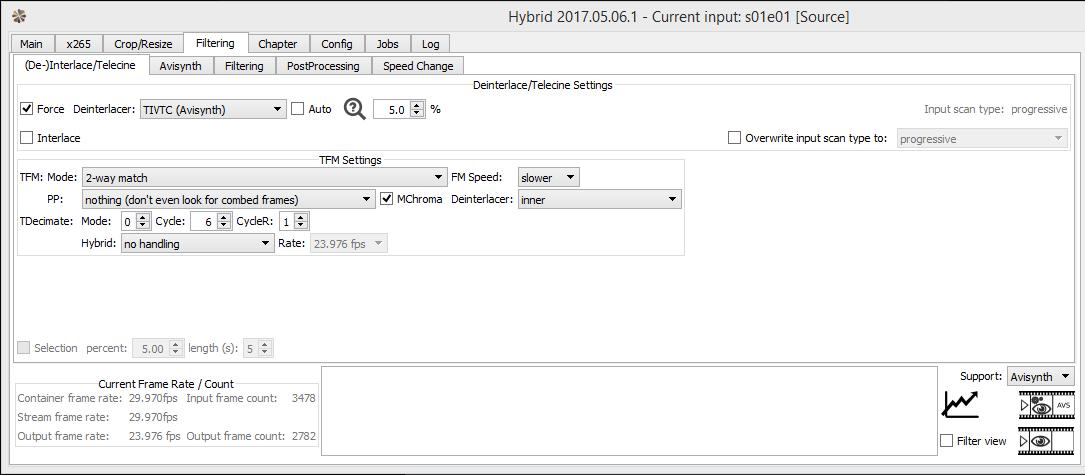

For "TDecimate(Cycle=6, CycleR=1)", I set it like this in Hybrid:

And the video conversion settings in both instances are:

The downloadable samples in the original post are the products of these settings.

I was hoping someone could download the source video & see if it's possible to decimate it properly.

-

I was finally able to download the source video. TDecimate(Cycle=6, CycleR=1) returns the expected 24.975 fps (5/6 of 29.97).

Just saw your most recent post. The problem is that you have the output frame rate set to 23.976, not 24.975.

-

Sorry we seem to be overlapping each other in responses.

Okay, but I didn't "set" the "output frame rate".

The options I can set are either drop boxes or fields.

None of these ask for frame rate.

Hybrid seems to display that frame rate info, as a prediction of what my settings result in.

Do you have any suggestions what I can set in Hybrid? Or any other program?

-

-

First I used this script:

Code:
    LSmashVideoSource("s01e01 [Source].mp4")
    ShowFrameNumber(x=20, y=20)
    Spline36Resize(width/2, height/2)
    TDecimate(Cycle=6, CycleR=1)

That gave a 24.975 fps result but there were a few missing frames (seen as jerks, especially during panning shots). So I tried this instead:

Code:
    LSmashVideoSource("s01e01 [Source].mp4")
    ShowFrameNumber(x=20, y=20)
    Spline36Resize(width/2, height/2)
    TDecimate(mode=2, rate=25.0)

That got rid of the jerks. I didn't check the entire video though.

I usually encode with x264 CLI with a command line in a batch file that looks like:

Code:
    x264.exe --preset=slow --crf=18 --keyint=50 --sar=1:1 --colormatrix=bt709 --output "%~1.mkv" "%~1"

You can drag/drop an AVS script onto that batch file to encode the video. I then mux the original audio with the new video with MkvToolNix.

-

I don't use any script.

That's the reason I use Hybrid, because you can use a GUI to set all the necessary options.

I use it regularly to "decimate" other videos, without trouble.

But this video is the first that's creating problems.

So just to be clear on what I do...

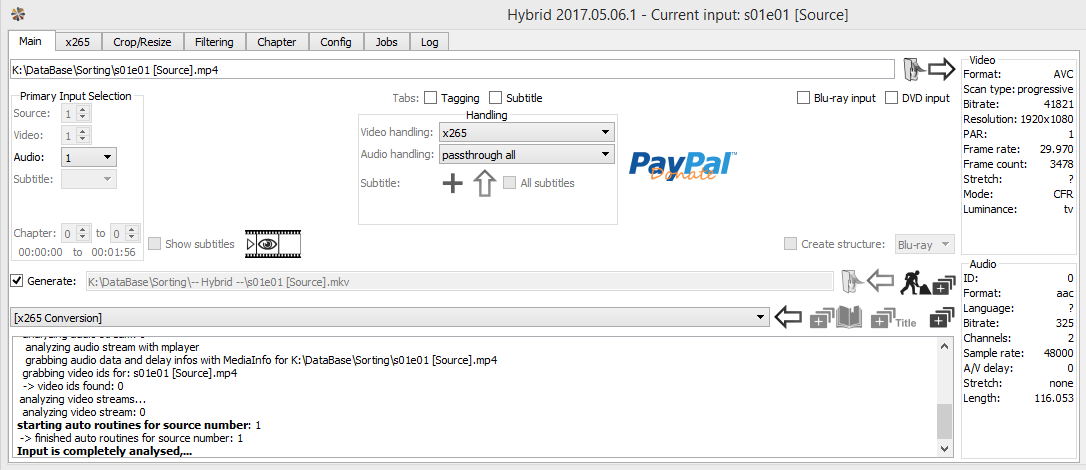

1) I load the video into Hybrid (see top of picture). I set video output format to "x265". Audio is set to "passthrough" (so it's not converted during video conversion). And destination file is set to "MKV".

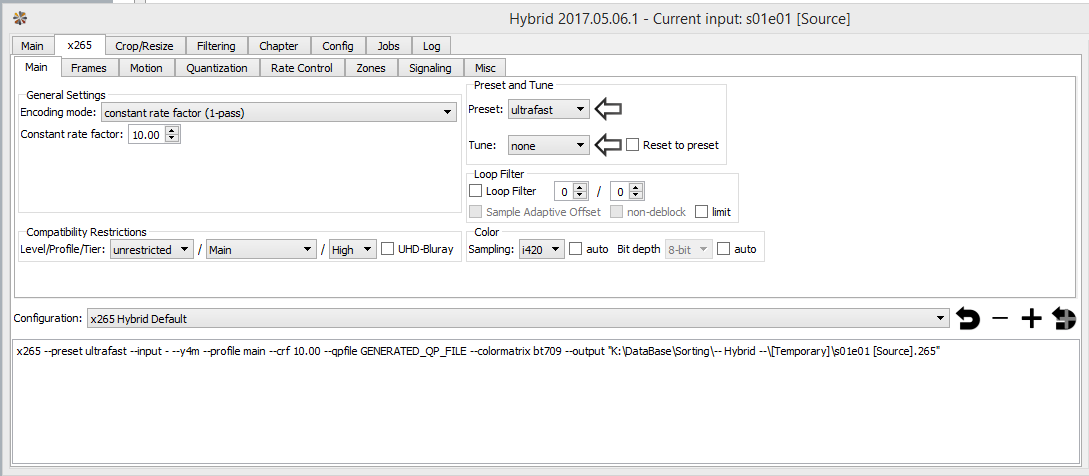

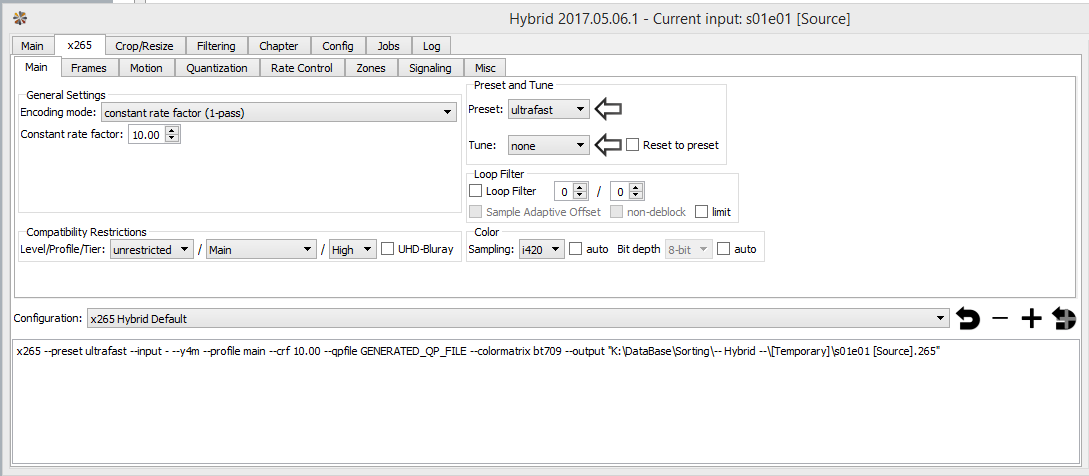

2) Then I set my video encoding options. Basic stuff.

3) And finally, I activate the "TIVTC (AVISYNTH)" option. This lets me set the "Cycle" & "CycleR" values. So that's what I mean by "I don't use any script". This option is supposed to handle the work the script does.

As I said, this method has worked for me without trouble on other "standard" videos. But I'm running into the audio problem described earlier when setting "Cycle" to "6" & "CycleR" to "1".

EDIT: I did try loading this script into Hybrid:

Code:
    a=LSmashAudioSource("K:\s01e01.mp4")
    v=LSmashVideoSource("K:\s01e01.mp4")
    AudioDub(v, a)
    TFM(slow=2)
    TDecimate(Cycle=6, CycleR=1)

The result was the same. Audio problem.

-

Yes, but Hybrid does, at least for Windows machines it does. Anyway, I don't use it. So Selur might have to have a look. If he doesn't show up here, you can find him at Doom9 for sure:

http://forum.doom9.org/forumdisplay.php?f=78

Once again, that IVTC doesn't change the video length and isn't responsible for the audio desync. Maybe Hybrid added more stuff to the script before encoding. What happens if you were to encode that script in ... say ... RipBot264? You might have to demux the audio and have RipBot264 encode it separately and then mux it in afterwards. I'm not really sure, as I always have it handle my audio separately.

-

I did a variation on your second script:

Code:
    a=LSmashAudioSource("K:\s01e01.mp4")
    v=LSmashVideoSource("K:\s01e01.mp4")
    AudioDub(v, a)
    TFM(slow=2)
    TDecimate(mode=2, rate=25.0)

I inputted this AVISYNTH script into Hybrid, and got a good looking sample as a result (no audio or jerky motion problems):

https://drive.google.com/file/d/1tbOZ4tC8yB-U4lq8wesJLBTJhvMZ97rq/view?usp=sharing

In all the sample videos, there's a panning shot that starts at 1:15.

It's basically a camera shot that goes across a room/library.

- In the new "Mode=2, Rate=25.0" sample, the panning looks smooth (and the audio's fine).

- In the old "Cycle=6, CycleR=1" sample, the panning also looks smooth (but the audio's messed up).

- In the old "Cycle=5, CycleR=1" sample, the panning clearly jerks (though the audio's fine).

So it's kind of weird, and a little confusing.

You mentioned before that the audio problem I described was the result of incorrect FPS.

But then...

1) Why do both the old "Cycle=6, CycleR=1" sample (with 23.976 FPS) & our new "Mode=2, Rate=25.0" sample (with 25.000 FPS)...both have smooth motion?

I'm probably wrong...but it makes me feel like what's actually happening is that there's a bug in Hybrid.

2) Why do both the old "Cycle=5, CycleR=1" sample (with 23.976 FPS) & our new "Mode=2, Rate=25.0" sample (with 25.000 FPS)...both have proper audio?

Because if it was just a matter of FPS....both "Cycle" samples should have messed up audio.

But we see "Cycle=5" has proper audio, while "Cycle=6" doesn't.

Like something is inherently messed up when Hybrid processes that "Cycle=6" command.

-------------

Anyways, I also want to acknowledge I'm a total dunce at this stuff.

I barely got the hang of this "Cycle/CycleR" stuff.

And now I have no clue how Jagabo came to his "Rate=25.0" idea.

Like what math or pattern did he see - to go with that solution?

P.S. >>> MAJOR THANKS FOR HOLDING MY NEANDERTHAL HANDS GUYS!

-

I posted at the same time as you, and also posited that something might be buggy in Hybrid.

Or I'm a total dunce, and have messed up something obvious lol!

Thanks for the program suggestion of "RipBot264".

I'm going to download, install, and play around with it tomorrow.

Though I should mention, I've never actually muxed anything before. But guess I gotta learn!

Your remark about audio got me thinking...

I think one of the reasons I ended up attached to Hybrid as a newb was that...in other programs, when I loaded a basic AviSynth script...the audio would never load. I'd end up with converted video...without any audio.

To be sure, Hybrid also doesn't detect the audio when I load an AVISYNTH script. But it's easy to add it to the job.

I have to go to the Audio section, load the source video, and then Hybrid adds the passthrough-audio to the queue.

Anyways, my brain's fried for the night.

Thanks again for the program suggestion!

-

Cycle=6, CycleR=1 is almost smooth because it delivers 24.975 fps. The difference between that and 25 fps is one frame out of every 1001. That is, there will be 1 missing frame out of every 1001. When I ran it on your sample there was an obvious missing frame between frames 59 and 60 (originally frames 70 and 73). So I decided to try mode=2, rate=25.0. That got rid of that jerk and I didn't see any other problems on a quick play through the rest of the video. Of course, if there was a missing or duplicate frame in the middle of a still shot I wouldn't have seen it.

What you have is a 25p video that was converted to 29.97i with hard pulldown, then bob deinterlaced to 59.94 fps, and every other frame discarded to give 29.97p. In short 25p was converted to 29.97p by duplicating every 5th frame, except once every 1000 frames the dup is skipped (to make up for the difference between 30 and 29.97).
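A rough way to see where the "once every 1000 frames" figure comes from is to model the conversion in Python. This is a simplified sketch, not the actual pulldown/bob path: it assumes each 29.97 fps output frame simply shows the most recent 25 fps source frame, so the position of the repeated frame within each group will not match the real file. The function names are hypothetical.

```python
def source_index(n):
    """Source (25 fps) frame shown at output frame n of a 30000/1001 fps stream."""
    # n / (30000/1001) seconds * 25 frames per second = n * 1001 / 1200
    return (n * 1001) // 1200

def count_duplicates(num_output_frames):
    """Count output frames that repeat the previous frame's source index."""
    return sum(
        1 for n in range(1, num_output_frames)
        if source_index(n) == source_index(n - 1)
    )

total = 6000
dups = count_duplicates(total)
unique = total - dups
# If every 5th source frame were repeated we would expect unique / 5 duplicates.
skipped = unique / 5 - dups
print(unique, dups, skipped)  # 5005 995 6.0
```

In this model six duplicates are skipped over 6000 output frames, which is one per 1000 output frames.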

Cycle=5, CycleR=1 converts 29.97p to 23.976p by discarding 1 of every 5 frames. The resulting frame rate is 4/5 the original frame rate: 29.97 * 4 / 5 = 23.976 fps -- exactly what the program expected. But that removed too many frames, resulting in jerky playback. When you used Cycle=6, CycleR=1 you got 5/6 the original frame rate, 29.97 * 5 / 6 = 24.975 fps, which was then saved as if it was 23.976 fps. The result was smooth because (almost) all the original film frames were there, but since it was saved as 23.976 fps the running time was increased (i.e., it played too slowly). That appears to be a bug in the program. Try setting the decimation to mode 2. My guess is that will allow you to specify the frame rate. The author may never have come across this type of PAL to NTSC conversion.
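The arithmetic above can be checked with exact fractions. This is a small Python sketch, assuming the source rate is exactly 30000/1001 fps (the usual definition of 29.97); the helper `stretched_seconds` is a hypothetical name used only for this illustration.

```python
from fractions import Fraction

ntsc = Fraction(30000, 1001)         # 29.97 fps, exact
cycle5 = ntsc * Fraction(4, 5)       # Cycle=5, CycleR=1 keeps 4 of every 5 frames
cycle6 = ntsc * Fraction(5, 6)       # Cycle=6, CycleR=1 keeps 5 of every 6 frames
print(float(cycle5), float(cycle6))  # roughly 23.976 and 24.975

# Frames delivered relative to a true 25 fps source: one short per 1001.
print(cycle6 / 25)                   # 1000/1001

def stretched_seconds(real_seconds, true_fps, labelled_fps):
    """Playback length when frames meant for true_fps are flagged as labelled_fps."""
    return real_seconds * true_fps / labelled_fps

# Video decimated with Cycle=6 but saved as 23.976 fps plays 25/24 as long.
drift = stretched_seconds(60, cycle6, cycle5) - 60
print(float(drift))                  # 2.5
```

So under these assumptions the picture falls 2.5 seconds behind the untouched audio for every minute of video, which would explain sync that gets steadily worse.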

Last edited by jagabo; 28th Mar 2018 at 21:39.

-

-

To stabilize the subs: The basic idea is to replace the subtitles with a blend of multiple consecutive frames so that the edges aren't bouncing around. Of course, if you blindly blend frames together the rest of the picture will be messed up. So you need to isolate the subs. A distinguishing character of the subs is that they are very bright compared to most of the rest of the picture. We can use this feature to isolate them.

I started by cropping the frame down to just the area where the subs appear.

Code:
    SUBX = 400
    SUBY = 864
    SUBWIDTH = 1120
    SUBHEIGHT = 144
    subs = Crop(SUBX,SUBY, SUBWIDTH,SUBHEIGHT)

This is a box just big enough to hold the longest lines of text, and two lines of text. You will need to adjust the values if there are longer or more lines elsewhere in the video. Using just this area allows us to limit any changes to just the area where subs might appear.

I decided to reduce the width and height by half to reduce some of the noise:

Code:
    .Spline36Resize(SUBWIDTH/2, SUBHEIGHT/2)

Using a resizer that sharpens slightly brightens the white strokes and darkens the black outlines of the subs. This helps isolate the white subs. In some frames the dots above the letter i, or the crossbar of the letter e, weren't very bright.

To blend frames together I used Dup().

Code:
    .Dup(threshold=20, MaxCopies=6, blend=true, show=false)

Dup() looks at several consecutive frames. If they are very similar it blends them together (or repeats one of the frames several times). The Threshold value indicates how similar the frames have to be for blending. You need to adjust this depending on the particular usage. If the Threshold is too low frames won't be blended. If it's too high frames that shouldn't be blended will be blended (the quick transition between two consecutive subs, for example). MaxCopies=6 limits the number of blended frames to at most 6 in a row.
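The general idea of blending runs of similar frames can be sketched in Python. This is a toy model only, not the Dup plugin's actual algorithm: frames are short lists of pixel values, similarity is a plain mean absolute difference, and the names `mean_abs_diff` and `blend_similar_runs` are made up for the illustration.

```python
def mean_abs_diff(a, b):
    """Average absolute pixel difference between two frames."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def blend_similar_runs(frames, threshold, max_copies):
    """Replace each run of similar consecutive frames with their average."""
    out = []
    i = 0
    while i < len(frames):
        j = i + 1
        # Extend the run while the next frame stays close to the previous one.
        while (j < len(frames) and j - i < max_copies
               and mean_abs_diff(frames[j], frames[j - 1]) <= threshold):
            j += 1
        run = frames[i:j]
        blended = [sum(px) / len(run) for px in zip(*run)]
        out.extend([blended] * len(run))
        i = j
    return out

# Three nearly identical frames followed by a very different one.
frames = [[100, 100], [104, 96], [102, 98], [10, 200]]
result = blend_similar_runs(frames, threshold=5, max_copies=6)
print(result)  # [[102.0, 98.0], [102.0, 98.0], [102.0, 98.0], [10.0, 200.0]]
```

The first three frames are averaged into one steady frame, while the fourth differs too much and is left alone, which is the behaviour the threshold is tuned for at a subtitle change.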

So now we have some subs that don't bounce around much, but the background behind them may have blending artifacts that we don't want to see in the final result. Note how the white details in the red dress are blurred in the blended image (the dress was moving in the background). We will use Overlay() along with a mask to overlay only the white text and black outlines onto the original image. When using a mask, only the areas where the mask is white are overlaid. Areas where the mask is black are not overlaid. Areas that are shades of grey are partially overlaid (blended) with the background image. We are going to use the brightness of the text to isolate it. First a Sharpen() brightens the white text further:

Code:
    mask = subs.Sharpen(1.0)

Note that this creates a new video called mask; it does not affect the subs video. Then we use a filter that turns everything above a certain brightness to full white, and everything below that to full black:

Code:
    .mt_binarize(225)

Of course, that's only the white part of the text. We want our mask to include the black outlines as well so we "expand" the white pixels:

Code:
    .mt_expand().mt_expand().mt_expand().mt_expand()

Then we soften the edges a bit so the subs blend with the background a little better:

Code:
    .Blur(1.0).Blur(1.0).GreyScale()

The GreyScale() isn't really necessary here (only the luma channel of the mask is used by Overlay()) but I used it to show just the luma channel when I was tuning the filters.

Finally, we restore the subs/mask video back to full size:

Code:
    subs = subs.nnedi3_rpow2(4, cshift="Spline36Resize", fwidth=SUBWIDTH, fheight=SUBHEIGHT)
    mask = mask.nnedi3_rpow2(4, cshift="Spline36Resize", fwidth=SUBWIDTH, fheight=SUBHEIGHT)

And overlay them onto the original video.

Code:
    Overlay(last, subs, mask=mask, x=SUBX, y=SUBY)
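For readers who want to see the mask logic in isolation, here is a minimal Python sketch of binarize, expand and masked overlay on a tiny luma grid. It is an approximation under stated assumptions: `binarize`, `expand` and `overlay` are hypothetical stand-ins for what mt_binarize(), mt_expand() and Overlay() do conceptually, the expand is a plain 3x3 maximum, and the blur and resize steps are left out.

```python
def binarize(img, threshold):
    """Pixels above the threshold become 255, everything else 0."""
    return [[255 if px > threshold else 0 for px in row] for row in img]

def expand(img):
    """Grow white areas by one pixel using a 3x3 maximum."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            neighbours = [img[yy][xx]
                          for yy in range(max(0, y - 1), min(h, y + 2))
                          for xx in range(max(0, x - 1), min(w, x + 2))]
            row.append(max(neighbours))
        out.append(row)
    return out

def overlay(base, top, mask):
    """Mix top over base: mask 255 takes top, mask 0 keeps base."""
    return [[(b * (255 - m) + t * m) // 255
             for b, t, m in zip(brow, trow, mrow)]
            for brow, trow, mrow in zip(base, top, mask)]

original = [[50] * 5 for _ in range(3)]            # untouched picture
subs = [[20, 20, 20, 20, 20],
        [20, 20, 240, 20, 20],                     # one bright text pixel with dark outline
        [20, 20, 20, 20, 20]]
mask = expand(binarize(subs, 225))
result = overlay(original, subs, mask)
print(mask[1])    # [0, 255, 255, 255, 0]
print(result[1])  # [50, 20, 240, 20, 50]
```

Only the text pixel and its one-pixel outline are copied from the blended subs; the outer columns keep the original picture.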

The final script fragment:

Code:
    SUBX = 400
    SUBY = 864
    SUBWIDTH = 1120
    SUBHEIGHT = 144
    subs = Crop(SUBX,SUBY, SUBWIDTH,SUBHEIGHT).Spline36Resize(SUBWIDTH/2, SUBHEIGHT/2).Dup(threshold=20, MaxCopies=6, blend=true, show=false)
    mask = subs.Sharpen(1.0).mt_binarize(225).mt_expand().mt_expand().mt_expand().mt_expand().Blur(1.0).Blur(1.0).GreyScale()
    subs = subs.Spline36Resize(SUBWIDTH, SUBHEIGHT)
    mask = mask.BilinearResize(SUBWIDTH, SUBHEIGHT)
    Overlay(last, subs, mask=mask, x=SUBX, y=SUBY)

This is a slight simplification of the script I used in the earlier video sample. In that one I built two masks, one of blacks and one of whites. I expanded the black mask until it filled in all the area of the white text. Then I multiplied the two masks together (basically a logical AND). That gave me a mask that indicated only where white appeared very close to black. That prevented some other bright parts of the background from looking like text.
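The two-mask variant can be illustrated on a single row of luma values. This Python sketch is an assumption-laden toy: the thresholds 225 and 40 and the helper `expand_row` are chosen for the example and are not taken from the earlier script.

```python
def expand_row(row):
    """Grow white areas by one pixel along a single row."""
    n = len(row)
    return [max(row[max(0, i - 1):min(n, i + 2)]) for i in range(n)]

# Index 1 is bright text with a black outline; index 5 is a bright
# background highlight surrounded by mid-grey.
luma = [20, 240, 20, 120, 120, 240, 120]

white = [255 if px > 225 else 0 for px in luma]
black = [255 if px < 40 else 0 for px in luma]
black_grown = expand_row(black)

# Multiply the masks (a logical AND): keep white only where black is nearby.
text_mask = [w * b // 255 for w, b in zip(white, black_grown)]
print(text_mask)  # [0, 255, 0, 0, 0, 0, 0]
```

The highlight at index 5 is bright enough to pass the white threshold but has no black next to it, so it drops out of the combined mask.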

Last edited by jagabo; 30th Mar 2018 at 09:54.

-

An incredible post Jagabo.

You should copy & paste it elsewhere as a tutorial.

Though I'd concede, it won't be immediately popular.

In this case, it'd be worth a try because my content doesn't have any disc alternative (DVD, BluRay, etc.) with subtitles.

So these hardcoded subtitles are all that's out there.

But this is the problem. It would be a time-consuming effort to determine the:

- Width of the longest lines of text

- Maximum number of lines of text

Obviously, if you don't get it right...you risk having some key subtitled dialogue missing.

A frightening prospect, considering there'd be no alternative if I deleted the source after video-processing.

But still, an incredible effort by you Jagabo!

-

You'd have to look through the entire video to check.

Nothing will be missing: the original subtitles outside the box will still be there. Of course they'll still be bouncing. You could just enlarge the box to the full width of the video. The downside is that there may be more artifacts when bright non-sub stuff appears in the box.

If you have a better source without subtitles, you can consider using this as a basis for OCR to make soft subs for the other video. Just blacken the original video before overlaying the subs.

-

-

You're right, there are a few duplicate and missing frames. I think the bouncing subs and moving black borders at the top and bottom (and maybe the frame numbers) are making it more difficult. I found that this works better:

Code:
    LSmashVideoSource("s01e01 [Source].mp4")
    Spline36Resize(width/2, height/2)
    TDecimate(last.Crop(0,16,-0,-108), mode=2, rate=25.0, m2pa=true, clip2=last)

The decimation is based on a cropped version of the video (no subs or borders) but the output is the full frame. And m2pa=true allows for a larger lookahead.

-

-

That's how you get back the original uncropped but decimated video. It returns 'last' - the original and resized video, but after the decimation and without the cropping.

No, you're processing 2 videos, the one before the decimation (the 'last' one) and the one after.

But in this case, as we're processing one video...

-

Normally, the same clip is used for duplicate frame detection and to produce the output. When you supply clip2, the first clip is used to detect duplicate frames, but the output comes from the second clip. In my script I cropped away the black borders and subtitle area so they wouldn't interfere with duplicate frame detection. The subs weren't part of the original film, they were added later and behave differently than the film. The duplicate frame detection is based on this cropped clip, but the actual output video comes from the second clip.
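The split between a detection clip and an output clip can be sketched in Python. This is only a conceptual model with made-up names: it uses a simple "drop the most duplicate-looking frame per cycle" rule rather than TDecimate's mode=2 logic, and frames are tiny two-row grids where the bottom row plays the part of the bouncing subtitles.

```python
def crop_rows(frame, top, bottom):
    """Remove rows from the top and bottom of a frame."""
    return frame[top:len(frame) - bottom]

def frame_diff(a, b):
    """Sum of absolute pixel differences between two frames."""
    return sum(abs(x - y) for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))

def decimate(detect, output, cycle):
    """Per full cycle, drop the frame closest to its predecessor in `detect`,
    but return the surviving frames from `output`."""
    kept = []
    for start in range(0, len(detect), cycle):
        group = list(range(start, min(start + cycle, len(detect))))
        if len(group) < cycle:
            kept.extend(output[n] for n in group)
            continue
        candidates = [n for n in group if n > 0]
        drop = min(candidates, key=lambda n: frame_diff(detect[n], detect[n - 1]))
        kept.extend(output[n] for n in group if n != drop)
    return kept

# Top row = picture, bottom row = subtitles. Frame 2 repeats frame 1's
# picture, but the subtitle row changes at the same moment.
full = [[[10, 10], [200, 0]], [[20, 20], [200, 0]], [[20, 20], [0, 200]],
        [[30, 30], [0, 200]], [[40, 40], [0, 200]], [[50, 50], [0, 200]]]
detect = [crop_rows(f, 0, 1) for f in full]

result = decimate(detect, full, 6)          # detection ignores the subs row
naive = decimate(full, full, 6)             # detection sees the subs row
print(result == [full[0], full[1], full[3], full[4], full[5]])  # True
```

With the subtitle row included, the true duplicate looks like the most different frame and the wrong one is dropped; detecting on the cropped frames removes the duplicate while still returning full frames.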

-

Hey guys, this is a bit unrelated to the topic.

But I noticed Jagabo's AviSynth code included cropping values.

Can anyone tell me how to measure & determine the exact cropping value required?

Do you guys use a specific program or command?

I don't think you're using rulers on your computer screens...

-

I usually get the crop values using the Resolution Tab in Gordian Knot. Another way is to open the AviSynth script in Virtual Dub, making sure Video is in Full Processing. Then go Video->Filters->Add->Null Transform followed by Cropping and choose the X and Y values. Make sure to crop by multiples of 2 (assuming your video isn't in RGB).

I might use the values VDub gives but do not use it to do the actual crop. Transfer those values to your script properly. And then cancel out the null transform and cropping and put Video to Fast Recompress before encoding.

I'm sure there are other ways. That's just how I do it.
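As a small aid for the "multiples of 2" rule, this Python sketch rounds measured border sizes up to even numbers and prints a matching Crop() call. The measured values 15 and 107 are invented for the example, and the helper names are hypothetical; rounding up is an assumption that trims one extra pixel rather than leaving a sliver of border.

```python
def round_up_even(value):
    """Round a non-negative pixel count up to the next even number."""
    return value + (value % 2)

def crop_call(left, top, right, bottom):
    """Build an AviSynth Crop() call with even border sizes."""
    l, t, r, b = (round_up_even(v) for v in (left, top, right, bottom))
    return f"Crop({l},{t},-{r},-{b})"

print(crop_call(0, 15, 0, 107))  # Crop(0,16,-0,-108)
```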
