I am looking at a file that is designated as 1024x576 DVDrip in the file name. Since the 1024x576 resolution is not part of the DVD spec (as far as I know), I wonder how the person who ripped this DVD arrived at this resolution. The material is not available on Blu-Ray, so it's out of the question that this is a BDRip.





-

Last edited by Knocks; 10th May 2015 at 04:02.

-

It's the "square pixel" equivalent of 16:9 PAL DVD.

A 16:9 720x576 PAL DVD gets "stretched" to 1024x576 on display (1024 / 576 = 1.77778 or 16:9).
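The arithmetic behind the 1024 figure can be sketched in a few lines. This is a minimal illustration that assumes the whole 720-pixel width maps to 16:9 (the generic PAL sample aspect ratio); some tools use a slightly different ITU-based pixel shape, so treat the SAR value as an assumption. The function names are hypothetical.

```python
from fractions import Fraction


def square_pixel_width(height: int, dar: Fraction) -> int:
    """Width that displays at `dar` when every pixel is square (SAR 1:1)."""
    return round(height * dar)


def sample_aspect_ratio(width: int, height: int, dar: Fraction) -> Fraction:
    """Pixel shape (SAR) a width x height frame needs to display at `dar`.

    Assumes the full stored width maps to the display aspect ratio.
    """
    return dar * Fraction(height, width)


widescreen = Fraction(16, 9)
print(square_pixel_width(576, widescreen))        # 1024
print(sample_aspect_ratio(720, 576, widescreen))  # 64/45
print(Fraction(1024, 576) == widescreen)          # True
```

So a 720x576 frame with 64:45 pixels and a 1024x576 frame with square pixels describe the same 16:9 picture.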

It's not a very "smart" way of encoding from a DVD source, since you unnecessarily increase the dimensions and bitrate requirements. It's usually better to encode it as 720x576 with 16:9 flags.
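To put a rough number on the "bitrate requirements" point: stretching to 1024x576 gives the encoder about 42% more pixels to code. The sketch below uses bits per pixel as a crude rule of thumb, not a real quality metric; the 2 Mb/s bitrate and 25 fps are hypothetical example values.

```python
def pixel_increase(src_w: int, src_h: int, dst_w: int, dst_h: int) -> float:
    """How many times more pixels the destination frame has than the source."""
    return (dst_w * dst_h) / (src_w * src_h)


def bits_per_pixel(bitrate_bps: float, width: int, height: int, fps: float) -> float:
    """Average bits available per pixel per frame (rough rule of thumb only)."""
    return bitrate_bps / (width * height * fps)


ratio = pixel_increase(720, 576, 1024, 576)
print(f"{ratio:.3f}x the pixels")  # 1.422x the pixels

# The same (hypothetical) bitrate spread over more pixels leaves fewer bits per pixel.
for width in (720, 1024):
    print(width, round(bits_per_pixel(2_000_000, width, 576, 25), 3))
```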

But it doesn't look upscaled. It looks super clean, in fact it looks HD.

-

Last edited by Keyser; 29th Jan 2015 at 10:13. Reason: Already answered

"The greatest trick the Devil ever pulled was convincing the world he didn't exist."

Good SD video can look very good, it is often the frame blending, botched telecines, wrong interlacing, unnecessary noise, picture ruining video overlays and compression artifacts that can ruin a perfectly good SD video.

On the other hand, we can also have terrible HD. I won't mention the brain-dead choice of limiting 1080 to being interlaced. Neither will I mention the brain-dead choice of still allowing MPEG-2 for HD.

-

I think,therefore i am a hamster.

-

And calling the decision to allow MPEG-2 for HD a bad choice is idiocy too. Sorry, I couldn't come up with a better word...

-

It's not upscaled as such. Instead of being stretched from 720x576 to 1024x576 (16:9) on playback, and then upscaled if necessary to fill the screen (well, it'd really be done in one step), it's resized to 1024x576 and encoded that way.

The main reason for doing so would be that not all players support anamorphic video in MKV or MP4 files. That is, if it's encoded at 720x576 they won't stretch it to 16:9 on playback and it'll look squished. So if it's "stretched" and encoded that way, it's not an issue.
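A small sketch of why an anamorphic file can "look squished": a player that ignores the SAR flag treats every pixel as square, so 720x576 is shown at 5:4 instead of 16:9, while a 1024x576 square-pixel encode looks the same either way. The 64:45 SAR is the same generic PAL 16:9 assumption as above, and the function is hypothetical.

```python
from fractions import Fraction


def displayed_aspect(width: int, height: int,
                     sar: Fraction = Fraction(1, 1),
                     honor_sar: bool = True) -> Fraction:
    """Aspect ratio the viewer sees.

    A player that ignores the SAR flag (honor_sar=False) treats pixels as square.
    """
    effective_sar = sar if honor_sar else Fraction(1, 1)
    return Fraction(width, height) * effective_sar


anamorphic_sar = Fraction(64, 45)  # assumed generic PAL 16:9 SAR
print(displayed_aspect(720, 576, anamorphic_sar))                   # 16/9
print(displayed_aspect(720, 576, anamorphic_sar, honor_sar=False))  # 5/4 (squished)
print(displayed_aspect(1024, 576, honor_sar=False))                 # 16/9 either way
```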

I've found that if you use a sharp resizer when stretching to 1024x576, it can make the encoded video look a bit sharper when it's running full screen than if it was encoded at 720x576, and in fact it can look a bit sharper than the original DVD.

Last edited by Kerry56; 29th Jan 2015 at 14:02.

-

Maybe he's just referring to HD television, but maybe he doesn't understand that the HD TV standards support 1080p, even if it's not widely used, and even 1080p50/60. The DVB standard even included Scalable Video Coding with the 1080p50 update so older devices could still receive and decode it, although at lower frame rates (I'm not sure about ATSC).

And maybe he doesn't realise HD TV has actually been around for quite a while, and MPEG-2 was chosen for reasons other than wanting worse compression for no apparent reason.

I was reading a bit about DTV where I am (Australia) not so long ago and it seems there's no sign of moving away from mpeg2 in a huge hurry, but it's being discussed. According to what I read, most TVs/receivers produced in the last 10 years should be quite happy decoding mpeg4, but it seems the broadcasters aren't in a hurry as there's still quite a lot of "mpeg2 only" equipment in use.

I think he was actually offering the same opinion as you, but I also agree. I've seen a reasonable amount of MPEG-2 video on Blu-ray (mainly older discs) and there's nothing wrong with the quality. Huge file sizes when it's ripped, but the quality is fine.

Maybe you can also mention the old argument that it's supposedly impossible for MPEG-1 to produce good video.

MPEG-1 is fine at sufficiently high bit rates. I'm not arguing that anybody use it today, but there's nothing inherently "crappy" about it, although I would really recommend that it not be used for anything above SD video lest the bit rate requirements get crazy.

MPEG-2 is fine too at sufficiently high bit rates. Actually allowing it in HD was probably for various reasons. Some original HD TV broadcasts were in MPEG-2. And encoders were easily available that could output HD using MPEG-2 while early on in BluRay, H.264 and VC-1 were a tiny bit harder to get going. A good number of early release BluRays were done in MPEG-2 just because the tools were already there and people knew how to use them.

Even Divx is fine if you use a high enough bit rate. There's nothing inherently wrong with any of those 3 codecs and nothing that can't be solved by just throwing more bits at it.
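A back-of-the-envelope way to see why bitrate requirements "get crazy" above SD with an older codec: 1920x1080 has exactly five times the pixels of 720x576, so keeping the same bits per pixel at the same frame rate means five times the bitrate. Linear scaling is a simplifying assumption (real encoders don't scale exactly this way), and the 6 Mb/s SD figure is a hypothetical example.

```python
def scaled_bitrate(bitrate_bps: float,
                   src: tuple[int, int],
                   dst: tuple[int, int]) -> float:
    """Bitrate for the dst frame size at the same bits per pixel.

    Assumes the same frame rate and naive linear scaling with pixel count.
    """
    (src_w, src_h), (dst_w, dst_h) = src, dst
    return bitrate_bps * (dst_w * dst_h) / (src_w * src_h)


sd, hd = (720, 576), (1920, 1080)
print(scaled_bitrate(6_000_000, sd, hd) / 1e6)  # 30.0 (Mb/s)
```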

1080 is NOT "limited to being interlaced". I wish you would stop saying that. If your point is that US TV standards don't currently allow 1080p broadcasts and you want to complain about that, then that is a different animal than your blanket, misleading and wrong statement that "1080" itself doesn't allow progressive video. What do you think most Blu-ray discs are? 23.976 fps progressive 1080 video.

He just wants to throw in the "stuffy old engineers" bit, along with "interlaced=bad" bit and the "crappy 50's TV" picture again. Talk about a broken record!

Scott

-

-

I remember that just a few years back people couldn't even play Full HD 24p H.264 on their computers. Folks are still using computers that can barely play MPEG-2 even now. So why not? Maybe someone couldn't make anything but an MPEG-2 disc themselves, or a videographer in some country had no choice but to use MPEG-2, and so on. Imagine if it weren't in the specs. You can't make statements like that simply because you live in a country that disposes of every box every couple of years. Talking like that, you are laughing at people who have completely different priorities than ordering the latest electronic box from Amazon every Christmas. Supporting 50p or 60p is one thing, but being able to easily play a video at all is another.

-

Seriously why should we cater to the obstinate?

By catering we actually remove the incentives for people to finally upgrade their decade old systems.

A computer a few years old should be able to handle things.

But what about people who have 10 year old computers running 10-15 year old software and still expect that everybody downgrades to their level? Do you think that is reasonable?

Look, if they are satisfied with their 15-year-old computer running XP, fine, they can run CGA-quality videos just fine. But I believe we should not compromise standards for those who won't keep up.

No we don't. We just have a lot of people using equipment that won't work if the standard is suddenly changed.

I find it hard to believe there's so much mpeg2 only equipment in use, but I guess there must be.

Probably..... as long as you're happy to ignore the MPEG4 trial carried out in 2012, while pretending not only was it doing nothing to trial mpeg4, it was also not doing it in 3D.

And while you're at it, you'd need to furiously ignore the world's first Free-to-air 3D mpeg4 broadcast, which was a trial carried out where I live. And that was before the lights went out on analogue.

Things progress. Not always at a blistering pace, but I'm old enough to remember the B&W CRT TV days, or when home video entertainment involved a VHS tape, and I'm pretty sure I'm not currently playing a 1080p movie with a computer and displaying it on a 51" 3D Plasma TV because of the inverse proportionality of age and drive to innovate, or thanks to stuffy old engineers, or whatever other rhetoric you might care to offer.

Last edited by hello_hello; 29th Jan 2015 at 16:04.

-

How on earth does my running a computer of any age force you to downgrade to my level? What a load of nonsense.

My computer's running XP connected to a Plasma TV.

How about you answer a question, which I know you won't, given you ignored it several times previously, but what are the major advances I'm missing out on? What are the exceptional, wonderful, mind blowing tasks you can perform with your PC I can't perform with mine?

How is my using XP forcing you to compromise your standards?

The oldest software I run is over 15 years old. I started using it in the Win98 days. The last update for it included the ability to run on Win2000. It installs on XP. Getting it to run on Win7 is more of a challenge, but it can be done. It's organiser/planner/calendar software. Nothing out of this world by today's standards, but it's still quite good and I like it. I hate Outlook. How is my running my old software affecting your ability to use Outlook?

Last edited by hello_hello; 29th Jan 2015 at 15:59.

-

Ahh yes, the seventies and early 80s. That's when the economy was running well and people actually bought new things!

Back then they spent literally thousands of inflation-adjusted dollars on color TVs and VCRs, tapes, stereos, you name it! But nowadays the (late) baby boomers hardly spend anything and complain that a new computer is a "whopping" $500-$700, so they keep their 10-15 year old computers, while they had no problem forking out much more for late-seventies color TVs and VCRs (a TV and a VCR in the late seventies cost about $2,500 each in inflation-adjusted dollars).

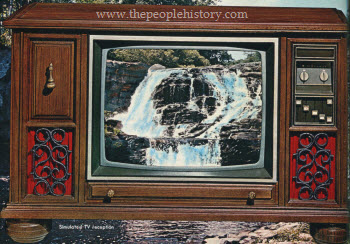

This 1970s top-model color TV cost about $4,400 in inflation-adjusted dollars. But for that you did have a remote control!

Same with computers: a basic TRS-80 from 1978 was about $1,400 in inflation-adjusted dollars. And this model was totally stripped down.

Last edited by newpball; 29th Jan 2015 at 16:02.

-

@newpball

- Actually the 90's was the "best" decade.

- Also, I don't think money has anything to do with it.

- I paid half the price for Windows 7 64-bit (new), and nowadays I can get it for a quarter of the price (new).

The way I see it:

A real pro should talk about the old things as they progress into the new stuff.

Because by explaining it to them, people will somehow better understand how all things are created.

- Things are not made overnight.

- I can't tell my granddad to shut up because he has nothing new to say.

Are you!?

P.S.

If you don't want to cater to those who are not at your level:

You should just refrain from commenting to them.

Short and simple. Right!?

Last edited by DJ_ValBec; 29th Jan 2015 at 16:33.

-

You can spout meaningless baby-boomer inflation-adjusted rhetoric as much as you like, but it doesn't change the fact that I'm still watching HD video on a 52" Plasma whether grandpa and grandma cough up $700 to send and receive the odd email on a new computer or continue using their old one.

Although if they don't upgrade soon, I guess the market for tablets and iPads is going to collapse and UHDTV will never get off the ground, or however you imagine it all works.

Why on earth do you think showing you can buy a lot more technology for your dollar today than you could 35 years ago supports your argument?

And there you go. You ignored my computer questions again. Forgive me if I don't take you seriously.

Yes, how terribly silly of me to rate the quality of a compressor on how well it compresses for a given quality. What was I thinking!

Using MPEG-2 for HD was simply an incredibly dumb decision; H.264 is far superior.

Right, so tomorrow we will trash all our hardware and buy new boxes, phones, players and faster computers because there is a superior HEVC out there... It is incredibly dumb to support H.264 any further...

Last edited by _Al_; 30th Jan 2015 at 19:01.

-

You are missing the point. There is nothing wrong with supporting an older codec, but not when it isn't necessary.

HD was a new standard which required new hardware, H.264 was already around so why would anyone insist still doing it with MPEG-2?

Right, the usual suspects! The same folks who think that 7.5 IRE is still a good idea! The same folks who wanted to disallow 1080 at 50/60p!

-

Because H.264 needs more power to decode. Do you realize when H.264, Blu-ray and HD were introduced? There was a huge amount of hardware then that could not handle H.264 HD, and some people still use it today.

I never said those things. I was one of those who could not understand not accepting 50/60p.

Nice thread hijack

It's easy to say things in hindsight or look at one viewpoint.

Early AVC encodes actually didn't look that good - they were terrible. Only when AVC encoders became more mature did they start being used more.

The same parallels can be made with HEVC now. On paper, HEVC is "X%" better, but in reality the gains aren't as high as advertised except in UHD scenarios and some types of content like animation. Similarly, AVC was advertised as "50%" better than MPEG-2, but at the time it often looked worse(!) than MPEG-2. But for UHD BD, HEVC is a must. AVC cannot compete at UHD even now, at this early stage for HEVC.

There are also other factors to consider: the actual hardware costs (encoder and decoder chips) and licensing fees (MPEG LA fees) were and are substantially higher for AVC than for MPEG-2. Even set-top boxes that can decode AVC cost more, so you get consumers complaining on top of everything. Sure, broadcasters had to make changes with the HD transition, but the cost would have been substantially higher if North America had adopted AVC, as parts of the EU do now.

And ask folks in the UK what they think of the BBC transition to AVC. Most of them say it's terrible because all the channels dropped their bitrates by about 50-75%. Because "on paper" AVC is "X%" better than MPEG-2, that gives them a "free pass" to drop the bandwidth.
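The "free pass" arithmetic can be sketched as follows. It assumes, hypothetically, that "50% better" means AVC needs half the MPEG-2 bitrate for the same quality; under that assumption a 50% cut only breaks even, and a 75% cut leaves half the bitrate parity would require. The function name and the interpretation of "50% better" are assumptions for illustration, not measurements.

```python
def parity_ratio(claimed_saving: float, bitrate_cut: float) -> float:
    """Bitrate actually used divided by the bitrate AVC would need for
    MPEG-2-equivalent quality, if the claimed saving were true.

    1.0 means break-even; below 1.0 means less than parity.
    """
    needed = 1.0 - claimed_saving  # fraction of the old MPEG-2 rate AVC supposedly needs
    actual = 1.0 - bitrate_cut     # fraction of the old rate actually broadcast
    return actual / needed


for cut in (0.50, 0.75):
    print(cut, parity_ratio(0.50, cut))
```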

I don't know what's funnier. The way you continually ignore questions in a discussion or the way you twist the facts.

Even if you could argue HD was a new standard due to an increase in resolution, it's still digital television.

http://en.wikipedia.org/wiki/Advanced_Television_Systems_Committee_standards

ATSC, standard finalised 1995, adopted by the FCC in the USA 1996, updated 2008 to support h.264.

http://en.wikipedia.org/wiki/Digital_Video_Broadcasting#Adoption

DVB-S and DVB-C were ratified in 1994. DVB-T was ratified in early 1997. The first commercial DVB-T broadcasts were performed by the United Kingdom's Digital TV Group in late 1998.

http://en.wikipedia.org/wiki/DVB-T2#The_DVB-T2_specification

DVB-T2, around 2009.

When the digital terrestrial HDTV service Freeview HD was launched in December 2009, it was the first DVB-T2 service intended for the general public. As of November 2010, DVB-T2 broadcasts were available in a couple of European countries.

The earliest introductions of T2 have usually been tied with a launch of high-definition television.

http://en.wikipedia.org/wiki/H.264/MPEG-4_AVC#cite_note-JVTsite-2

h264 introduced 2003, Fidelity Range Extensions 2004, Scalable Video Coding 2007, Multiview Video Coding 2009.

You seem to ignore the fact that h264 was evolving. Hardware has evolved quite a bit. Only a few years ago, only high-end portable devices could play 1080p30; now the average phone has a camera capable of doing better, and it won't be long before you'll find free 1080p60 decoders at the bottom of breakfast cereal boxes.

Which folks are they exactly? Imaginary folks? Folks you've invented while hoping nobody will notice?

Or could they be the same folks responsible for the introduction of UHDTV?

http://en.wikipedia.org/wiki/IRE_%28unit%29

Isn't 7.5 IRE an NTSC thing? i.e. analogue? Composite video signals? Nothing to do with the discussion? Or are there really folk insisting on 7.5 IRE analogue NTSC for HD TV also, or however that'd work? Or are you erroneously referring to the limited/full range levels issue, while forgetting that without limited range levels there'd probably be no xvYCC colour?

Last edited by hello_hello; 31st Jan 2015 at 01:05.

-

Indeed, but guess what?

Even at half that rate you run into issues. You can record it 1080p 30fps on a smart phone certainly, but you can't even burn a Blu-ray with it because brilliant engineers decided that's "too high end".

Give me one technical reason why a Blu-ray disk could not hold 1080 at 30 frames per second while it can hold 1080 at 60 fields per second just fine.

Right the answer is of course there is no technical reason whatsoever.

What if we double it? Could a Blu-ray player handle it? It could. It is supposed to handle about 40 Mb/s; certainly that is tight, but doable with 1080/60.
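The pixel-rate side of this argument is easy to check. Treating 1080i at 60 fields per second as 60 pictures of 1920x540 (nominal rates; real NTSC-derived rates are 59.94/29.97), it carries exactly the same number of pixels per second as 1080p30, and 1080p60 is double that. The sketch also divides the roughly 40 Mb/s figure quoted above by each rate; bits per pixel is only a rough indicator, and the helper name is hypothetical.

```python
def pixel_rate(width: int, lines_per_picture: int, pictures_per_second: int) -> int:
    """Pixels per second for a given picture size and picture (frame or field) rate."""
    return width * lines_per_picture * pictures_per_second


formats = {
    "1080i60 (60 fields of 540 lines)": pixel_rate(1920, 540, 60),
    "1080p30": pixel_rate(1920, 1080, 30),
    "1080p60": pixel_rate(1920, 1080, 60),
}

MAX_BPS = 40_000_000  # approximate figure quoted in the thread, not a spec value
for name, rate in formats.items():
    print(f"{name}: {rate:,} px/s, {MAX_BPS / rate:.3f} bits/px at 40 Mb/s")
```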

Last edited by newpball; 30th Jan 2015 at 23:46.

-

You're probably correct about the right answer.

http://www.x264bluray.com/home/1080i-p

NB: the following two streams are encoded using fake-interlaced mode. This allows the stream to be encoded progressively yet flagged as interlaced.

1080p25

1080p29.97

You're probably getting the time line confused again. Bluray was released way before consumer devices could record at high frame rates.

I imagine you're correct about there being no technical reason "today". It's just not part of the official standard. Have you been testing current players to confirm they won't play it? You must know the players I'm referring to. The ones with USB inputs capable of playing High Profile 4.1 without any official "Blu-ray" encoder restrictions, even though I'm pretty sure USB playback isn't part of the official Blu-ray spec. Actually, these days, that'd be pretty much all of them, wouldn't it?

Was there any 1080p30 content being produced when Bluray was released?

Yeah, I'd imagine Bluray players contain technology similar to all other electronic devices, giving them almost infinite forward compatibility. That's how it usually works isn't it? Or do you think the upcoming UHD Bluray standard might be able to handle it?

I'd imagine all players should be able to play 1080p60 if the Bluray VBV restrictions are tied directly to the bitrate alone. Is that how it works?
