Here are a few links that show how 4:2:0 is subsampled in PAL DV (which differs from MPEG-2/DVD 4:2:0).

http://www.afterdawn.com/glossary/terms/dv.cfm (scroll down to 'Chroma Subsampling')

http://www.adamwilt.com/pix-sampling.html

http://www.lafcpug.org/Tutorials/basic_chroma_sample.html

Except for the first link, they all seem to indicate that Cb and Cr alternate vertically, so each line would carry either all Cb or all Cr. But the first link indicates something else: it shows Cb and Cr subsampled like 4:2:2 on half of the lines and no chroma at all on the other half.

Which one is correct?


-

-

At this moment, it's just gearhead curiosity coupled with an affinity for technical information/knowledge.

I don't have the resources, ability or time right now. But if things change in the future, sure. -

I believe the last one (alternating lines of chroma) is closest to the way the data is treated within the DV stream. Maybe JohnnyMalaria will confirm this. But it is of no practical consequence when using DV codecs. A DV encoder will typically accept YUY2, YV12, or RGB data as input and produce those as output, so exactly how the data is dealt with internally doesn't matter.

-

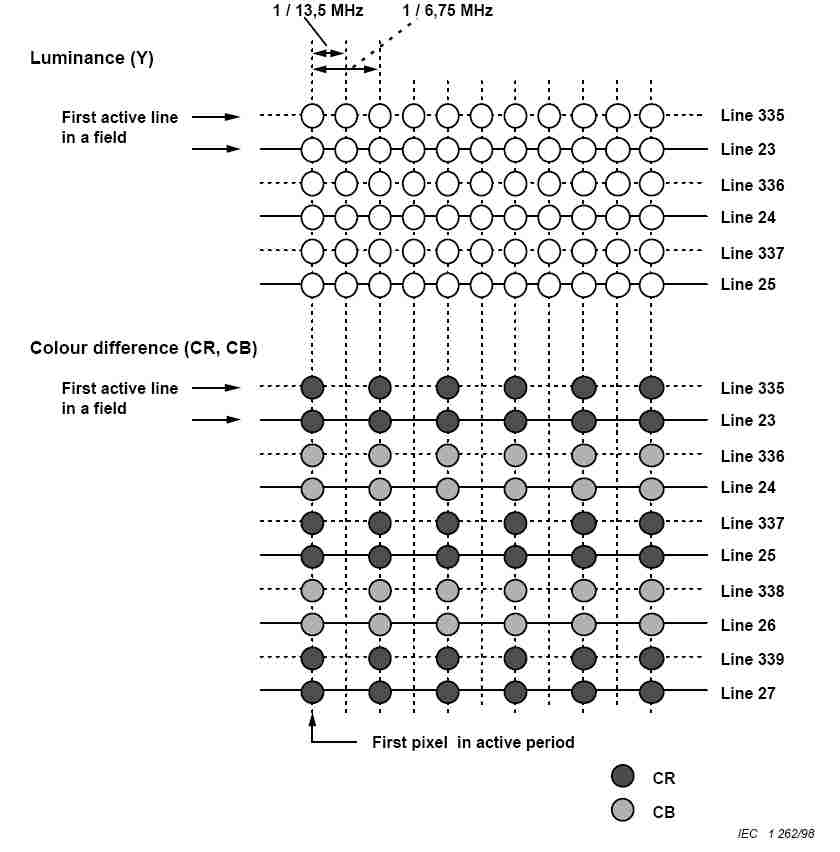

As jagabo typeth, so I findeth. Here is the official PAL DV sampling scheme taken from IEC 61834-2:

I agree that the practical consequences of using one vs. another are minimal.

John Miller -

Thanks for posting the info. That clears it up.

What I like about the PAL DV subsampling scheme compared to NTSC DV (4:1:1) and DVD (MPEG-2/YV12) is that every other pixel has at least one 'known' chroma value, unlike the other two, which either have no chroma at all for 3 consecutive pixels or have it averaged out between two or more pixels.

My camera (a Sony MiniDV) has an option to switch between PAL and NTSC. It would be interesting to see the difference in visual quality, if there is any.

And more than a new 'DV' encoder/decoder specifically, I am interested in pursuing a more balanced chroma subsampling/upsampling scheme in general. -
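To make the comparison concrete, here is a small Python sketch that models which luma pixels on a line have a co-sited chroma sample in each scheme, and reports the longest run of pixels without one. It is a simplified model, not code from the standards: it assumes PAL DV puts Cr on even lines and Cb on odd lines (the order is an assumption), NTSC DV 4:1:1 co-sites both components with every fourth luma sample, and MPEG-2 4:2:0 chroma sits vertically between luma lines, so no luma pixel has a co-sited sample. The function names are hypothetical.

```python
def cosited_chroma(scheme: str, x: int, y: int) -> set:
    """Chroma components whose sample is co-sited with luma pixel (x, y).

    Simplified model; the Cr/Cb line order for PAL DV is an assumption.
    """
    if scheme == "ntsc_dv_411":
        # Cb and Cr on every line, co-sited with every 4th luma sample
        return {"Cb", "Cr"} if x % 4 == 0 else set()
    if scheme == "pal_dv_420":
        # One component per line, alternating line by line,
        # co-sited with every 2nd luma sample
        if x % 2:
            return set()
        return {"Cr"} if y % 2 == 0 else {"Cb"}
    if scheme == "mpeg2_420":
        # Chroma lies vertically between luma lines: never co-sited with a luma pixel
        return set()
    raise ValueError(f"unknown scheme: {scheme}")


def longest_gap(scheme: str, width: int = 16, y: int = 0) -> int:
    """Longest run of consecutive pixels on line y with no co-sited chroma sample."""
    run = best = 0
    for x in range(width):
        if cosited_chroma(scheme, x, y):
            run = 0
        else:
            run += 1
            best = max(best, run)
    return best


for s in ("pal_dv_420", "ntsc_dv_411", "mpeg2_420"):
    print(s, longest_gap(s))
```

On a 16-pixel line this gives a gap of 1 for PAL DV, 3 for NTSC DV and 16 (no co-sited samples at all) for MPEG-2 4:2:0. Note that co-siting only describes where a sample sits, not how its value was computed.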

In reply to Movie-Maker:

Just because a particular chroma value lies next to a luma value doesn't mean it's not an average of four original values. Using a point resizing method like that would lead to moiré and stairstep artifacts, and to strobing during motion.
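A minimal Python sketch of the difference, using a chroma checkerboard (the worst case for 4:2:0). `point_sample_420` keeps the top-left sample of each 2x2 block; `average_420` takes the mean of all four. Both are illustrative helpers, not code from any real DV encoder.

```python
def point_sample_420(plane):
    """Keep the top-left chroma sample of each 2x2 block (point resizing)."""
    return [row[0::2] for row in plane[0::2]]


def average_420(plane):
    """Replace each 2x2 block with the mean of its four chroma samples."""
    out = []
    for y in range(0, len(plane) - 1, 2):
        top, bottom = plane[y], plane[y + 1]
        out.append([
            (top[x] + top[x + 1] + bottom[x] + bottom[x + 1]) / 4
            for x in range(0, len(top) - 1, 2)
        ])
    return out


def checkerboard(size, phase=0, a=100, b=200):
    """Chroma plane alternating between a and b every pixel; phase shifts it by one pixel."""
    return [[a if (x + y + phase) % 2 == 0 else b for x in range(size)]
            for y in range(size)]


for phase in (0, 1):
    plane = checkerboard(4, phase)
    print(phase, point_sample_420(plane), average_420(plane))
```

Shifting the pattern by one pixel flips the point-sampled result from all 100 to all 200, which is the strobing described above when fine color detail moves; the averaged result stays at 150 either way.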

In reply to Movie-Maker:

That's easy enough to test. Use a chart with sharp edges between colors of the same luma but different chroma.
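One way to build such a chart, sketched in Python: pick two (Cb, Cr) pairs, convert each to 8-bit RGB at the same luma with the BT.601 equations, and lay them out as 1-pixel stripes. This assumes full-range normalized values (studio-range scaling is omitted for simplicity); the function names and the chosen chroma values are arbitrary.

```python
def ycbcr601_to_rgb8(y, cb, cr):
    """Full-range BT.601 YCbCr (y in 0..1, cb/cr in -0.5..0.5) to 8-bit RGB."""
    r = y + 1.402 * cr
    g = y - 0.344136 * cb - 0.714136 * cr
    b = y + 1.772 * cb
    return tuple(max(0, min(255, round(c * 255))) for c in (r, g, b))


def luma601(rgb):
    """BT.601 luma of an 8-bit RGB tuple."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b


def equal_luma_chart(width, height, stripe=1, y=0.5,
                     chroma=((0.1, -0.1), (-0.1, 0.1))):
    """Rows of RGB tuples: vertical stripes that differ only in chroma."""
    colors = [ycbcr601_to_rgb8(y, cb, cr) for cb, cr in chroma]
    row = [colors[(x // stripe) % len(colors)] for x in range(width)]
    return [list(row) for _ in range(height)]


chart = equal_luma_chart(8, 2)
print(chart[0][:2], [round(luma601(p), 1) for p in chart[0][:2]])
```

Save the rows with any image library and push the chart through each format. With 1-pixel stripes every subsampled path has fewer chroma samples than stripes, so differences in how each format handles the edges become visible while the luma stays flat.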

Another thing you have to consider is how the chroma subsamples are treated by the decoder and colorspace converter when upsampling. Some will simply duplicate chroma samples; some will interpolate. -
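The two upsampling approaches can be sketched for one line of chroma in Python. Both helpers are hypothetical; `upsample_linear` assumes each chroma sample is co-sited with the first of its `factor` luma pixels and simply holds the last sample at the right edge.

```python
def upsample_repeat(chroma, factor):
    """Duplicate each chroma sample `factor` times (nearest neighbour)."""
    return [v for v in chroma for _ in range(factor)]


def upsample_linear(chroma, factor):
    """Linearly interpolate between neighbouring chroma samples."""
    out = []
    for i, v in enumerate(chroma):
        nxt = chroma[i + 1] if i + 1 < len(chroma) else v  # hold last sample at the edge
        for k in range(factor):
            out.append(v + (nxt - v) * k / factor)
    return out


line = [0, 100]  # two 4:1:1 chroma samples covering 8 luma pixels
print(upsample_repeat(line, 4))
print(upsample_linear(line, 4))
```

Repeating keeps color edges hard but blocky (4 pixels wide for 4:1:1); interpolation gives smoother gradients but softens edges.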

From a programming perspective, NTSC DV is a beee-itch. The rightmost macroblocks are different from the rest: 4:1:1 leads to 32 x 8 macroblocks, except for 16 x 16 at the right edge. PAL DV is 16 x 16 everywhere. And the superblocks are a weird L shape, more like a game of Tetris.
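The macroblock tiling described above can be checked with a little arithmetic. This Python sketch counts macroblocks for a 720x480 NTSC DV frame (22 columns of 32 x 8 plus a 16-pixel-wide strip of 16 x 16 at the right) and a 720x576 PAL DV frame (16 x 16 throughout). The helper name is hypothetical; the figure of 27 macroblocks per superblock comes from the DV format itself, not from this thread.

```python
def dv_macroblocks(width, height, ntsc_411):
    """Count DV macroblocks for one frame."""
    if not ntsc_411:
        # PAL DV (4:2:0): 16 x 16 macroblocks everywhere
        return (width // 16) * (height // 16)
    full_cols = width // 32                    # columns of 32 x 8 macroblocks
    main = full_cols * (height // 8)
    leftover = width - full_cols * 32          # 16 px for a 720-pixel line
    edge = (leftover // 16) * (height // 16)   # 16 x 16 macroblocks in the right strip
    return main + edge


ntsc = dv_macroblocks(720, 480, ntsc_411=True)
pal = dv_macroblocks(720, 576, ntsc_411=False)
print(ntsc, ntsc // 27)  # macroblocks, superblocks of 27 macroblocks
print(pal, pal // 27)
```

Every macroblock covers 256 luma pixels whatever its shape (32 x 8 or 16 x 16), so both counts tile the frame exactly, e.g. 1350 x 256 = 720 x 480.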

It was one of the biggest headaches for me. Thankfully, MPEG-2 isn't screwed up like that. Of course, it's much, much more complicated.

John Miller -

In reply to jagabo:

You mean the chroma value at a specific position indicated in a subsampling scheme may not be the actual sampled value for that specific pixel, and may be derived in some way from the chroma values of neighbouring pixels? I can understand that for schemes like MPEG's, where the chroma value is "shared" between surrounding pixels, but I can't see how that applies to schemes like 4:2:2 and 4:1:1.

-

In reply to Movie-Maker:

This depends on camcorder chipset design rather than format. Subsampled chroma can be point sampled, horizontally averaged, or even 2D/3D averaged. The standard defines chroma pixel placement*, not pixel content.
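To illustrate "placement, not content", here is a Python sketch of 4:2:2 horizontal decimation that always keeps chroma co-sited with the even luma columns but fills those positions in two different ways: point sampling, or a 3-tap [1, 2, 1]/4 average centred on the kept sample. The filter is only an example; real camera chipsets use their own filters.

```python
def decimate_422(chroma, taps=None):
    """Keep one chroma sample per two luma pixels, co-sited with even luma columns.

    taps=None gives point sampling; otherwise a symmetric FIR filter centred
    on the kept position, with edge samples clamped.
    """
    out = []
    for x in range(0, len(chroma), 2):
        if taps is None:
            out.append(chroma[x])
            continue
        half = len(taps) // 2
        acc = 0.0
        for k, t in enumerate(taps):
            i = min(max(x + k - half, 0), len(chroma) - 1)  # clamp at the line edges
            acc += t * chroma[i]
        out.append(acc / sum(taps))
    return out


line = [0, 0, 0, 100, 100, 100, 0, 0]
print(decimate_422(line))             # point sampled
print(decimate_422(line, (1, 2, 1)))  # averaged
```

Both outputs have four samples at the same positions, so both are valid 4:2:2 rasters; only the values differ.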

Where 4:2:2 or 4:1:1 to 4:4:4 playback conversion is done (e.g. for display or upsampled editing), many levels of sophistication can be applied. Reconstructed intermediate chroma pixels can be simple repeats, H averaged, 2D averaged or 3D (x,y,t) weighted to adjacent frames.

Three-sensor digital video starts with 4:4:4 RGB (or pixel-shift cheats), which is converted to various standardized YCbCr schemes to reduce transmission bitrate, then expanded back to 4:4:4 RGB for display using local algorithms. Both camera and monitor designers have room to innovate.

* The standard also defines the color space model (e.g. 601 vs 709).
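The color space model matters in practice because the RGB-to-YCbCr weights differ between the two recommendations. A minimal Python sketch using the BT.601 and BT.709 luma coefficients on normalized (0..1) RGB, ignoring studio-range scaling and offsets; the dictionary and function names are hypothetical:

```python
LUMA_COEFFS = {
    "601": (0.299, 0.587, 0.114),
    "709": (0.2126, 0.7152, 0.0722),
}


def rgb_to_ycbcr(r, g, b, matrix="601"):
    """Normalized RGB (0..1) to Y (0..1) and Cb/Cr (-0.5..0.5)."""
    kr, kg, kb = LUMA_COEFFS[matrix]
    y = kr * r + kg * g + kb * b
    cb = (b - y) / (2 * (1 - kb))
    cr = (r - y) / (2 * (1 - kr))
    return y, cb, cr


for m in ("601", "709"):
    print(m, [round(v, 4) for v in rgb_to_ycbcr(1.0, 0.0, 0.0, m)])  # pure red
```

Pure red gets Y = 0.299 under 601 but 0.2126 under 709, so decoding with the wrong matrix shifts brightness and hue even though the raster layout is identical.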

-

Is pixel content determined by pixel location?

The IEC 61834-2 standard is quite explicit:

7.4.1 Sampling structure

The sampling structure is the same as a sampling structure of 4:2:2 component television signals which is described in ITU-R Recommendation BT601-5. Sampling structures of luminance (Y) and two colour difference signals (CR, CB) are shown in table 20.

Pixel and line structures in one frame

The sampling starting point in the active period of CR and CB signals shall be the same as the sampling starting point in the active period of Y signals. Each pixel has a value from –127 to 126 which is obtained by a subtraction of 128 from the input video signal level.

John Miller -

I'm not sure that implies that the CR and CB values have to be point sampled from the higher color-resolution source. As edDV points out, in practice, cameras don't have 1:1 correspondence between CCD subpixels and image subpixels anyway.

-

I don't know. It says the chroma sampling starting point must coincide with the luma starting point. DV is sampled as D1. I agree manufacturers can play clever tricks with the imaging sensors in camera sources, but the output from the sensor should still be sampled appropriately. Few are truly 4:4:4 sensors.

Not that it really amounts to anything significant in the consumer world as far as DV -> MPEG2 is concerned (well, for PAL, at least). -

In reply to JohnnyMalaria:

That would be true if one were sampling uncompressed analog component YPbPr to the Rec. 601 standard. Most cameras do not use pixel-for-pixel sensors but interpolate YCbCr from larger (or smaller) RGB sensors or from a larger optically filtered single sensor. The interpolation differs for 4:3 and 16:9 modes. The resulting YCbCr rasters appear as Rec. 601, but the content of the pixels is the result of proprietary interpolation. That was my point.

For example, a state-of-the-art SD Ikegami HK-399W studio camera has 3x 2/3" 520,000-pixel RGB sensors, whereas the prosumer Sony PD-170 has 3x 1/3" 380,000-pixel RGB sensors. A 720x480 (704x480 active) luminance raster contains 345,600 pixels. Each 4:2:2 chroma raster (360x480) contains 172,800 pixels.

-

Out of curiosity, how much money do you have to part with to get a camcorder with a true ...RGBRGBRGB... pixel arrangement? (I use such 1/3" imaging sensors at work for microscopy etc.) Any (very high end) systems that image, record and edit in RGB? Imaging in RGB, storing/editing/distributing as YUV only to view as RGB again seems a little daft.

-

In reply to JohnnyMalaria:

Most cameras are designed for flexible formats (e.g. 4:3 and 16:9, 1080i, 720p, 576p, 576i, 480p, 480i, etc.), so they rely on scalers. Some, like JVC 720p HDV cameras, specialize in a single standard (16:9 1280x720p in this case) and scale for 480p/480i.

JVC: http://www.hdtvsupply.com/jvcgy72hdcaw.html

In reply to JohnnyMalaria:

Yes, the Sony HDCAM-SR system used for film-style production records progressive 4:4:4 RGB to tape (at 440 or 880 Mb/s).

RGB->YUV->RGB is used in broadcasting for recording and transmission efficiency. Even for uncompressed video, 4:2:2 YCbCr reduces bitrate by 33% with little perceptual loss. 4:1:1 or 4:2:0 cuts bitrate by 50% with minor perceptual loss. Reducing bit depth in the CbCr channels yields further bitrate reduction. This bitrate reduction occurs before DCT compression.
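The percentages follow from counting samples per frame. A short Python sketch (helper names are hypothetical) that counts luma plus two chroma components for each format and compares the total with 4:4:4 at equal bit depth:

```python
# Fraction of luma resolution kept in each chroma component (Cb and Cr alike)
CHROMA_FRACTION = {"4:4:4": 1.0, "4:2:2": 0.5, "4:2:0": 0.25, "4:1:1": 0.25}


def samples_per_frame(width, height, fmt):
    """Luma samples plus both chroma components for one frame."""
    luma = width * height
    return luma + 2 * luma * CHROMA_FRACTION[fmt]


def reduction_vs_444(fmt):
    """Fractional saving in uncompressed data relative to 4:4:4 at equal bit depth."""
    return 1 - samples_per_frame(1, 1, fmt) / samples_per_frame(1, 1, "4:4:4")


for fmt in ("4:2:2", "4:2:0", "4:1:1"):
    print(fmt, f"{reduction_vs_444(fmt):.0%}")
```

For a 720x480 4:2:2 frame this gives 345,600 luma samples and 172,800 per chroma component, matching the raster sizes quoted earlier in the thread.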

http://www.fourcc.org/yuv.php

http://en.wikipedia.org/wiki/YUV

http://avisynth.org/mediawiki/YV12

-

Thanks, Ed.

What I meant about the YUV bit is that prior to distribution, it makes sense to keep the entire process in the same color space as that of the imaging sensor. Obviously, at the consumer level, such a luxury is untenable. In a perfect world, I'd like to record 4:4:4 RGB (uncompressed and at least 10-bit per channel) and display it on a suitable monitor with RGB inputs and direct 1:1 pixel mapping. If only I were a Hollywood mogul.... -

In reply to JohnnyMalaria:

Yep, only Hollywood can afford that at 1920x1080p/24, but JVC (plus FCP, AVID, Matrox or Canopus) allows a similar workflow in 8-bit 4:2:0 1280x720p 24/30/60 for the prosumer little guy. You are in business for under $10K.

