http://arstechnica.com/news.ars/post/20081207-analysis-more-than-16-cores-may-well-be-pointless.html

"The work from the Sandia team, at least as it's summarized in an IEEE Spectrum article that infuriatingly omits a link to the original research, seems to indicate that 8 cores is the point where the memory wall causes a fall-off in performance on certain types of science and engineering workloads (informatics, to be specific). At the 16-core mark, the performance is the same as it is for dual-core, and it drops off rapidly after that as you approach 64 cores."

perhaps this explains why sun's T2 processor has one FPU per core (it has 8 cores, each capable of handling 8 threads, for a total of 64 threads), dual multithreaded 10 Gb ethernet integrated on the processor, an integrated cryptographic unit per core (for a total of 8 crypto units) and 8 lanes of pci express i/o expansion integrated on the chip (man, i want one of these bad boys). it may also explain why both intel and amd want to move to an integrated gpu, and why intel has said that it's working on using dram for caches instead of sram (once they go to dram they'll be able to put 1 or 2 gigs of L2 cache on chip <--man, that will be awesome!!!).

personally, i never did care much for super fast clock speeds or multi-cores (even though i have a quad core now). the P4 had 3 ALUs, all double pumped (i.e. they ran at twice the clock speed), but intel weakened the FPU in order to encourage the use of SSE2. i would much rather they had just taken the P4, made it dual core, removed all SIMD capabilities, beefed up the FPUs to 3 per core (to match the ALUs) and made the L2 as big as they could. i have a gut feeling such a chip could smoke even the i7, with 6 ALUs and 6 FPUs crunching away and a massive L2 to store everything.

too bad that there's no such thing as an open source cpu, i.e. a company you could contact, tell exactly how you want your cpu built, and have them build it for you.


Just a personal opinion, and nothing to back it up, but the motherboard and the OS would seem to be the big limitation on using multiple cores, 8 or more.

-

"8 cores is the point where the memory wall causes a fall-off in performance on certain types of science and engineering workloads"

And with other types of workloads the falloff will occur elsewhere.
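A toy way to see why the knee moves from workload to workload is a roofline-style model: each core can do a fixed number of flops per second, but all cores share one memory bus, so once the bus is saturated, extra cores add nothing. The function names and every number below (10 GFLOP/s per core, 20 GB/s of bandwidth, the bytes-per-flop figures) are made up for illustration and are not Sandia's data. This sketch only produces a plateau; the outright decline in Sandia's simulation comes from contention effects the model ignores.

```python
# Toy bandwidth-bound scaling model. All numbers are hypothetical.


def throughput_gflops(cores, per_core_gflops, bandwidth_gbs, bytes_per_flop):
    """Sustained GFLOP/s: the lesser of what the cores can compute and
    what the shared memory bus can feed them."""
    compute_limit = cores * per_core_gflops
    memory_limit = bandwidth_gbs / bytes_per_flop  # flops/s the bus can supply data for
    return min(compute_limit, memory_limit)


def saturation_cores(per_core_gflops, bandwidth_gbs, bytes_per_flop):
    """Core count at which the memory bus, not the cores, becomes the limit."""
    return bandwidth_gbs / (per_core_gflops * bytes_per_flop)


if __name__ == "__main__":
    PER_CORE = 10.0   # hypothetical GFLOP/s per core
    BANDWIDTH = 20.0  # hypothetical GB/s shared by all cores
    workloads = {"compute-heavy": 0.05, "mixed": 0.25, "data-heavy": 1.0}  # bytes per flop
    for name, bpf in workloads.items():
        knee = saturation_cores(PER_CORE, BANDWIDTH, bpf)
        curve = [throughput_gflops(n, PER_CORE, BANDWIDTH, bpf) for n in (1, 2, 4, 8, 16, 64)]
        print(f"{name:14s} stops scaling at ~{knee:g} cores: {curve}")
```

With these assumed numbers the "mixed" workload stops scaling at 8 cores, the data-heavy one at 2 and the compute-heavy one at 40: where the wall sits depends on how much memory traffic each unit of work needs.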

-

In reply to redwudz:

the OS isn't as big an obstacle as you might think. if i remember correctly, windows can scale all the way up to 256 cores (logical or physical) and linux doesn't have a practical limit; modern OS thread schedulers are actually pretty good.

the motherboard, on the other hand, is part of the whole "memory wall" that the article is talking about. in all honesty, once cpu/gpu hybrid processors that also incorporate dram for cache start hitting the mainstream, it will be a thing of the past.

then again, once that happens, the multi-core craze will go the way of the megahertz craze... -

Computers are a continual process of reducing the bottlenecks.


Here's something that baffles me w.r.t. FPUs. As discussed on AMD's developer site:

"Despite its long service in x86 architecture, x87 arithmetic is deprecated in 64-bit mode. To use x87 instructions in 64-bit mode requires no more than a recompilation with the appropriate compiler option. However, the extent to which operating systems will continue to support x87 in the future is unknown."

That's just stupid. The floating point capabilities offered by SIMD are, frankly, kindergarten arithmetic compared to the x87 instruction set. If support goes away from 64-bit compilers, just how exactly am I to calculate trigonometric functions (e.g., to change the hue of an image), gamma corrections, etc., not to mention non-video stuff such as scientific simulation? The recommendation here is that x87 code should be avoided for new projects and eventually replaced in existing codebases.

If Intel/AMD really want to enhance SIMD then they need to provide fast, parallel equivalents of the x87 instruction set.

John Miller -

In reply to JohnnyMalaria:

you hit the nail on the head. what people don't realize is that all SIMD, SSE included, speeds up math operations by using approximations, kind of like using linear approximations to estimate the area under a curve rather than doing the actual integral.

this is why no "real" scientific or business application, such as BLAST or weather predicting or market analysis applications use any form of SIMD to speed up their calculations, they need accurate, precise results.

interestingly enough, back when the itanium was first introduced, intel envisioned abandoning the x86 architecture and switching over to the ia64 ISA, a move supported by most of the major players in the tech industry, including HP and microsoft. (interesting side note: the HAL introduced with windows 2000 was meant to make the transition to ia64 seamless, as all one would need to do is swap out the existing HAL for an ia64 one and the OS and all the applications written for it could run unmodified; microsoft even offered a 64-bit win 2000 with the ia64 HAL.) the most interesting thing about this was that the itanium doesn't have any SIMD units, it just uses really, really beefy FPUs.

as for amd's seemingly bizarre recommendation, my gut feeling is that the marketing departments, rather than the engineering departments, are dictating processor development at both amd and intel. as i already stated, for reasons unknown to anyone outside of intel, starting with the P4 intel weakened the FPU in order to encourage the use of SSE2, and if you look at processor advances, they never moved away from this philosophy. SSE has always used 128-bit instructions, and with the core 2 intel beefed up the SSE unit, making it single cycle (i.e. one SSE operation can be fetched, executed and retired in one cycle, rather than being treated as 2 64-bit operations).

it looks to me like amd is of the same mindset, if you encourage developers to use SIMD instead of FPU their cpu looks better in comparison, it's about appearances rather than end results.

what both amd and intel need to be looking at is ibm's cell processor: no SIMD, just kick-ass floating point performance. that's why the fastest supercomputer in the world uses slightly modified PS3 processors (their floating point units are much faster at double precision than the PS3's) for all the computations and just uses dual-core opterons to handle I/O (i.e. feed and retrieve the data from the cells).

i remember reading an analyst's report that said they don't expect amd to survive much past 2009. i'll make an even more startling prediction: i don't expect either amd or intel to be major players in the cpu market for too much longer. they are dinosaurs, clinging to old business models (i.e. planned obsolescence and forced upgrades).

the reason i say this is because i believe discrete add-in cards will become more and more prevalent, and a better, more reasonable upgrade path than this game of new motherboard/memory/cpu every 18 months or so.

upgrade cards like these:

http://www.s3graphics.com/en/products/desktop/chrome_500/

that has GPGPU (general-purpose gpu) technology built in for $45; that's a lot of processing power just waiting for developers to take advantage of it.

or something like this:

http://www.leadtek.com/eng/tv_tuner/overview.asp?lineid=6&pronameid=447

that can be programmed for a wide variety of applications using C/C++, java or pascal

or how about something like this:

http://www.badaboomit.com/?q=node/192

which is an excellent example of using relatively cheap hardware (it can run on an 8400GS, what does that cost, $60?). it allows for running one instance per card, meaning 2 cheap 8400s will allow you to transcode 2 1920x1080 H264 MKVs to blu-ray compliant 1280x720 H264 (or vice versa) at the same time. how the hell are intel (or amd) supposed to compete with that?

i see the 2 major players in the consumer cpu market going the route of the u.s. auto industry, namely the big three, old school players, stuck in their old ways, while cheaper, more efficient competitors entered the market and took massive chunks of market share right out from under them.

those that think intel is too big to go down this road just need to remember lehman brothers, GM (who may not survive the year, and that's only a few weeks away) and chrysler (i really doubt they'll be around much longer either).

i personally was very disappointed with the i7, as it shows me that intel is still run by people that are hell-bent on sticking to the planned obsolescence model. for the i7 i would much rather they had forgotten about the hyperthreading (let's even forget about integrated gpu and dram for the foreseeable future), removed its SIMD units and just given us a quad core with really beefy ALUs and FPUs; i would have been happier than a pig in shit (and a pig in shit is one happy animal<--don't know why, maybe because it's a pig).

well, enough of my babbling. i will leave you guys with this interesting experiment i did: using tmpgenc, i transcoded the same file (an mkv, 1920x800, h264, ac3) to a dvd compliant mpeg using the dvd template. all the settings were the same except for one very big difference: in the preferences for one encode i enabled all the available SIMD extensions, and for the other i disabled them so that the FPU was used.

the end results? well, aside from the fact that without using the SIMD unit the encode took forever (i mean over 5 times as long), the encode done using the FPU had a higher quality (i had set the DC precision to 10 for both encodes and the motion estimation to highest with error correction), enough so that if i was producing a commercial dvd, i would have disabled the SIMD extensions for the final product.

just something to think about... -

This is a better article, the Ars article was very light:

Multicore Is Bad News For Supercomputers

http://www.spectrum.ieee.org/nov08/6912

"The performance is especially bad for informatics applications—data-intensive programs that are increasingly crucial to the labs’ national security function."

"For informatics, more cores doesn’t mean better performance [see red line in “Trouble Ahead”], according to Sandia’s simulation. “After about 8 cores, there’s no improvement,” says James Peery, director of computation, computers, information, and mathematics at Sandia. “At 16 cores, it looks like 2.” Over the past year, the Sandia team has discussed the results widely with chip makers, supercomputer designers, and users of high-performance computers. Unless computer architects find a solution, Peery and others expect that supercomputer programmers will either turn off the extra cores or use them for something ancillary to the main problem."

***

The real problem is for the field of informatics, not everyday computer use. So no, the sky is not falling. Multi-core processors will be fine for the rest of us, since most of us will in no way be pushing their computational and data delivery abilities to their limits.

-

"as for amd's seemingly bizarre recommendation, my gut feeling is that the marketing departments"

MS make the same recommendation, too. I first came across it in the documentation for Visual Studio 2008 when writing a 64-bit dll. Seems they opted to take FP support out of the 64-bit compiler and force SSE2 use. Not sure if that has been reversed.

I just don't understand all this SSSSSSSSSE4 fixation. Since SSE2, barely any new useful functions have been added. I'd kill for a single instruction 8x8 matrix operation. All these pseudo-telecom friendly instructions strike me as pretty useless. Any hardcore hi-tech telecom needs will use custom, embedded solutions with such things as FPGAs etc., not general purpose mainstream processors. -

In reply to JohnnyMalaria:

that just reinforces my gut feeling; it's not called the wintel architecture for nothing. when intel was planning on abandoning x86 and going with ia64, microsoft added a HAL to the NT code base and gave us win 2k. when hyperthreading was added to the P4, microsoft released xp, which recognized the difference between a logical and a physical core. microsoft didn't release a 64-bit OS until intel released a 64-bit cpu, and the DX stack has been SSE optimized since directx 6 (it might be 5), which coincided with the release of the P3, the first cpu to have SSE.

it may be that the people at amd see the trend, see intel's continuing focus on improving SSE (almost to the exclusion of everything else), see microsoft encouraging the use of SSE as a favor to intel (and in fact now forcing the use of SSE with their latest compiler) and basically see the writing on the wall.

amd seems to be in survival mode at the moment, they are ripe for a collapse, a decent 3rd player, like VIA, if they were to release a solid, properly done dual core, could put amd out of business very easily.

as for microsoft, they have always been about pushing their own agenda rather than doing what's best for the end user, just look at their J# and C# offerings, their visual basic is pretty good but in all honesty if you want to see BASIC done right look at Free BASIC and Real BASIC.

but i'll tell you what, intel and microsoft are out of their minds if they think they can deprecate x87 floating point operations and replace them with SSE/2/3/4 operations. they think that the end users, the non-commercial users, are sheep; i think what they'll find is that they will open the door to competitors they never saw coming.

i would love it if AMD took a step back, released a cpu like i outlined before (no SIMD unit, just some beefy ALU's and FPU's), dual core with lots of L2 AND developed their own linux distro, custom compiled to run like stink on a monkey when mated to a motherboard with an amd chipset and an ati video card.

not going to happen, i know, but i can dream, can't i?

In reply to JohnnyMalaria:

you're exactly right, they don't use intel (or amd) cpus, they use DSPs. again, it's what happens when the marketing department is in charge instead of the engineering department: they try to maintain market share by forcing the use of their proprietary technology, regardless of whether or not it's the best tool for the job.

this strategy eventually fails, all empires fall, the greek empire, the roman empire, the british empire, detroit's big three, the telephone giants, the huge cable giants are on their way out, the biggest and oldest banks in the world, the undefeated patriots lost in the super bowl, iron mike tyson lost, royce gracie lost, ali lost, microsoft and intel, as crazy as it sounds, will one day succumb to their own stupid decisions.

it's inevitable. -

In reply to RLT69:

stop rolling your eyes, there is a practical limit where the law of diminishing returns rears its ugly head and no matter how many cores you add, it just won't increase performance any further.

i don't pretend to be a Ph.D. in comp sci, but i did major in physics and comp sci when i was in college and while i don't have either degree, i do have about 40 credits in comp sci and i am certified as a unix system administrator, as such i have written my fair share of code.

trust me when i say that it's easy to write a single-threaded application and somewhat harder to write one with 2 threads. as the number of threads increases, so does the difficulty of preventing deadlocks, keeping track of threads, and just plain old thinking of ways to make a task more and more parallel, and you eventually get into a situation where either you can't parallelize a task any further or you run into a bottleneck where the more threads you launch, the fewer overall resources you have to work with. there are a limited number of registers on a cpu, a limited amount of cache, a limited amount of ram, a limited number of floating point units and ALUs, and limited bandwidth between the cpu and the ram. different applications and different types of threads will result in different upper limits, but make no mistake, the limit is there: if you don't run into the memory wall, you run into the parallelism wall, and if you don't run into that, you run into the register wall.

it's kind of like having 1 person carrying a box through a doorway. with a sufficiently wide doorway, more people can carry more boxes through at the same time, but as you add more people, eventually the doorway isn't big enough. if you make the doorway wider, you may find that even though more people can walk through it at the same time, the hallway at the other end isn't big enough to handle all the extra people (the same holds true for adding more doors), or that the other end can't supply the boxes fast enough. if you try to make the boxes bigger, you may find that now you need 2 people to lift each box instead of 1, and so on.
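The "parallelism wall" has a classic formulation, Amdahl's law: if a fraction p of a job's runtime parallelizes perfectly and the rest stays serial, n cores give a speedup of 1 / ((1 - p) + p / n), which can never exceed 1 / (1 - p). A minimal sketch follows; the 95% figure is an arbitrary assumption, and the law ignores memory bandwidth, locking and thread-management overhead, so real programs flatten out sooner than it predicts.

```python
def amdahl_speedup(parallel_fraction, n_cores):
    """Amdahl's law: the serial part never speeds up, the parallel part splits n ways."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / n_cores)


def amdahl_ceiling(parallel_fraction):
    """Upper bound on speedup with unlimited cores: 1 / serial fraction."""
    serial = 1.0 - parallel_fraction
    return float("inf") if serial == 0 else 1.0 / serial


if __name__ == "__main__":
    p = 0.95  # hypothetical: 95% of the runtime parallelizes perfectly
    for n in (1, 2, 4, 8, 16, 64, 256):
        print(f"{n:4d} cores -> {amdahl_speedup(p, n):6.2f}x")
    print(f"ceiling with infinite cores: {amdahl_ceiling(p):.1f}x")
```

Even with 95% of the work parallel, going from 64 to 256 cores buys comparatively little, because the 5% serial part caps the speedup at 20x.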

multi-cores are no more the answer than just increasing the clock speed; cpu makers need to start thinking about cpu design in terms other than what they have been accustomed to for the past 20+ years. -

The issue isn't really x87 fp vs SSE. There's no reason SSE can't include 80-bit extended precision in the future.

-

In reply to jagabo:

Technically no. Commercially, yes. I don't know the cost of adding one more instruction to the design but it must be a heck of a lot.

-

In reply to jagabo:

you don't seem to understand: one of the things that makes all SIMD faster is the fact that it uses lower precision than x87 floating point operations, not to mention it uses math "tricks" to estimate values.

if you recall an example i used before (the pi example), doing it via the floating point unit is the equivalent of calculating pi in the following, correct way:

$$\pi = \lim_{n \to \infty} \frac{P_n}{d}$$

where $P_n$ is the perimeter of a regular polygon with $n$ sides inscribed in the circle (i.e. a polygon that has enough bran in its diet) and $d$ is the diameter of the circle.

whereas using SIMD is like calculating pi as:

22/7

and then truncating the result after the 10th decimal place.

now sure, it's possible to enhance SIMD so that it calculates pi just like the FPU does, but then you have no performance advantage over the FPU.

in a nutshell all SIMD relies on doing less work, if it did the same amount of work as x87 fp then it would be no faster. -

In reply to deadrats:

All floating point uses estimated values on computers. The difference is the number of bits of precision. SIMD is faster not only because it uses lower precision. The use of lower precision was a decision based on the practical size of registers and internal data paths of current designs -- and current perceived need. SIMD is also faster because a single instruction can perform operations on several operands and because the more data you can keep in registers the faster the operations will be.
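The "number of bits of precision" point can be checked directly. Python floats are IEEE-754 doubles (53-bit significand), the same format SSE2's double-precision instructions operate on; the sketch below rounds values to single precision (24-bit significand, the format of SSE's packed-single operations) using the standard struct module to compare the two. It does not model x87's 80-bit extended format, which Python's float type cannot represent. The helper name to_float32 is illustrative.

```python
import math
import struct
import sys


def to_float32(x):
    """Round a Python float (an IEEE-754 double) to the nearest single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


FLOAT32_EPS = 2.0 ** -23              # gap between 1.0 and the next float32
FLOAT64_EPS = sys.float_info.epsilon  # gap between 1.0 and the next float64 (2**-52)


if __name__ == "__main__":
    pi32 = to_float32(math.pi)
    print(f"pi as float32: {pi32!r}  (error {abs(pi32 - math.pi):.2e})")
    print(f"pi as float64: {math.pi!r}")
    print(f"step above 1.0: float32 {FLOAT32_EPS:.2e}, float64 {FLOAT64_EPS:.2e}")
    # A quarter of a float32 step added to 1.0 is lost in single precision
    # but still survives in double precision.
    tiny = FLOAT32_EPS / 4
    print(to_float32(1.0 + tiny) == 1.0, (1.0 + tiny) == 1.0)
```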

-

FP SIMD instructions are very limited in scope, providing the most rudimentary operations. No sine or cosine - important for color correction. No logarithms/exponents - for gamma correction etc.
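Because gamma correction is a fixed curve, one common workaround when every input value is small (8 bits) is to evaluate pow() once per possible input and then do a table lookup per pixel. The sketch below assumes a pure power-law curve with gamma 2.2 (real video transfer functions such as BT.709 add a linear segment near black), and the function names are hypothetical. It also prints how the table grows with input precision, since it needs 2^bits entries.

```python
def build_gamma_lut(gamma, bits=8):
    """Evaluate an assumed pure power-law curve once for every possible input code."""
    max_code = (1 << bits) - 1
    inv_gamma = 1.0 / gamma
    return [round(max_code * (i / max_code) ** inv_gamma) for i in range(max_code + 1)]


def apply_lut(samples, lut):
    """Per-sample cost is now one table lookup instead of a pow() call."""
    return [lut[s] for s in samples]


if __name__ == "__main__":
    lut8 = build_gamma_lut(2.2)  # 256 entries, built once
    print(apply_lut([0, 16, 64, 128, 235, 255], lut8))
    # The table needs 2**bits entries, which is what stops this trick scaling up.
    for bits in (8, 16, 32):
        entries = 1 << bits
        print(f"{bits:2d}-bit input: {entries:,} entries ({entries * 4:,} bytes at 4 bytes each)")
```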

And the performance overhead (especially latency) for converting to and fro between integer (as used in virtually every video format) and FP is dreadful. I simply use 32-bit fixed point arithmetic where I can since this permits very efficient tricks to be used. Right now, though, to change the phase of a U and V pair, I have to read the data into 32-bit general purpose registers, convert them to FP, do the calculations and reverse the process. My only other option would be to use a look-up table which isn't practical if I am using 16- or 32-bit intermediate precision. -
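As a sketch of the fixed-point route for the U/V phase change described above: a hue shift is a rotation of the chroma vector, U' = U cos θ - V sin θ and V' = U sin θ + V cos θ, so cos θ and sin θ can be computed once per frame in floating point, scaled to integers, and every pixel then needs only integer multiplies, adds and a shift. This assumes 8-bit chroma stored with a 128 offset and a Q16.16 fixed-point format; the helper names are hypothetical.

```python
import math

FRAC_BITS = 16                # assumed Q16.16 fixed-point format
ONE = 1 << FRAC_BITS          # 1.0 in fixed point
HALF = 1 << (FRAC_BITS - 1)   # 0.5 in fixed point, added so the shift rounds to nearest


def make_rotation(degrees):
    """Do the trig once per frame: return (cos, sin) scaled to fixed-point integers."""
    theta = math.radians(degrees)
    return round(math.cos(theta) * ONE), round(math.sin(theta) * ONE)


def rotate_uv(u, v, rotation):
    """Rotate a signed (U, V) pair using only integer multiplies, adds and shifts.

    Python's >> on negative ints is an arithmetic (flooring) shift. In C the
    result of shifting a negative signed value right is implementation-defined.
    """
    cos_q, sin_q = rotation
    u2 = (u * cos_q - v * sin_q + HALF) >> FRAC_BITS
    v2 = (u * sin_q + v * cos_q + HALF) >> FRAC_BITS
    return u2, v2


def _clamp8(x):
    return max(0, min(255, x))


def shift_hue_8bit(u8, v8, rotation):
    """8-bit chroma: remove the 128 offset, rotate, restore the offset, clamp."""
    u2, v2 = rotate_uv(u8 - 128, v8 - 128, rotation)
    return _clamp8(u2 + 128), _clamp8(v2 + 128)


if __name__ == "__main__":
    rot = make_rotation(30)  # computed once, reused for every pixel
    pixels = [(200, 90), (128, 128), (60, 180)]
    print([shift_hue_8bit(u, v, rot) for u, v in pixels])
```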

just an update: the OpenCL 1.0 spec has been released:

http://arstechnica.com/news.ars/post/20081209-gpgpu-opens-up-with-opencl-1-0-spec-release.html

http://www.khronos.org/opencl/

for those that don't know, OpenCL is the api that allows a programmer to write portable code that runs on a cell processor, cpu, dsp or gpgpu without regard to what type of processor it is.

basically a developer could write an application for the PS3 and use both its cell processor and gpu as if they were one big cpu, or on the desktop they could write a multithreaded application that ran on both a quad core cpu and an sli video card setup as if they were all one massive cpu, using resources from each as he/she saw fit.

what it means for us is that we could go buy the cheapest dual core cpu on the market, the cheapest 2 gigs of ram and the cheapest motherboard, mate them to a couple of super cheap gpgpu-capable video cards, and so long as the application was an OpenCL application, we would see performance that surpasses even the priciest cpu-only solutions.

i expect to see folding@home, seti and other distributed computing applications be the first to take advantage of OpenCL, and i don't think it will be too long before we see video editing/encoding software (maybe even games) follow suit. -

Making it work on any CPU/GPU will cost you performance that can be had for creating code for specific architectures...

The code still has to be compiled for a target, though. At the end of the day, an x86 processor can only work with x86 code. If you want true portability that allows something to run on any architecture without specific compilation, you'll have to resort to some kind of interpreter or JIT compiler. -

In reply to JohnnyMalaria:

this is from the pdf:

- Enable use of all computational resources in a system
- Program GPUs, CPUs, Cell, DSP and other processors as peers
- Support both data- and task- parallel compute models

http://www.khronos.org/opencl/presentations/OpenCL_Summary_Nov08.pdf

if you look through the pdf where they give an overview of the architecture of OpenCL, it kind of looks like a miniature OS, for lack of a better term. it has a HAL and what looks like a run-time compiler; you have to see this framework, it's almost a work of art.
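To make the "data- and task-parallel" bullet concrete: in the data-parallel model you write a kernel that computes a single element and the runtime launches it once per index across a range; in the task-parallel model, different independent jobs run at the same time. The sketch below only emulates those two ideas with Python threads. It is not the OpenCL API (real OpenCL kernels are written in OpenCL C and launched through host calls such as clEnqueueNDRangeKernel), and saxpy_kernel and the launch_* helpers are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor


def saxpy_kernel(gid, a, x, y, out):
    """One work-item: computes exactly one output element, chosen by its global id."""
    out[gid] = a * x[gid] + y[gid]


def launch_data_parallel(kernel, global_size, *args):
    """Emulate a range launch: run `kernel` once for every index 0..global_size-1."""
    with ThreadPoolExecutor() as pool:
        # list() waits for every work-item and re-raises any exception from one
        list(pool.map(lambda gid: kernel(gid, *args), range(global_size)))


def launch_task_parallel(*tasks):
    """Emulate task parallelism: independent, different callables run side by side."""
    with ThreadPoolExecutor() as pool:
        futures = [pool.submit(task) for task in tasks]
        return [f.result() for f in futures]


if __name__ == "__main__":
    x, y = [1.0, 2.0, 3.0, 4.0], [10.0, 20.0, 30.0, 40.0]
    out = [0.0] * len(x)
    launch_data_parallel(saxpy_kernel, len(x), 2.0, x, y, out)
    print(out)
    print(launch_task_parallel(lambda: sum(x), lambda: max(y)))
```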

this could revolutionize computers. i always thought that eventually we would move to platform-agnostic applications, but i expected it to be Java that got us there. OpenCL looks like it could effectively make OSes as we know them obsolete; it would certainly make monolithic OSes like windows (yeah, i know, technically it is modular, but not to the end user) kind of redundant.

of course, as history has shown us in the past, the best technology doesn't always win, but you never know. -

In reply to poisondeathray:

unfortunately, this level of programming is beyond my abilities, not to mention i really really hate C/C++, but i can certainly see the potential for this technology.

-

So which of those two calculation methods will calculate pi exactly? Sounds to me like they see FPUs going away, or at least that the work they do can be farmed out to other (faster), more GPU-like structures, if available.

The biggest bottleneck in future computing will be your (net to) home bandwidth.. remote disks.. remote video.. -

Removing a particular capability from the processor would be an unprecedented move. All current desktop/laptop processors can run 8086 code. Backwards compatibility has been maintained with every new generation. Removing the FPU would eradicate whole classes of applications. Spreadsheets, accounting software, statistical software - even humble Calculator (I use it frequently) wouldn't work anymore. There's more to having a computer than games and videos. If you want that kind of specialization, buy specialized hardware. Don't remove something because it is perceived as being geeky and unglamorous.

-

The x87 instruction set will not be removed from processors and Windows support for it will not end in the foreseeable future. As JohnnyMalaria points out, virtually every Windows program would cease working.

-

In reply to RabidDog:

Pi can't be calculated exactly. -

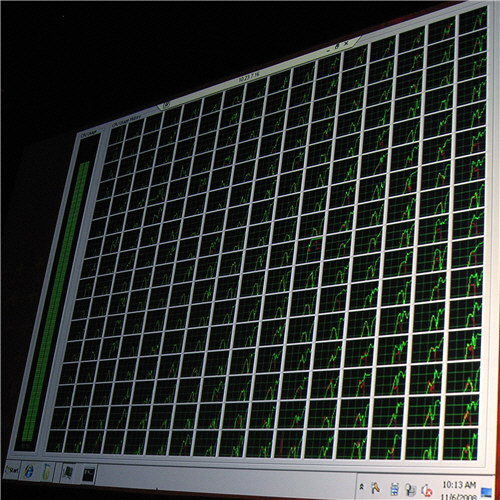

In reply to poisondeathray:

Only 256? Now, if you had included a scroll bar for the remainder of the CPUs, I would have been impressed... 8)

Have a good one,

neomaine


In reply to RabidDog:

this one:

$$\pi = \lim_{n \to \infty} \frac{P_n}{d}$$

it's a geometry-based method developed by archimedes. there are other, numerical methods, such as:

$$\pi = \frac{4}{1} - \frac{4}{3} + \frac{4}{5} - \frac{4}{7} + \frac{4}{9} - \frac{4}{11} + \cdots$$
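Both methods can be tried numerically. The sketch below uses Archimedes' side-doubling recurrence for polygons inscribed in a unit-radius circle, starting from a hexagon, rewritten as s_2n = s_n / sqrt(2 + sqrt(4 - s_n^2)) to avoid the loss of digits the textbook form sqrt(2 - sqrt(4 - s_n^2)) suffers at large n, alongside partial sums of the alternating series. Function names are illustrative. Neither method reaches pi exactly; each only gets closer as the number of sides or terms grows.

```python
import math


def archimedes_pi(doublings):
    """Perimeter / diameter of a regular polygon inscribed in a unit-radius circle.

    Starts from a hexagon (n = 6, side = radius = 1) and doubles the side count
    `doublings` times using s_2n = s_n / sqrt(2 + sqrt(4 - s_n**2)).
    """
    n, side = 6, 1.0
    for _ in range(doublings):
        side = side / math.sqrt(2.0 + math.sqrt(4.0 - side * side))
        n *= 2
    return n * side / 2.0  # perimeter n*side divided by diameter 2


def leibniz_pi(terms):
    """Partial sum of 4/1 - 4/3 + 4/5 - 4/7 + ... using `terms` terms."""
    return sum((-1) ** k * 4.0 / (2 * k + 1) for k in range(terms))


if __name__ == "__main__":
    for d in (0, 1, 5, 10, 20):
        print(f"{6 * 2 ** d:>9,} sides: {archimedes_pi(d):.15f}")
    for terms in (10, 1_000, 100_000):
        print(f"{terms:>9,} terms: {leibniz_pi(terms):.15f}")
    print(f"  math.pi       : {math.pi:.15f}")
```

The series converges slowly (the error after N terms is roughly 1/N), while twenty doublings of the polygon, about six million sides, already agree with math.pi to better than 1e-10.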

as for FPU going away, the other possibility may be that amd is further along in the development of a gpu/cpu hybrid than we have been led to believe, in which case, once we have a gpu integrated with the cpu, there's no point in using the FPU for math, as the gpu would be much faster. but that doesn't explain why microsoft (and amd) would recommend using SSE FP (and in microsoft's case actually force programmers to do so), as math done on a gpu is at least 10 times faster than doing it via SSE.
