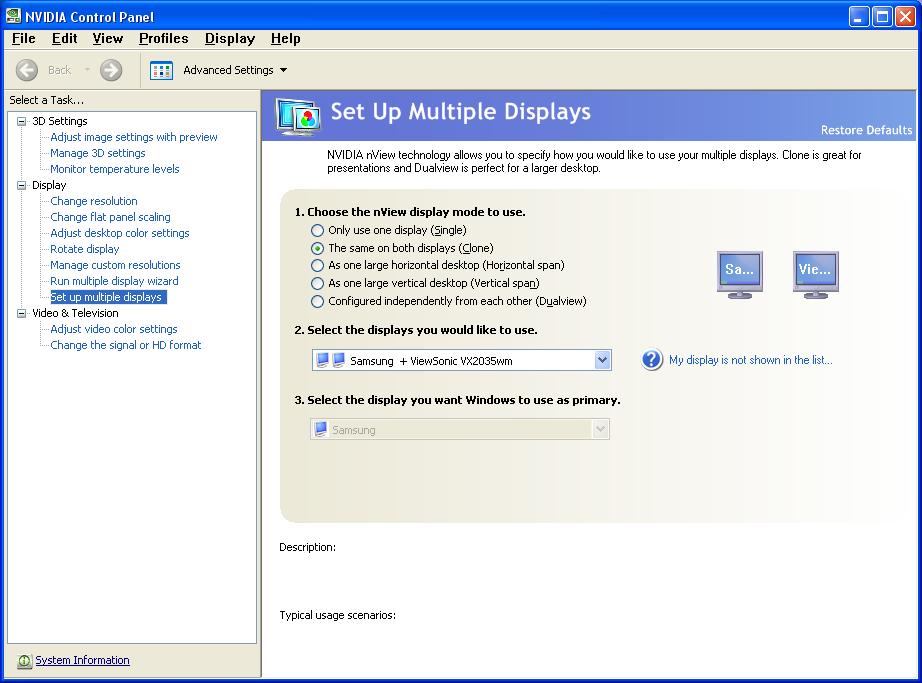

I recently got a Samsung 40" LCD HDTV, and upon getting it I purchased a DVI/HDMI cable to connect my PC so I can watch videos on the big screen without having to go through pain in the ass video converting and such. I connected it and "No Signal" was displayed under HDMI2, which was the port I connected it to. Evidently it picked something up, because the TV only shows the sources that have something connected to them when you press the source button on the remote. I used the nvidia desktop software and this actually made it work. I used the "clone" mode and it worked fine, except for having to change resolutions and options every time I connect the PC. Is there a way to connect the PC 'directly' to the TV without having to play with settings and such? When I do connect them, my desktop resolution changes so that I have to "scroll" around with my mouse, because the desktop size increases to fit the resolution of the TV.

Which brings me to my second point. When I initially connected the monitor and it was detected on the PC, it showed up just fine. Now that I've switched back to the monitor view, the monitor still works, but the "Settings" tab under display properties still shows that the TV is the monitor. Even when I physically disconnected the TV, it still says that the TV is the main monitor. And when I removed it in System under Control Panel, it still shows " on Samsung" as an option. I find this strange. Also, when I tried to straighten that out last night, the monitor came back on with a bunch of green "noise" pixels on the screen. Then it would black out, come back, black out, like it was changing resolutions or something. It kinda scared me because I didn't want my monitor to try a resolution it didn't like and "blow up". I've been using it with the "clone" option since, and that brings me to point 3.
Last night when I came in, I tried turning the TV on and it took several tries of the power button on both the remote and the TV itself to get it to come on. I was worried that the TV was having problems, but I remembered that I had just installed the DVI/HDMI cable that afternoon. Upon unplugging it, everything went back to normal: the TV comes on with one button press and the normal 2-3 second wait. I'm wondering, is there some sort of interference coming from the PC? (And yes, the PC was on at the time, it's hardly ever off.) And is there a fix for it?

And the biggie: with all this playing with resolutions, and the PC and TV getting mixed up in the display properties and such, I've heard that you can also break the TV with the wrong res. Could the interference factor and switching the display so often effectively break the TV?

Oh, also, I think it'd go without saying, but I'll say it anyway: the graphics card has 2 video outs, and both the monitor and the TV are hooked up at the same time. (I don't know how you'd mirror it without the monitor being connected, but I figured I'd spell it out. I don't mean to insult anyone's intelligence, really.)

I basically just want to know if there's a simple way of connecting the PC to the TV without having to go through the rigmarole of tweaking the nvidia software every time and mirroring the displays, thus changing the res every time, and whether the TV is in any danger from doing this through the software so often.

-

That's the one. I was hoping to just plug it in and have the res match and the TV pick up that it's connected to the PC. But if I have to use that, so long as neither device is in danger of dying, that's fine. My main concern is that, plus the fact that turning on the TV is somewhat of a chore with the HDMI cable plugged in, for whatever reason.

-

My setup is similar to yours. Nvidia 8600 GT to Samsung 46" HDTV at 1080p60 via a DVI to HDMI cable and a Viewsonic DVI monitor in clone mode. I don't have any problem turning on the TV though.

-

I've been running a setup like this for over a year now. First it was a 19" CRT and a 37" 1080p LCD on a 6600GT. Then the CRT was replaced by a 24" LCD. Then I upgraded my card to a 7600GT. Then just a few weeks ago the 37" 1080p LCD was replaced by a 47" 1080p LCD.

Originally the 37" LCD had DVI inputs, so I ran it off the second DVI output on the card. I run my displays in "Dual Display" mode because they have different resolutions. The card recognized the display over DVI when I plugged it in and enabled it at 1920x1080. However, the 47" didn't have any DVI inputs, so I had to use a DVI-HDMI converter on it. At first it wasn't recognized and the nVidia settings were still showing my old 37" monitor. All it took was a reboot to get the new TV to show up as a second display.

I don't think I'd ever run such a setup in clone mode. Having a second display is so much nicer.

FB-DIMM are the real cause of global warming

-

My TV and monitor are in different rooms. I use clone mode so I can run the HTPC from either room. The monitor has a slightly lower resolution so it scrolls around the bigger desktop.

-

Yeah, that's what I was asking/saying. I don't want to clone them, and I DO want it to be recognized at its native resolution so I can just flip it on and not have to fool with settings in nvidia's interface.

-

I don't always have that TV on even with the dual view enabled. Yeah the mouse can disappear off of one side of the screen but I still know where it is. Your windows remember where they were placed so Media Center is maximized to the TV display as are a few other windows I use when gaming (like TeamSpeak). I only touch the nVidia settings when I install a new monitor, which was only recently. I don't mess with them otherwise. The TV is connected but I power it off because it's too bright next to my other monitor while I'm gaming. That doesn't cause nVidia's driver to think it has been disconnected. It's the same as turning off the power on your monitor.

