Hey, guys! Please, help me with these questions.

I have a video file (MPEG2 640 x 480, 8 Mbps), and I need to transfer it to MiniDV tape to be shown at a local film festival (they only accept BETASP, SVHS and MiniDV). MiniDV is a good choice because it’s digital, but I’m not really familiar with the DV format. So, here are my actual questions:

1. As far as I know, “native” DV resolution is 720 x 480. Should I convert the aspect ratio from 640 x 480 to 720 x 480 using some anamorphic algorithm, or can I leave it at 640 x 480? If I leave it as is, will a MiniDV player resize the picture during playback (which is not a good thing…)?

2. Which software can convert video into AVI DV format with some aspect ratio conversion?

3. Which software do I need to transfer the AVI DV file to a camcorder? Is any camcorder fine for that purpose (cheap ones)?

Thanks!


-

-

NTSC DV only supports 720x480 at 29.97 fps. Assuming your 640x480 AVI is square pixel and 4:3 display aspect ratio you should stretch the 640x480 frame to 720x480 and encode as 4:3 DV AVI.

VirtualDub along with a suitable DV encoder like Cedocida can do the resizing and encoding. So can AviDemux.

If your source is not 29.97 fps you will need more sophisticated handling to get the best results. -

Is your 640x480 16:9? If so, you would just convert it to 720x480 with a 16:9 flag. If it's 4:3, do as jagabo suggested. If it's anything else, you'll need to either crop or add black bars to the video itself.

MiniDV uses the same 720x480 frame (720x576 for PAL) for both 16:9 and 4:3. A flag set in the header of the video says which of the two applies. Most software players will respect it and adjust the display aspect accordingly.

Proper aspect for playback from cam to TV opens a can of worms and depends on the capabilities of the TV, the camcorder and the specific situation you are in. For example, I have a prosumer Canon GL2; playback from the cam to a 4:3 TV with 16:9 material is impossible because neither my TV nor the camcorder will adjust it for the 4:3 screen. It simply plays full size and everyone looks tall and skinny. Hook it to a 16:9 TV and I'm golden. -

Thanks, jagabo and thecoalman.

My source is 30 fps, not 29.97 fps. Jagabo, what do you mean by more sophisticated handling if my video is not 29.97 fps?

thecoalman and jagabo: my 640x480 is 4:3. Are there any other ways to change the aspect ratio besides cropping or adding bars at left and right? Any kind of “smart” way? If not, how do I do it in VirtualDub? Is that done by adding a Resize filter? Which filter mode: Precise Bilinear, or one of the Precise Bicubic modes? What does the Interlaced flag mean in the Resize filter?

Basically, the video will be shown on a big theater screen through a projector… so I think they will probably copy all the videos onto 35mm film, or use a digital projector... I don’t know. Basically, my questions come down to only one: what is the best way to change the aspect ratio in my case? I don’t really like the idea of cropping. Any suggestions, please?

One more thing. Can I use any kind of MiniDV camcorder to upload the video to? Cheap ones are fine?

Thanks. -

If your 640 x 480 video is 1:1 PAR, 4:3, then you can resize it to 720 x 480 for mini DV without distortion, as the non-square pixel nature of DV will cater for it.

You cannot convert 4:3 material to 16:9 without either pillarboxing (vertical bars up each side) or cropping.

Projection of 4:3 material will not be a problem. They have done it since film began.

-

Just stretch the 640x480 frame to 720x480 and encode as 4:3 DV AVI. There's no need to crop anything. Yes, you can use the resize filter in VirtualDub. It's not likely your video is interlaced. Even if it is, you are not resizing vertically so you don't need to worry about interlace (as far as VirtualDub is concerned).

If you know AviSynth you can use that instead to avoid converting to RGB:

AviSource("path\to\your\file.avi")

LanczosResize(720, 480)

Open that script in VirtualDub and use Video -> Fast Recompress mode with Cedocida. Make sure the audio is set to 48000 Hz, 16 bit, stereo, uncompressed PCM.

Yes, any DV camcorder should be able to accept the DV data from the computer. Assuming you have a firewire port on the computer. -

ronnylov, I've heard of the pass-through feature being disabled, but this is the first mention I've heard of DV-in being disabled. It makes sense, at least as far as the law is concerned: from my understanding there is a tax on it because it's considered a video recorder. The manufacturers disable these features to make the units cheaper. Glad to see the U.S. doesn't have a monopoly on stupid politicians.

-

Thank you, jagabo and guns1inger. But I’m still having a real nightmare for the past 2 days trying to make this work… and I’m running out of options now. Please, advise!

Here’s what I have: I did my video project in Ulead Video Studio 10 (I know, this is not the best program but I went too far with it, overall it’s Ok for my needs). Original video was shot on a digital still camera in video mode. That is MPEG1 640x480 30fps 10 Mbps. I can render results into a MPEG2 640x480 30fps, 8.5 Mbps and it looks fine. (When I render it to MPEG1, I get some artifacts, but that’s probably issue with playing back; I don’t really need MPEG1 but I’ll write below why I mentioned about MPEG1). By the way, when I render, the option “create non-square pixels…” is always checked. Does that mean that the video is 1:1 PAR ?

Since I need to transfer it to a Mini DV tape, I rendered it as an AVI DV 4:3 right from my Video Studio project. It created a 720x480 29.97fps file with all those DV attributes. But here’s the problem: different software players play that file with different aspect ratios, even though I set them all to show the “original” ratio. Most players show me a 640x480 picture, just as if the file were 640x480, while “BS Player” shows a 720x480 horizontally stretched picture (with short faces). Why do most players show 640x480 when the file is 720x480? Is there any information in the header of the file which tells the player what aspect ratio to set?

I also opened that DV (720x480) file in VirtualDub and applied a “Resize” filter, changing the resolution to 640x480 and letterboxing it to 720x480, so that it added vertical bars to the video. Then I saved the file with the Cedocida DV encoder. When this file is played by most players, I get a 640x480 screen with long faces (stretched vertically) with bars on left and right. But “BS Player” shows 720x480 with bars and the right aspect ratio. Again, it seems like most players try to make a 640x480 out of it. Why? I also opened the rendered MPEG1 file in VirtualDub, added vertical bars and saved it as 720x480 DV AVI. And I get the same results: it plays back at 640x480, stretched vertically with bars.

So, I need to transfer either of these 2 files to a Mini DV tape, which will be played on a Mini DV Deck connected to a projector (probably through a mixer or a video selector of some kind). Which file should I transfer: 720x480 or 720x480 with bars where real picture is 640x480 ??? Is there going to be any resizing like software players do by reading actual resolution from video stream? When a Mini DV plays back, say, from a camcorder or standalone deck, does it send information on what is the actual resolution/aspect ratio to a TV/monitor/projector?

Please, educate me on this one. I’m afraid my video will be screwed up at the festival; either played with long faces with bars, or short faces... I don’t want to crop the video. I want it either 720x480 with side bars, or a normal 640x480 with no bars – just like software players handle that 720x480.

Thank you!!

Stan. -

The AVI format doesn't really support aspect ratios -- there is no Display Aspect Ratio in the AVI headers. Players which only examine the header data don't see the DAR information and end up displaying the video with square pixels (unless manually overridden). A program that does this will end up with a DAR that matches the relative frame dimensions. So 640x480 becomes 4:3 DAR, 720x480 becomes 3:2 DAR.

Since NTSC DV only supports one frame size (720x480) and two DARs (4:3 and 16:9), players that respect the DV DAR will use one of those aspect ratios to display the video. As far as I know, Cedocida always flags its output as 4:3. You do not want to keep your source 640x480 and add borders to the sides to make a 720x480 4:3 DAR DV AVI. Players that display this correctly will do exactly what you are describing -- squish the 720x480 frame to 640x480 (or some other 4:3 frame size), leaving pillarbox bars at the sides and a horizontally squished looking video.

This is the case where the player is ignoring the DV codec's internal DAR setting and displaying with square pixels. The AR of the picture looks right because you have encoded the video incorrectly (adding black pillarbox bars instead of stretching the source) and the player is displaying it incorrectly.
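The four combinations discussed here (stretched or pillarboxed encode, player respecting or ignoring the DV flag) can be worked through numerically. The following is only a minimal Python sketch, not something posted in the thread: the function names are invented for illustration, and it assumes a 4:3 square-pixel source, a DV frame flagged 4:3, and a player that either honours that flag or falls back to square pixels.

```python
from fractions import Fraction

FRAME_W, FRAME_H = 720, 480          # NTSC DV frame size
SOURCE_SHAPE = Fraction(640, 480)    # assumed 4:3 square-pixel source

def shown_picture_shape(picture_w, player_respects_dar):
    """Width/height of the picture content as it appears on screen.

    picture_w is how many of the 720 stored columns hold picture:
    720 for a stretched encode, 640 for a pillarboxed one.
    """
    # A DAR-aware player shows the whole frame at 4:3; a player that
    # ignores the flag shows square pixels, so the frame appears 3:2.
    frame_shape = Fraction(4, 3) if player_respects_dar else Fraction(FRAME_W, FRAME_H)
    return frame_shape * Fraction(picture_w, FRAME_W)

def distortion(picture_w, player_respects_dar):
    """1 is correct, above 1 is too wide (short faces), below 1 is too narrow."""
    return shown_picture_shape(picture_w, player_respects_dar) / SOURCE_SHAPE

for label, picture_w in (("stretched to 720", 720), ("640 plus side bars", 640)):
    for respects in (True, False):
        mode = "respected" if respects else "ignored"
        print(label, "| flag", mode, "| width error", distortion(picture_w, respects))
```

Under these assumptions the stretched file is correct only when the flag is respected (and 9/8 too wide otherwise), while the pillarboxed file looks right only in a player that ignores the flag (and 8/9 too narrow otherwise), which matches the "short faces" and "long faces" reports above.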

Transfer the former -- stretch 640x480 to 720x480, no pillarbox bars.

Yes, the DV camcorder or VCR will format the video to 4:3 or 16:9 DAR, whichever is flagged in the DV stream.

Not exactly. Standard definition NTSC video only supports one DAR, 4:3. So the camcorder will output 4:3. If the DV DAR is 16:9, the camcorder will squish the video vertically and add letterbox bars to make a 16:9 picture inside a 4:3 frame.

Once you have transferred your video to the DV camcorder, plug it into a standard definition TV with a composite or s-video cable and verify that the aspect ratio is correct.

There is still one possible source of error: if the projector they use is 16:9 it might be set up to stretch 4:3 material to fill the 16:9 screen. Most HDTVs have the option to do this because many people prefer to see the video fill the screen even if the AR is wrong (everybody looks fat). All you can do is encode correctly and hope they display it properly. -

Thank you, thecoalman and jagabo.

jagabo, I got it. Thanks. I will assume that my DV AVI (stretch 640x480 to 720x480, no pillarbox bars) is the correct file and will be played back right. Now I’m trying to get a Mini DV camcorder from someone to dump the file to it and check it on a non-HDTV, as you have suggested. I will post my results here as soon as I get that done.

!!! But let me ask you this. You have mentioned that if there’s no DAR information present then players will display video with square pixels. When I was doing all my renderings, the box “Perform Non Square Pixel Rendering” was always checked. Does that make any difference or can that cause any of the issues I have? Do you recommend square or non-square pixel rendering? Can that affect the aspect ratio when playing back on either players?

Here’s what ULEAD VS manual says about non-square pixels:

“Select to perform non-square pixel rendering when previewing your video. Non-square pixel support helps avoid distortion and keeps the real resolution of DV and MPEG-2 content. Generally, the square pixel is suited for the aspect ratio of computer monitors while the non-square pixel is best used for viewing on a TV screen. Remember to take into account which medium will be your primary mode of display.”

One more thing. When you say 4:3, do you mean 640x480 or 720x480? I understand that 4:3 is actually 640x480. But why is 720x480 called 4:3? That is confusing. When a 4:3 AVI stream tells a player that there is a 4:3 stream coming, how does the player know if there’s any video beyond the side borders of 640x480? In other words, how does it know if it’s a “real” 4:3 or an “extended 4:3” (720 instead of 640)? Let’s say there’s some video taken by a Mini DV camera and played back on a 4:3 non-HD TV: will it be played by cutting the sides off the 720x480 picture and only fitting the 640x480 on the screen? I’m just trying to understand the difference between these two 4:3 formats: 640x480 and 720x480.

thecoalman, thanks for the suggestion. I would like to try what jagabo suggests and go ahead and transfer the videos to a camcorder and see what happens – that makes perfect sense. If that doesn’t resolve the issue then I will post screenshots here and ask for your help to figure out what’s wrong. -

This is referring to the way the program displays your video while working with it or previewing it. If you turn on non-square pixel rendering, the frame you see while working will represent the final display aspect ratio of the video. The program will squish or stretch the picture as necessary. With square pixels you will see the picture at its storage aspect ratio, not the display aspect ratio. This has its advantages when you're working because resizing digital video always leads to some artifacts. Seeing your video with each pixel mapped to a pixel on the screen lets you see exactly what the source pixels look like.

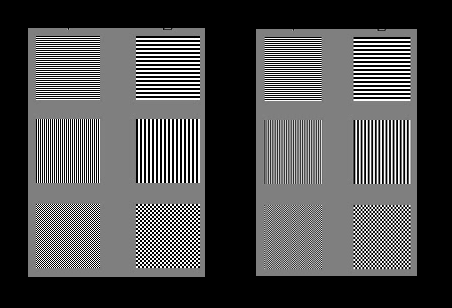

Here's an example with unresized content on the left (cropped from a 720x480 frame), a resized (to 640x480 with a common precise bicubic filter) version on th right. Notice the moire artifacts in the resized portion:

(One word of warning: your computer may be resizing the above image so you might not see it properly. The unresized version has single and double pixel wide alternating black and white lines, and single and double pixel black and white checkerboard patterns.)

When using DAR flags the player is told both the frame dimensions and the DAR at which it is to be displayed. It is then the player's responsibility to stretch or squish the frame to match the DAR. So if the player receives a 720x480 frame (3:2 storage aspect ratio) and is told to display it at 4:3 it will resize the frame for the display. It may resize to 640x480, 1024x768, 1600x1200 or something else depending on how big it's told to display (windowed, full screen, etc).
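As a rough illustration of that resizing step, here is a small Python sketch. It is an assumption-laden model rather than any real player's code: `display_size` is a made-up helper, and it supposes the player picks a target height and derives the width purely from the flagged DAR, ignoring the stored width.

```python
from fractions import Fraction

def display_size(dar, target_height):
    """Size a DAR-aware player would scale the stored frame to.

    The stored width (720 for DV) plays no part: only the flagged
    display aspect ratio and the chosen height matter.
    """
    width = Fraction(dar) * target_height
    return round(width), target_height

# A 720x480 frame flagged 4:3, shown at three different window heights.
for height in (480, 768, 1200):
    print(display_size(Fraction(4, 3), height))
```

With a 4:3 flag this gives 640x480, 1024x768 and 1600x1200 for the three heights, the same sizes mentioned above.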

In general

DAR = SAR * PAR

where:

DAR is the display aspect ratio (final shape to be displayed on-screen)

SAR is the storage aspect ratio (ratio of frame dimensions)

PAR is the pixel aspect ratio (ratio of the width and height of individual pixels)

With a 4:3 DAR DV AVI file you have:

1.33 = 1.5 * 0.89 -
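The relation DAR = SAR * PAR can be checked with exact fractions instead of rounded decimals. This is just a sketch using the simplified formula from this post (the whole stored width is treated as the displayed picture); `pixel_aspect_ratio` is a name chosen for illustration.

```python
from fractions import Fraction

def pixel_aspect_ratio(dar, width, height):
    """Solve DAR = SAR * PAR for PAR, where SAR = width / height."""
    sar = Fraction(width, height)
    return Fraction(dar) / sar

# NTSC DV, 720x480 stored frame
print(pixel_aspect_ratio(Fraction(4, 3), 720, 480))    # 4:3 flag
print(pixel_aspect_ratio(Fraction(16, 9), 720, 480))   # 16:9 flag
# A square-pixel 640x480 source
print(pixel_aspect_ratio(Fraction(4, 3), 640, 480))
```

For the 4:3 DV case the result is 8/9, which is the 0.89 in the line above; the 640x480 source comes out as 1, i.e. square pixels.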

jagabo, Thank you so much for a detailed explanation. Seems like you have a great understanding of the subject. But some things remain unclear to me:

So, if my storage aspect ratio equals the display aspect ratio equals the VS preview aspect ratio, does that mean that I had better leave square pixels?

If my storage AR is same as the display AR and I render it with non-square pixel rendering, will there be any artifacts or distortion on the rendered video?

In my situation, where I want the video to be played correctly on most hardware equipment from a Mini DV tape, is it better to render with square or non-square pixels?

Why does Ulead recommend choosing non-square pixel rendering when the video is intended to be displayed on TVs? Is that because most videos are shot on real 720x480 (3:2) cameras while most TVs are still 4:3, and choosing non-square pixels will squish the 3:2 video to 4:3?

In the first line you wrote: “stretch or squish”, and in the second: “it will resize the frame for the display”. So, will it stretch (or squish), distorting the picture, or just resize (adjust) the frame to the video? This is an important point. If a player receives video at 3:2 storage aspect ratio and is told to display it at 4:3, will it squish the video to fit in 4:3 or cut the edges off? In my case it cuts off the edges and displays my 4:3 as 4:3. Seems like this is the way it works. Is that right?

Is the same algorithm used with TVs? If a 4:3 TV gets a 3:2 stream flagged as 4:3 it cuts off the sides to display it in its native 4:3, but if it gets 3:2 flagged as 3:2 it fits the whole picture into its 4:3 and leaves bars at top and bottom. Is that right? -

First let me state that I have not used UVS in many years. Probably not since version 6 or 7. But from the description of the non-square pixel rendering setting you quoted, that setting only affects what shape (AR) the preview takes while editing. It has no effect on the saved file.

They recommend the non-square setting so that the shape (AR) of the picture you see while editing on the computer matches the shape (AR) of the picture as it will be displayed on the TV.

The player should squish the image back down to 4:3, not crop it. If you are watching on a TV it may seem cropped because TVs overscan the frame -- you don't see the outer ~5% on all four edges. Software players usually don't emulate overscan. You should see the entire frame. As noted before, software players may or may not correct the AR.

The TV never receives a 3:2 frame. The video is formatted to 4:3 (by the player) before it is sent to the TV. Standard definition NTSC TV supports only one aspect ratio: 4:3. Everything it displays is displayed at 4:3. Every source feeds it a 4:3 picture. -

By squish, do you mean making long skinny faces? If so, then how do people watch on a 4:3 TV whatever they shot on their 3:2 camcorders? Or do you mean fitting 3:2 inside of 4:3? But that would be keeping the 3:2 aspect ratio and just fitting 3:2 inside of 4:3 with bars on top and bottom.

Well... I should get a Mini DV camcorder on Tuesday or so, and I will see what happens. But I’m still uncertain on whether a theater projector would show same AR as my non-HDTV… All these thousands of different formats they made… it’s really confusing and upsetting… But thanks for your support!


-

No, everything will look normal. Just like viewing the original 640x480 image on a square pixel computer monitor. The 720x480 frame is squeezed horizontally to look like it is 640x480 on the TV.

-

Ok, I got a camera and checked the video playing on 2 non-HDTVs and one HDTV. The camera only had a composite video output. Aspect ratio is correct on all 3 TVs when the original, no-bars-video played. So, you were right jagabo and thecoalman. Thanks!

The only thing is that I do see some artifacts on TVs, but I guess that’s either bad quality camera or inevitable artifacts caused by conversion to DV AVI. There must be some distortion on HDTV because the camera was connected via composite output which should not give 640x480, should it? But non-HDTVs also give some artifacts, i.e. some hard-to-see lines on fast changing pictures or transitions… It doesn’t look really bad though… but I don’t know how it’ll be on a big projector. I also tried playing a file which was rendered with Square Pixels Rendering, and I got same results. Should I worry about it? -

It should give a 4:3 image pillarboxed on the 16:9 display. Unless the HDTV is set up to stretch the image out to 16:9 or zoom in to fill the display (lopping off the top and bottom). You should stop thinking in terms of frame size but rather in relative width and height of the image. The HDTV scales the incoming signal to match its native resolution (typically 1024x768, 1366x768 or 1920x1080) so there is no "640x480" frame anymore. It's a 4:3 image pillarboxed in a 16:9 frame.
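To put numbers on "a 4:3 image pillarboxed in a 16:9 frame", here is a minimal Python sketch. It is illustrative only: `pillarbox` is a hypothetical helper, and it assumes a display panel with square pixels that scales the image to full height without zooming or stretching.

```python
from fractions import Fraction

def pillarbox(display_w, display_h, image_dar=Fraction(4, 3)):
    """Return (image width, image height, width of each side bar) in panel pixels.

    Assumes square panel pixels and an image scaled to the full panel height.
    """
    image_w = round(display_h * image_dar)
    bar_w = (display_w - image_w) // 2
    return image_w, display_h, bar_w

print(pillarbox(1920, 1080))
print(pillarbox(1366, 768))
```

On a 1920x1080 panel this works out to a 1440x1080 picture with a 240-pixel bar on each side; nothing in the result refers to 640x480 any more, which is the point being made above.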

DV AVI is pretty clean. You shouldn't really see any artifacts from DV compression. They may have been in your MPEG source. Bad colorspace conversion can make macroblocks more visible in dark areas. Or -- lines on fast changing pictures may indicate that the source was interlaced. And what frame rate was it? Post a GSpot screen cap if you can. -

I think you didn’t understand my question. What I meant is that the quality (bandwidth) of the composite video output is low, even lower than S-video. I believe it’s 320x240 MAX, isn’t it? Composite video output should not be able to pass through 640 lines. So, the video played from the tape was 640x480 but the bandwidth was reduced by the composite output of the camera and then the HDTV had to display it back at 640x480, and that could cause some artifacts. That’s what I meant. Do you agree? Also, I think the HDTV (Sony) upconverted the low resolution 640x480 to its native resolution. Could the artifacts be caused by the upconversion circuits of the TV?

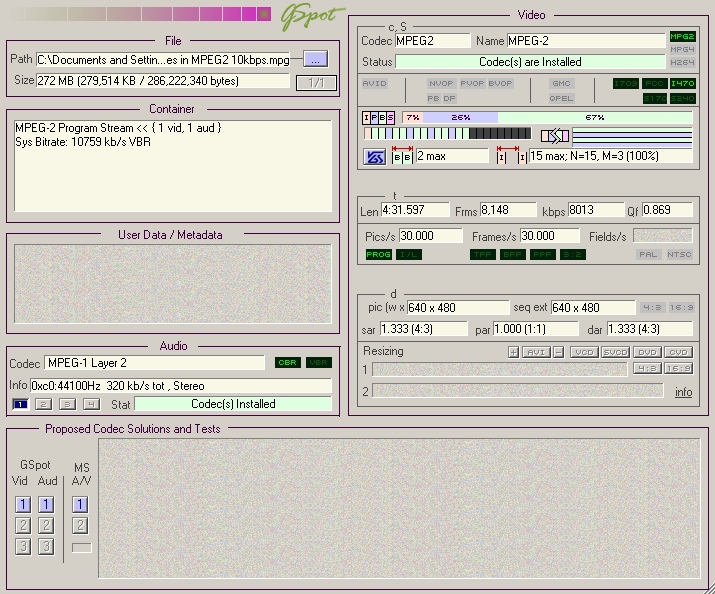

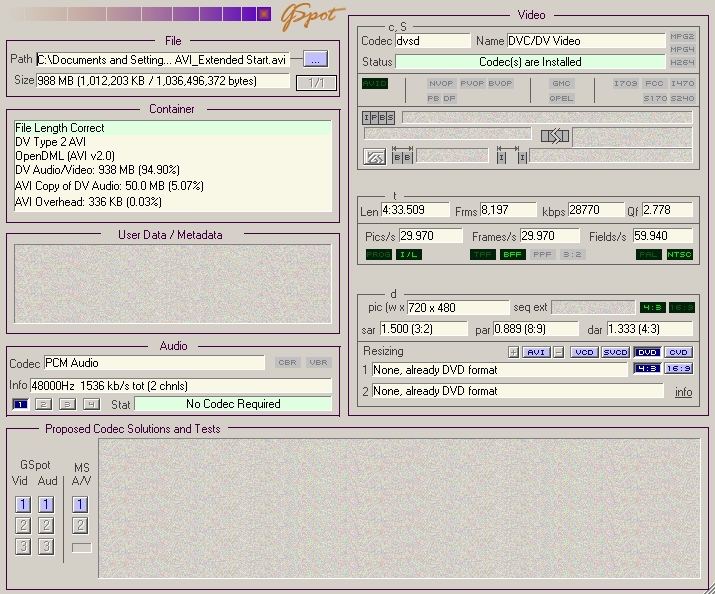

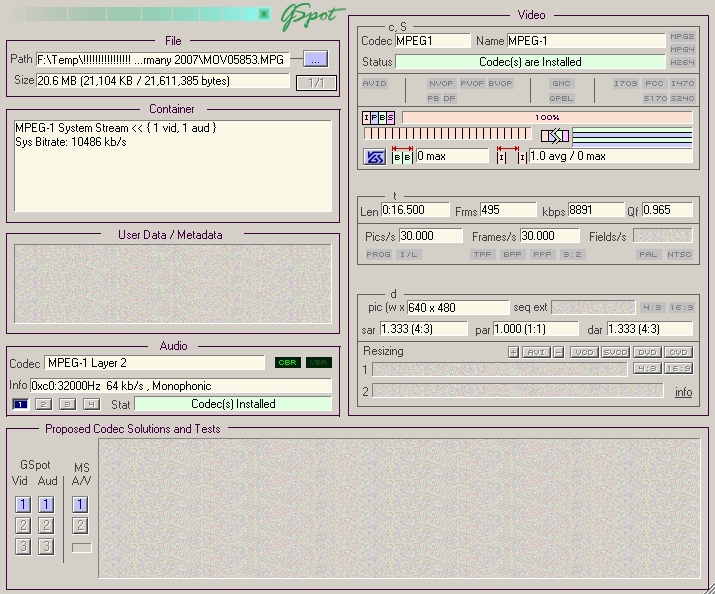

Original MPEG2 video plays fine on computer: no lines or anything. I don’t know if the source is interlaced. How do I check that? Original video was MPEG1 30 fps. DV AVI, created straight from Video Studio was, of course, 29.97 fps. Here are GSpot caps:

Original MPEG-1 (one of clips straight from camera):

-

Composite video has exactly the same vertical resolution as s-video (480 lines for purposes of this discussion). Horizontal resolution is about the same for the luma channel (b/w portion of the picture) but significantly less for the chroma channels (color information).

The vertical resolution of the composite and s-video signals is exactly the same. The horizontal resolution of the luma channels is about the same. The multiplexing of the luma and chroma channels to transmit a composite signal, and the subsequent demultiplexing to separate the channels, reduces the color resolution (and can cause the colors to shift to the right), and can create chroma artifacts like dot crawl and rainbows:

http://www.doom9.org/capture/chroma_artefacts.html

Better HDTVs have 3D comb filters to reduce dot crawl and rainbow artifacts.

In short, yes, s-video would have looked a little better than composite!

All HDTVs upconvert SD signals (composite, s-video, and component) to the display's native resolution. This process can lead to many types of artifacts including stair-steps on nearly horizontal edges and interlace comb artifacts (from imperfect deinterlacing).

Open the source video in VirtualDubMod (don't use VirtualDubMPEG2 or the regular VirtualDub). Move to a part of the video where there is significant motion and step through the frames one by one (use the left and right arrow keys). Do you see interlace comb artifacts like this (from http://www.100fps.com/ ) on at least some frames:

If so, the video is interlaced. Conversion to DV AVI may have resulted in the wrong field order. As the discussion of fixing the field order is rather involved I'll wait to hear if the video is interlaced before explaining how to fix it. -

Ok, I followed your instructions and checked the video files in VirtualDubMod. Here’s the thing. There are absolutely NO interlace comb artifacts on the source MPEG1 and the rendered final MPEG2. But there ARE interlace comb artifacts on the DV AVI video. These artifacts are present ONLY on “flashback” transitions and other effects, and they are very noticeable. There are no interlace comb artifacts on the video itself, only on effects. But for some reason I don’t see these artifacts when the file is played on most software players (except one).

Just to remind you, I was unable to open the MPEG2 in VirtualDub to convert it to DV AVI. So I figured it would be better anyway to render it into DV AVI straight from Video Studio, and so I did. There are no options to set a field order upon saving as DV AVI in Video Studio. The only thing I can do now is to render my project as MPEG1 and open the video in VirtualDub and… and how do I avoid interlace artifacts when saving it as DV AVI? -

At which step did you add the transition effects? When converting from MPEG2 to DV AVI? That would explain why there are comb artifacts in the DV AVI.

You don't see those artifacts with most players because Microsoft's DV decoder performs a BOB deinterlace to hide them from you. Most people get upset when they see "lines all over the screen".

By the way, you can render to DV AVI with VirtualDubMod. Or you can get the newest VirtualDub beta and this MPEG2 source plugin:

http://home.comcast.net/~fcchandler/Plugins/MPEG2/index.html

I see one other possible way the comb artifacts might have been introduced. The MPEG2 file is 30 fps. The DV AVI file is 29.97 fps. It's possible that Video Studio converted the frame rate by dropping one field every now and then. But this should have introduced comb artifacts in both the transitions and the original video -- some of the time. I would expect to see the video alternating between ~16 seconds of progressive frame and ~16 seconds of interlaced frames. -
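The "~16 seconds" figure follows from the field rates. The sketch below is only a check of that arithmetic, not a description of what Video Studio actually does; the function name is invented, and it assumes the converter drops exactly one field each time the 30 fps source gets a full field ahead of the 30000/1001 fps (nominally 29.97) output.

```python
from fractions import Fraction

def seconds_per_dropped_field(source_fps, target_fps):
    """Time between dropped fields when slowing source_fps down to target_fps."""
    source_fields = 2 * Fraction(source_fps)   # two fields per frame
    target_fields = 2 * Fraction(target_fps)
    surplus_fields_per_second = source_fields - target_fields
    return 1 / surplus_fields_per_second

ntsc = Fraction(30000, 1001)                   # exact NTSC frame rate
interval = seconds_per_dropped_field(30, ntsc)
print(interval, "=", float(interval), "seconds")
```

The interval is 1001/60 of a second, a little under 17 seconds. After each drop the field pairing flips, so stretches of progressive-looking frames would alternate with stretches of combed frames at roughly that period.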

Well, I opened clips from camera (MPEG1) in Video Studio, edited them, adding effects and transitions, and rendered as DV AVI. And there I got artifacts on effects. (There are no artifacts if I render as MPEG1 or MPEG2).

Ok, I think I will re-render the project as MPEG1 (same algorithm as the original video from the camera) and then open it in VirtualDubMod and render as DV AVI using the Cedocida codec with no resizing. So, a couple of questions here: is it better to render from VS into MPEG1 (same as the source video) or uncompressed AVI? (Or maybe MPEG2?...). I do have enough disk space for uncompressed AVI. So which way is better?

In VirtualDubMod, what is the difference between "fast processing"; and "full video processing" ? (don't remember exact phrasing since I'm not home now). -

Uncompressed AVI (or losslessly compressed with HuffYUV or Lagarith) will be better. Each time you use lossy codecs like MPEG and DV AVI you lose quality.

Fast recompress leaves the video in its native colorspace (typically YV12 or YUY2) and sends it to the compression codec. Normal recompress converts to RGB and sends that to the compression codec. Full Processing converts to RGB, performs any filtering you have selected, and sends RGB to the compression codec.

If Video Studio saves as uncompressed RGB the three modes will be equivalent. -

What is the right way to stretch 640x480 to 720x480 in VirtualDubMod? Should I use the Resize filter? If so, which resize algorithm is better? (In VirtualDubMod, I can't save a 640x480 file as DV AVI without stretching it to 720x480). Thanks.

-

Yes, use the resize filter. I usually use Lanczos3 (for upsizing and downsizing). It is the sharpest with minimal moire artifacts (an issue when downsizing). The Precise Bicubics are also good for upsizing, with the three variations varying in sharpness.

Yes, DV must be 720x480 29.97 fps (NTSC) or 720x576 25 fps (PAL). -

One more question, if you don’t mind. I’m using VirtualDubMod. I thought that since I chose the Cedocida codec, it would convert the frame rate to 29.97, but it didn’t. Do I have to change the frame rate manually? Is this done through the “Video Frame Rate Control Menu”? If so, there are 3 portions in that menu: “Source rate adjustment”, “Frame rate conversion” and “Inverse telecine”. Which one do I change: the “frame rate conversion”? Can I just go ahead and choose “convert to” and input 29.97?
