Source: VHS and VHS-C tapes (NTSC US)

Capture devices:

1. JVC SRMV45 S-VHS player -> Canopus ADVC110 via S-Video -> PC via Firewire

2. Old 4 head VCR -> Canon Elura 50 DV camcorder via RCA -> PC via Firewire

Capture software: winDV

Question 1:

I am confused about IRE and how it relates to my capture process. The ADVC110 allows me to toggle between 0 IRE and 7.5 IRE. I have tried capturing with both settings and the 7.5 IRE picture is a bit dark. It isn't clear to me how the two modes differ. Here is how I understand it...

"0 IRE" selected means the video signal will not be modified during the analog->digital conversion. Any superwhite and superblack data will remain where it is in the waveform. A luma value of 4, for example, will remain 4. The digital range runs from 0-255.

"7.5 IRE" selected means the video signal will be clamped and all superwhite and superblack data will be crunched into the 16-235 range. A luma value of 4, for example, will be bumped up to 16. However, since DV is full range [0-255], the levels are then stretched from 16-235 to 0-255. Basically, it makes anything below 7.5 IRE in the analog world appear pitch black (0) in the digital world.

Is this correct?

If this is correct, then I would be better off capturing at "0 IRE" to preserve the superwhite and superblack details, right? Visually this seems to be the case. The 0 IRE capture has more black/white detail and looks crisper on my LCD, even if it technically will be out of range on an old tube TV.

Question 2:

There is a big image quality difference in the resulting capture files between the JVC VCR->Canopus->PC capture and the Older VCR->Camcorder->PC capture. The latter is much brighter and seems to have more detail (less "smeared"). I have turned off all image processing in the JVC (other than the TBC) and there is still a noticeable difference. I can't imagine my cheapo VCR/Camcorder has a better analog->digital conversion than a professional SVHS deck and dedicated converter. Could something else be at play here? Does analog->digital processing differ that much between products?

Question 3:

Some of the VHS tapes I am capturing are over 20 years old. I noticed that after the first playing the film literally started to fall apart, with black chunks missing from the film. Subsequent plays show the damage. Is there a less damaging way to play these?

Thanks!


Originally Posted by binister

Not quite correct.

Analog NTSC video has black at 7.5 IRE and white at 100 IRE. Excursions above 100 IRE are allowed, but analog recording equipment uses automatic gain control (AGC) to bring white back to 100 IRE, leaving only minor excursions above.

ITU-R Rec. 601 8-bit video (e.g. DV, DVD, DVB, ATSC) places black at digital level 16 and white at 235. White excursions are allowed in the 236-255 region. Level 255 corresponds roughly to 108 IRE.

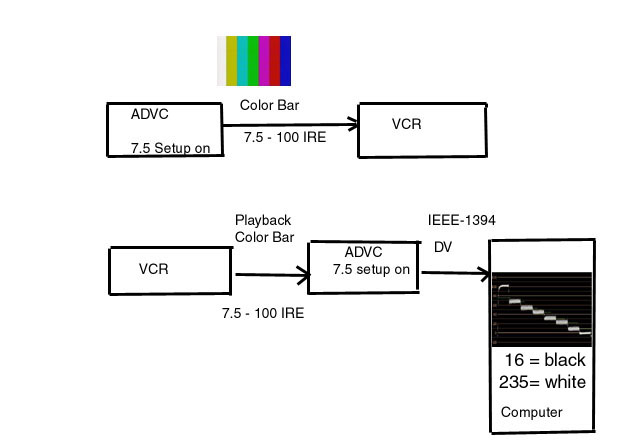

A properly adjusted NTSC VCR will output video at 7.5-100 IRE. If the ADVC-110 is switched to 7.5 IRE, black will be captured to level 16 and 100 IRE white will be captured to level 235. The ADVC-110 also outputs a 7.5-100 IRE color bar in this switch position, so you have a calibration path through the VCR to the timeline of your editor. It helps to use a waveform monitor to check levels.
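To make the numbers above concrete, here is a minimal Python sketch of the 7.5 IRE switch position, assuming the converter maps IRE to 8-bit levels linearly between the two anchor points given in the post (7.5 IRE at level 16, 100 IRE at level 235). The function names are made up for illustration, and a real converter may not be perfectly linear.

```python
# Assumed anchor points for the 7.5 IRE switch position (from the post):
# 7.5 IRE -> level 16, 100 IRE -> level 235, linear in between.
BLACK_IRE, WHITE_IRE = 7.5, 100.0
BLACK_LEVEL, WHITE_LEVEL = 16, 235


def level_to_ire(level: int) -> float:
    """Convert an 8-bit luma level to IRE under the assumed linear mapping."""
    ire_per_level = (WHITE_IRE - BLACK_IRE) / (WHITE_LEVEL - BLACK_LEVEL)
    return BLACK_IRE + (level - BLACK_LEVEL) * ire_per_level


def ire_to_level(ire: float) -> int:
    """Convert IRE to the nearest 8-bit level, clipped to the 0-255 range."""
    levels_per_ire = (WHITE_LEVEL - BLACK_LEVEL) / (WHITE_IRE - BLACK_IRE)
    level = round(BLACK_LEVEL + (ire - BLACK_IRE) * levels_per_ire)
    return max(0, min(255, level))


if __name__ == "__main__":
    # Print the IRE value for a few landmark levels.
    for level in (16, 235, 255):
        print(level, round(level_to_ire(level), 1))
```

Under this assumption level 255 works out to a little over 108 IRE, which agrees with the "roughly 108 IRE" figure above.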

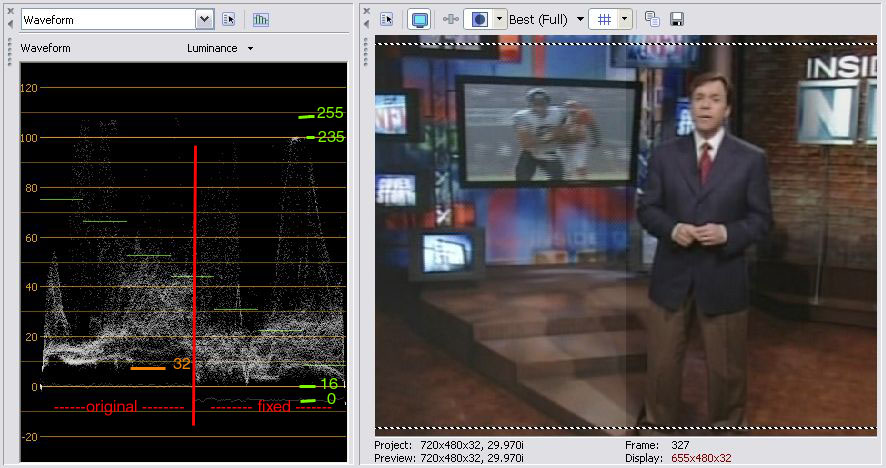

This is how a typical VCR (a JVC HR-S4800U) looks playing back the reference color bar.

If the ADVC-110 switch is in the 0.0 IRE position, 0.0 IRE is captured to level 16 and 7.5 IRE black is captured to about level 32. This causes the blacks to look washed out like this. Note that PAL video and Japanese NTSC use 0.0 IRE for black.
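The level 32 figure can be checked with a few lines of Python. This is only a sketch: it assumes the converter is linear, that white sits at 100 IRE in both switch positions, and that the switch only changes which IRE value lands on level 16. `capture_level` is a hypothetical helper, not anything from the ADVC-110 documentation.

```python
def capture_level(ire: float, black_ire: float) -> int:
    """8-bit level for an analog luma value.

    black_ire is the IRE value the converter treats as black
    (0.0 or 7.5, matching the two switch positions). White is
    assumed to sit at 100 IRE and map to level 235 in both cases.
    """
    scale = (235 - 16) / (100.0 - black_ire)
    level = round(16 + (ire - black_ire) * scale)
    return max(0, min(255, level))


if __name__ == "__main__":
    # US NTSC black (7.5 IRE) under each switch position.
    print("0.0 IRE switch:", capture_level(7.5, black_ire=0.0))
    print("7.5 IRE switch:", capture_level(7.5, black_ire=7.5))
```

With the switch at 0.0 IRE, a US NTSC black of 7.5 IRE lands about 16 levels above digital black, which is the washed-out look described above.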

See this JVC tutorial for digital vs. analog black issues. Unfortunately most DV camcorders capture black incorrectly from "pass-through" inputs* producing washed out blacks. These can be fixed in post but the best solution is to use the ADVC-110 for capture set to 7.5 IRE.

JVC Tutorial

http://pro.jvc.com/pro/attributes/prodv/clips/blacksetup/JVC_DEMO.swf
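As a rough idea of what "fixed in post" means for washed-out blacks, the sketch below pulls a raised black back down to level 16 while leaving level 235 white where it is. It assumes the raised black sits at level 32 (the figure from the 0.0 IRE case above) and works on a plain list of luma values. In practice you would use your editor's levels or color-correction filter rather than code like this, and `fix_raised_black` is a made-up name.

```python
def fix_raised_black(luma, black_in=32, black_out=16, white=235):
    """Linearly remap luma so black_in becomes black_out and white stays put.

    luma is any iterable of 8-bit values; results are clipped to 0-255.
    """
    scale = (white - black_out) / (white - black_in)
    fixed = []
    for y in luma:
        value = round(black_out + (y - black_in) * scale)
        fixed.append(max(0, min(255, value)))
    return fixed


if __name__ == "__main__":
    # Raised black, a mid gray, and nominal white.
    print(fix_raised_black([32, 128, 235]))
```

Note that anything captured below the raised black ends up below level 16 after the remap, and whites above 235 are stretched slightly, so checking the result on a waveform monitor is still worthwhile.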

* Note that this washed-out black issue only relates to the analog pass-through camcorder inputs. Video recorded from the camera and passed over IEEE-1394 will have proper level 16 blacks. Pro camcorders will output nominal white at level 235. Many consumer camcorders cheat whites up to 255, causing clipping.

Recommends: Kiva.org - Loans that change lives.

http://www.kiva.org/about

Above we are talking about getting the input side calibrated. First one should calibrate the TV monitor for accurate observation.

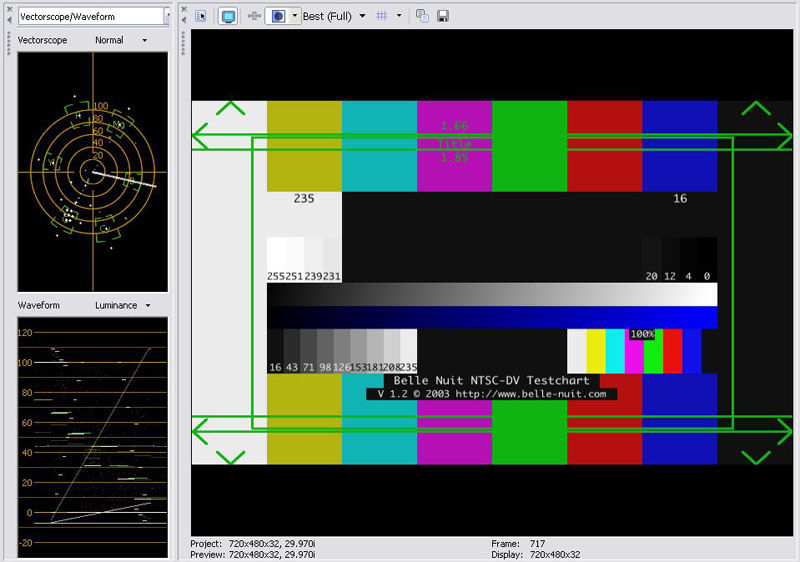

The Belle Nuit color bar is good for timeline monitor adjustment.

http://www.belle-nuit.com/testchart.html

Editing programs like Premiere and Vegas allow monitoring off the IEEE-1394 port. You use your ADVC-110 to connect your monitor, then calibrate brightness, contrast, saturation and hue to the chart. When the ADVC-110 is in 7.5 IRE mode, DV video in is converted to proper 7.5-100 IRE analog video out. A camcorder can also be used for this, but it will output 0-100 IRE, thus requiring non-standard adjustment of monitor brightness (black level).

Adjust monitor black so that you see no difference between the level 16 block and the level 0, 4 and 12 blocks, but the level 20 block shows a lighter gray.

PS: At the white end you should adjust the monitor so that differences in levels from 235 to 255 can be seen. Raise contrast to the point where levels 254 and 255 blend together, then back off until you see a difference between them.
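If you want to see what such black and white patches look like as raw levels, the following sketch builds a small grayscale pattern with the levels mentioned above and can write it as a binary PGM file. The Belle Nuit chart remains the proper tool. The patch sizes, the extra level 245 patch, and the function names are all assumptions for illustration, and getting the image onto a DV timeline without a level shift is left to your editor.

```python
# Levels taken from the calibration steps above; 245 is an extra
# intermediate white patch added for illustration.
BLACK_PATCHES = (0, 4, 12, 16, 20)
WHITE_PATCHES = (235, 245, 254, 255)


def band(levels, width, height):
    """One horizontal band of equal-width patches, one patch per level."""
    patch = width // len(levels)
    row = []
    for level in levels:
        row.extend([level] * patch)
    row.extend([levels[-1]] * (width - len(row)))  # pad any remainder
    return [list(row) for _ in range(height)]


def build_pattern(width=320, band_height=120):
    """Black patches on top, white patches below, as rows of 8-bit values."""
    return (band(BLACK_PATCHES, width, band_height)
            + band(WHITE_PATCHES, width, band_height))


def write_pgm(path, rows):
    """Write rows of 8-bit gray values as a binary (P5) PGM image."""
    height, width = len(rows), len(rows[0])
    with open(path, "wb") as f:
        f.write(f"P5\n{width} {height}\n255\n".encode("ascii"))
        for row in rows:
            f.write(bytes(row))
```

Calling `write_pgm("levels_pattern.pgm", build_pattern())` would produce the file. Viewed directly on a PC the patches will not look the way they do through the ADVC-110, because most image viewers treat the values as full-range 0-255.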


edDV,

Thank you very much for the explanation and the JVC tutorial. It definitely helped me understand IRE and black levels.

After switching the ADVC110 to 7.5 and comparing waveforms I do see the difference.

One lingering question I have is about "superblacks" with Japan NTSC or PAL. Since digital black (16) is used for 0 IRE in those formats can you really have a superblack? How can you have lower than 0 IRE in an analog world?

I noticed in my US NTSC VHS tape captures there are superblacks in the digital waveform (0-16). I assume this is because my source had sections with an IRE level lower than 7.5.

On a related note, I have seen that waveform screenshot that you used in a few forum posts. What program is that?

Finally, is there any way to accurately filter my captured video on my PC without viewing it on an NTSC monitor? I don't have access to any tube TVs as all of the TVs in my house are either LCD or PDP. -

Lots of questions. I'll try to answer each later as I am busy with yard work. But clarify what you mean by "superblacks". Info below level 16 usually means an incorrect capture. ITU-R Rec. 601 reserved the 0-15 buffer levels to allow recovery from an incorrect capture or A/D and D/A conversion. The term "superblack" is also used in production for a poor man's alpha that defines keys for graphics or titles: the edges of the graphic can be buried in the 0-15 levels for keying and stripped off later. The term was also used during the early days of DVD players, when everyone was getting levels wrong in consumer hardware and/or encoding.

If you are working with PAL or Japanese tapes/laserdiscs you will need to use the 0.0 IRE switch position. Otherwise default to 7.5 IRE for NTSC sources.

The waveform monitors are from Vegas. I think I was using v5 when I made those measurements. I'm using v8 Pro now.


In analog PAL or Japanese NTSC, black is defined as 0 IRE. Composite PAL looks like this for luminance.

In other words, all luminance is contained between 0-100 IRE with white excursions up to 120 IRE possible. Since analog video is usually clamped to blanking level, excursions below 0 IRE for luminance are not possible.

American NTSC has black defined as 7.5 IRE. While it is possible to place luminance between 0-7.5 IRE this would be considered non-standard and would look like crushed black on a properly adjusted TV.

In the digital domain, all standards have black defined at level 16 and nominal white at level 235. The 0-15 levels are there primarily to allow correction for transmission level errors without clipping. Any video placed down there will be below black level for a properly adjusted TV monitor so will be "crushed". Most DVD players will clip anything below level 16. Some players passed the 0-15 levels and called this feature "superblack". It was mainly a compensation for incorrectly encoded video raising crushed blacks to 7.5 IRE analog.
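A quick way to check whether a capture has video sitting in those buffer zones is to count luma samples outside 16-235. The sketch below does this for a plain list of 8-bit luma values and also models the "clip below 16" behavior described for most DVD players. How you extract luma from a DV file is outside its scope, and the function names are hypothetical.

```python
LEGAL_BLACK, LEGAL_WHITE = 16, 235


def range_report(luma):
    """Summarize how much of a luma sample list falls outside 16-235."""
    if not luma:
        raise ValueError("luma must contain at least one sample")
    total = len(luma)
    below = sum(1 for y in luma if y < LEGAL_BLACK)
    above = sum(1 for y in luma if y > LEGAL_WHITE)
    return {
        "min": min(luma),
        "max": max(luma),
        "below_black": below / total,  # fraction of samples under level 16
        "above_white": above / total,  # fraction of samples over level 235
    }


def clip_below_black(luma):
    """Model a player that clips everything below level 16 up to 16."""
    return [max(LEGAL_BLACK, y) for y in luma]
```

A large `below_black` fraction suggests the capture levels were off (or the source itself had low blacks), which is easier to confirm on a waveform monitor.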

If the source of those tapes was broadcast or cable TV, either the recording VCR was maladjusted or the source was in error. Over-the-air broadcasts are usually correct, and black level is regulated by the FCC. Cable channel-to-channel black level errors can be quite dramatic (+/- 3 IRE for old systems). You see a lot of crushed or bright blacks on analog and digital cable TV. Ideally you would adjust for this during recording with a proc amp. A VCR applies automatic gain control (AGC) to white but records black as it was sent.

The waveform program is Vegas (full version).

Computer LCD monitors use 0-255 RGB, are progressive, and differ from TVs in gamma, phosphors and color balance. Without adjustment, correct video will look dark on a computer monitor due to gamma differences. Display cards provide some compensation in the overlay settings, but these are only approximate.
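The range part of that difference (16-235 video levels versus 0-255 computer levels) can be sketched in Python as below. This is a luma-only simplification under the assumption of a straight linear stretch. A real player converts YCbCr to RGB with a color matrix, and this sketch does nothing about the gamma difference mentioned above. `studio_to_full` is a made-up name.

```python
def studio_to_full(level: int) -> int:
    """Stretch a 16-235 video level to the 0-255 computer range.

    Values below 16 or above 235 are clipped, so any detail in the
    buffer zones is lost by this expansion.
    """
    value = round((level - 16) * 255 / (235 - 16))
    return max(0, min(255, value))


if __name__ == "__main__":
    # Below-black, black, mid gray, white, and above-white inputs.
    for level in (0, 16, 126, 235, 255):
        print(level, "->", studio_to_full(level))
```

This is why levels below 16 or above 235 in a capture may simply vanish when the file is previewed on a PC.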

Plasma and LCD TV sets are slowly moving toward replicating TV gamma and color response. Expensive broadcast LCD monitors have correct response. Your best bet is to use an analog monitor for quality assessment, or at least adjust your LCD TV to match the Belle Nuit color chart. Get black and white correct, then adjust gamma to give equal gray scale steps. The THX adjustments are also useful.
