OK, so I finally got the unit (a Canopus ADVC-100) and I'm quite excited. Its most important task will be capturing from VHS, but I also need it to occasionally record satellite TV from my receiver.

So, I initially tried recording TV and have the following questions:

1. The captured video has a very pronounced interlaced look to it (I suspect it's because the source is NOT interlaced). Does this mean that I need to capture using a de-interlace filter?

2. If I burn this interlaced-looking video to DVD, will it still appear interlaced on my TV? What about on my computer?

3. If I'm recording from a VHS source, will I notice the same pronounced interlaced look?

Oh, I'm capturing the DV using Sony Vegas (but am open to using VirtualDub if I have to use a de-interlace filter). Well, that's it... any responses are greatly appreciated!

Thanks!

-

VirtualDub won't capture DV; it's the wrong type of video for it.

You can edit DV in VirtualDub, but you need to install a DV codec first. The Panasonic DV Codec is one option.

DV is interlaced. Don't deinterlace it if you plan to encode it to MPEG or put it on a DVD. Interlaced DV will not look good on a computer monitor; watch it on a TV if you want to see how it will really look. You may also be able to watch it in a PC software player that handles interlaced video properly. I believe VLC is one. -

Well... not sure where you got a progressive source to capture, but VHS and satellite both deliver interlaced video at the TV outputs (coax, RCA, or S-Video). If you've got a regular CRT TV, chances are it will only accept interlaced video, per your broadcast standard.
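To make "interlaced" concrete, here is a minimal Python sketch (an illustration only, not what Vegas or VirtualDub do internally). It treats a frame as a list of scanlines, splits it into its two fields, and line-doubles one field, which is about the crudest possible "bob" deinterlace. The names `split_fields` and `bob` are made up for this example.

```python
def split_fields(frame):
    """Split an interlaced frame (a list of scanlines) into its two fields."""
    top = frame[0::2]      # lines 0, 2, 4, ...
    bottom = frame[1::2]   # lines 1, 3, 5, ...
    return top, bottom


def bob(field):
    """Line-double one field back to full height (a crude 'bob' deinterlace)."""
    doubled = []
    for line in field:
        doubled.append(line)
        doubled.append(line)
    return doubled


# A tiny 4-line "frame". The two fields were captured at different instants,
# so the moving object (X) sits in a different column in each field.
frame = ["..X.", ".X..", "..X.", ".X.."]
top, bottom = split_fields(frame)
print(top)        # scanlines belonging to the first field
print(bottom)     # scanlines belonging to the second field
print(bob(top))   # full-height picture rebuilt from one field only
```

Showing both fields at once on a progressive display is what produces the comb-like look; throwing one field away, as `bob` does here, removes the combing at the cost of half the vertical detail.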

So yes, you probably have interlaced video, and if you want to play it on a regular TV, you want it to stay interlaced. If you want to play it on your PC, then deinterlacing is fine, but it's usually not done until later, perhaps best when encoding (that way you only mess with the content once). -

If you want to play a DVD on the computer, you would want a software player like PowerDVD that can properly handle interlaced video. Then it would look fine.

If Vegas doesn't have a way to increase the audio level, you can strip out the audio and adjust it, then put it back in during encoding or authoring. But try it on a TV first after you encode it to DVD format, it may be fine. A few DVD-RWs may be handy for fine tuning your process.

-

OK, sounds great, I will try that. I'm still a little concerned about the interlaced-looking DVD on a computer, because the DVD will be used commercially and I'm assuming most people would view it with Windows Media Player (which doesn't seem to de-interlace).

Also, I have a CRT TV set up beside my computer as a secondary display. Do you think fine-tuning the color settings while viewing the result on the TV will actually translate into the same color quality once it's encoded, burned, and watched on a TV again? -

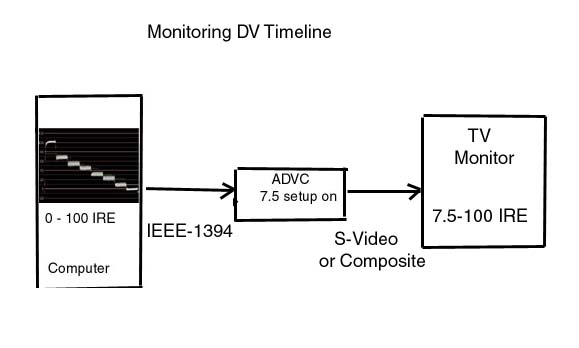

In reply to tarrickb:

You can view the Vegas timeline back through the ADVC-100 to your TV. Use the SMPTE color bar in the Vegas Media generator to adjust the TV levels. Make sure the ADVC-100 SW2 is on. That path will be an exact duplicate of what will be fed to the encoder, and you can view what you are doing in real time without having to look at both fields on the computer monitor.

Set up your monitoring that way and you are doing it like a pro.

See Vegas Help "Monitor, External".

Recommends: Kiva.org - Loans that change lives.

http://www.kiva.org/about -

In reply to redwudz:

I seem to recall reading one of the VirtualDub changelogs at one point and seeing that capture via FireWire is indeed supported now.

* Capture: Capture from DV sources to type-2 DV AVI using the DirectShow DV driver is now supported.

If in doubt, Google it. -

Thanks for the tip about VD, jimmalenko, I'll have to try that. Probably VD 1.6.

-

"I'm still a little concerned with the whole interlaced looking DVD on a computer issue becasue the DVD will be used commercially and I'm assuming most ppl. would view the DVD with windows media player (which doens't seem to de-interlace). "

You're right in that you can't control a user's setup, though you can influence it perhaps using the sort of separate programming they have on many retail DVDs, with autostart & player software etc.

Maybe trivia, but Windows Media Player can't play DVDs on its own; it relies on a separate DVD decoder that is often provided by something like PowerDVD. While it is possible to buy just the decoder portion for Windows Media Player, I think it's far more common to have separate DVD player software, and I wonder how many folks would actually use Windows Media Player instead, given its lack of a DVD interface and functionality.

That said, a retail DVD often contains a film that was shot at 24p. Pulldown is normally added to fake a broadcast-spec signal. You may want to look at IVTC (inverse telecine) and see if that works for you. You'll probably want to do some filtering of the VHS content anyway, depending on the original source recorded to VHS.

"I have a CRT-TV set up beside me to my computer as a secondary dispay, do you think fine tuning the color settings and viewing the result on the TV will actually translate into the same color quality when its encoded, burned and watched on the TV again?"

Yes & no... It'll look the same (or close anyway) on *your* TV, depending to an extent on your DVD player. It's better than not doing it, but it's always best to stay a bit on the safe side with colors, saturation, & levels, including audio (generally in my experience somewhere around -6).

-

"You can view the Vegas timeline back through the ADVC-100 to your TV. Use the SMPTE color bar in the Vegas Media generator to adjust the TV levels. Make sure the ADVC-100 Sw2 is on. That path will be an exact duplicate of what will be fed to the encoder and you can view what you are doing realtime without having to look at both fields on the computer monitor."

Just wondering... what does passing the video through the Canopus ADVC-100 do that is different from hooking the TV up directly to the computer?

-

I'm guessing it bypasses the video card, and whatever influence it would have on the video output.

-

Few PC graphics cards can correctly output interlaced video via their tv-out connections. Using the ADVC-100's tv output function guarantees that the interlaced video will be output exactly as it was input to the device in the first place.

If your sources were originally film you may be able to inverse telecine back to progressive film frames. This will usually play without any interlace artifacts on a computer as well as a TV.

If your video is from a camcorder, it is fully interlaced. You will have to deinterlace if you want to guarantee that anybody watching on a computer will see a picture free of interlace artifacts. Of course, the picture will then be full of deinterlacing artifacts for everyone, everywhere. -

OK, well, I have it set up the recommended way through my ADVC-100 without any problems, but I have one more question.

"Use the SMPTE color bar in the Vegas Media generator to adjust the TV levels."

So this means I'm supposed to adjust the TV levels according to the SMPTE color bar shown in Vegas? -

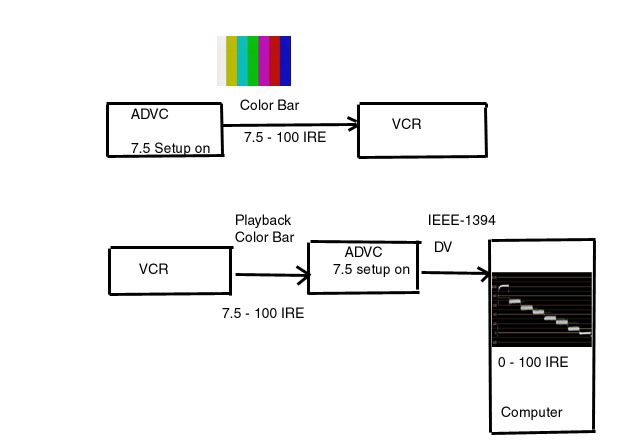

In reply to tarrickb:

Well first, when you captured with the ADVC-100, was SW2 (7.5 IRE setup) in the on position? If so, your NTSC input was captured into DV format with black at 16 and white at 235. In that case, adjusting the TV to the DV "NTSC" SMPTE color bar will give you an accurate view of what the encoder will see. All of your levels and filtering decisions should be made off that TV, not the computer monitor.

These links will show you how to calibrate your TV to the SMPTE color bar.

http://www.videouniversity.com/tvbars2.htm

http://www.indianapolisfilm.net/article.php?story=20040117004721902

If the ADVC-100 SW2 was off during capture, your black will be at level 32 instead of 16. It's better to go back and start over with SW2 on, but if you can't, the entire video will need black level correction (e.g. the Sony Levels filter).
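For anyone who has to fix an SW2-off capture after the fact, the correction is essentially a levels remap. Here is a rough Python sketch of the arithmetic, assuming a simple linear stretch of luma from 32-235 back to 16-235. That is my assumption about what a levels filter would be set to do, not a description of how the Sony Levels filter works internally, and `fix_black_level` is a hypothetical name.

```python
def fix_black_level(y, black_in=32, black_out=16, white=235):
    """Linearly remap luma so black_in lands on black_out while white stays put.

    The result is clamped to black_out..white, so anything below the
    captured black or above nominal white is discarded in this sketch.
    """
    scale = (white - black_out) / (white - black_in)
    corrected = black_out + (y - black_in) * scale
    return int(round(min(max(corrected, black_out), white)))


# Captured black, a mid gray, and nominal white.
for level in (32, 128, 235):
    print(level, "->", fix_black_level(level))
```

A real filter works on every pixel of every frame, and each extra pass of processing costs a little quality, which is one more reason recapturing with SW2 on is the better fix when it's possible.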

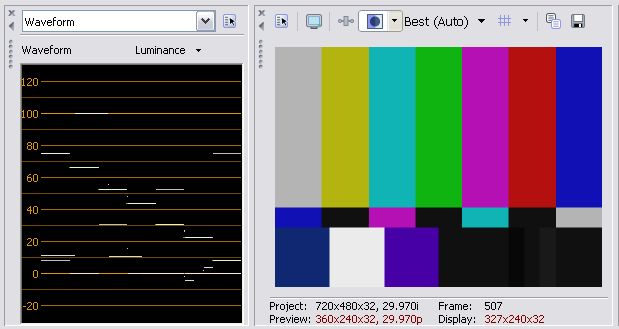

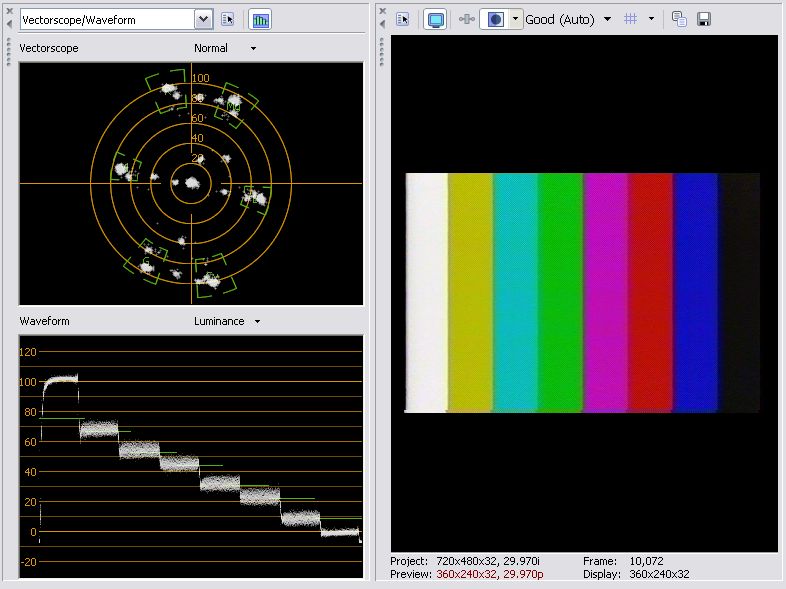

Here is what the Vegas NTSC SMPTE color bar will look like on the DV timeline. 0 = digital 16, 100 = digital 235.

Here is what a calibrated VHS playback will look like if Sw-2 is on. In this case I recorded the ADVC color bar to VHS and then captured the VHS playback with the ADVC-100.

This shows the path. I wasn't using a TBC or procamp here, just a VCR.

-

Wow, thanks edDV... there is certainly lots of info there, which I will sort through when I'm done studying for my Java test!

I have been trying to read up on the differences between 0.0 IRE and 7.5 IRE, and what I have concluded is that if I'm capturing VHS, 7.5 IRE is best, but for any digital source, 0.0 IRE. Does this seem correct?

Also, is there anything else about 0.0 IRE and 7.5 IRE I should know about? -

In reply to tarrickb:

The great thing about the ADVC-100 is the proper conversion from NTSC (7.5 IRE) to DV and back. So long as SW2 is ON, the levels will be handled as I show them above.

Note: DV camcorder passthrough normally does not do this correctly. They capture NTSC black to level 32.

http://pro.jvc.com/pro/attributes/prodv/clips/blacksetup/JVC_DEMO.swf

-

So switch 2 should be left on all the time for me, seeing as I'm only recording from a VCR and a digital cable source?

Also, is it still the same if I record HDTV from my HD receiver (S-Video to the ADVC-100)?

Oh, by the way, congrats to all of you who understand this stuff, because it really confuses me. It seems the more I read, the less I know.

-

Sorry, forgot to mention: I will be encoding and burning to DVD (although I don't think it makes a difference).

-

My HD cable box (Motorola 62xx) has 7.5 IRE setup on the downscaled, letterboxed S-Video output for all channels, including HD. SW2 should usually be ON for NTSC and OFF for PAL.

DV and DVD use 16-235 black-to-white digital levels for both NTSC and PAL. Once you have captured to correct DV levels, encoding to DVD is direct.

An NTSC player will read 16-235 levels and add 7.5 IRE setup for an analog output of 7.5-100 IRE.

A PAL player will read 16-235 levels and output analog 0-100 IRE.
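As a rough illustration of that mapping, here is a small Python sketch that converts a digital luma level to the analog level a player would put out. It assumes an idealized linear mapping (16 is black, 235 is 100 IRE), and `level_to_ire` is a made-up helper name, not something taken from any player or encoder.

```python
def level_to_ire(level, setup=7.5):
    """Convert a digital luma level (16-235 nominal) to analog IRE.

    setup=7.5 models an NTSC player that adds pedestal on output;
    setup=0.0 models a PAL player (or NTSC-J).
    """
    fraction = (level - 16) / (235 - 16)   # 0.0 at black, 1.0 at white
    return setup + fraction * (100 - setup)


print(level_to_ire(16))              # NTSC black
print(level_to_ire(235))             # NTSC white
print(level_to_ire(16, setup=0.0))   # PAL black
print(level_to_ire(32))              # black from a capture made with SW2 off
```

Under this model, a capture whose black sits at level 32 comes out of an NTSC player at a bit over 14 IRE instead of 7.5, so it looks gray rather than black.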

-

OK Mr. edDV, thank you for your patience and posts, however:

I have been reading and reading, and this IRE 0/7.5 setting has confused me so much.

Could you please clearly explain why I should use the 7.5 IRE setting instead of 0 on my ADVC-100 when capturing from either a VHS source or a satellite receiver?

I captured with the 0 IRE setting today, not knowing any better, but didn't really notice anything terribly bad.

When would I use the 0 setting and when would I use the 7.5 setting? (Explained clearly, because remember, I'm beyond confused.) -

In reply to tarrickb:

Generally, you would use 0 IRE if you are in Japan or a PAL region.

If you use 0 IRE in an NTSC area (other than Japan), you will capture NTSC black as DV/DVD dark gray (level 32). When you play back a DVD authored that way on an NTSC DVD player, black will be at about 15 IRE (very gray).

You would need to adjust your brightness every time you played that DVD and adjust it back for a commercial DVD or TV broadcast.

The idea is to produce a DVD that doesn't need TV readjustment every time you play it (i.e. the same adjustment you would use for a commercial DVD or TV broadcast).

http://pro.jvc.com/pro/attributes/prodv/clips/blacksetup/JVC_DEMO.swf

-

OK great, things seem a bit clearer to me now. Off I go to switch my ADVC-100 to 7.5 IRE.

I'm actually going to record footage using both 0 and 7.5 to check out the differences firsthand.

Thanks for all the help. -

"...but vhs & sat both deliver interlaced at the outputs for the tv (co-ax, rca, or s-vid). If you've got a regular crt TV, chances are it'll only take interlaced per your broadcast specs.

So yes, you probably have interlaced video, & if you want to play it on a regular TV, you want it to stay interlaced. If you want to play it on your PC, then deinterlace is cool, but usually not done till later, perhaps best when encoding (that way only messing with the content once)."

How do I overcome it looking interlaced on an LCD TV?

If filters need to be used, which program do you think would do the best job (VirtualDub or Vegas)? -

In reply to tarrickb:

Every LCD TV accepts interlaced input and will either IVTC to progressive or use its internal hardware deinterlacer. Unless you go to great lengths, the TV's deinterlacer will outperform anything you can do.

For computer playback, a deinterlacing software DVD player is used. Normally WinDVD or PowerDVD comes packaged with new computers or DVDR drives. A free alternative is VLC.

If you know your VHS recordings are telecined film, you can manually IVTC with software or let the encoding software try to do it automatically. The result is likely to have errors but will give you a smaller file or a higher average bitrate.
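For the curious, here is a simplified Python sketch of what telecine and IVTC do to the frame sequence. It assumes a clean, unbroken 2:3 pulldown cadence and represents each video frame as a (top field, bottom field) pair of film-frame labels; the function names are made up for this example, and real IVTC software has to detect the cadence from the picture content rather than assume it.

```python
def telecine(film_frames):
    """Apply 2:3 pulldown: every 4 film frames become 5 interlaced video frames."""
    video = []
    for i in range(0, len(film_frames) - 3, 4):
        a, b, c, d = film_frames[i:i + 4]
        # Each video frame is (top_field_source, bottom_field_source).
        video += [(a, a), (b, b), (b, c), (c, d), (d, d)]
    return video


def inverse_telecine(video_frames):
    """Undo 2:3 pulldown, assuming the cadence starts at index 0 and never breaks."""
    film = []
    for i in range(0, len(video_frames) - 4, 5):
        f0, f1, f2, f3, f4 = video_frames[i:i + 5]
        # Frame C only exists split across two mixed frames:
        # its top field is in f3, its bottom field is in f2.
        film += [f0, f1, (f3[0], f2[1]), f4]
    return film


video = telecine(list("ABCD"))
print(video)
print(inverse_telecine(video))
```

The errors mentioned above come in when the cadence is not this clean, for example after an edit was made on the already telecined video, because the fixed five-frame pattern no longer lines up.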

-

Go step by step

-

Not trying to contradict & in case it helps anyone...

The original reason for the 7.5 IRE setup in the US was to reserve the lower voltages in the broadcast signal. As such, it's often ignored for non-broadcast work. That's not to say ignoring it is without peril, but if you google you'll find quite a bit of info on over-shooting both white and black values, how it might improve pictures, and so on. You can hopefully also find info on what your camera shoots, how well it conforms to specs, etc.

DVDs are one area where going out of bounds is fairly common -- on retail releases anyway -- and for a while some players advertised superblack, whereas I believe many more just do it. It was (and is) a problem on some TVs, which need recalibration as posted, but from other threads here and at doom9.org, I gather it isn't necessarily a problem with many newer TVs. If you're not broadcasting, there are no NTSC police that'll knock on your door for not sticking to the letter of the NTSC spec. :P

Editing is one place you have to be careful, as a LOT of software handles color range differently. Vegas generally leaves it alone, but other software and hardware can map to full range or clip the range (often without telling you).
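To show numerically what "map to full range" versus "clip" does to your levels, here is a small Python sketch. It assumes 8-bit luma with a nominal 16-235 range and uses the usual linear stretch to 0-255; both function names are hypothetical, and no particular program is claimed to work exactly this way.

```python
def studio_to_full(y):
    """Stretch studio-range luma (16-235) to full range (0-255), clamping overshoots."""
    expanded = (y - 16) * 255 / 219
    return int(round(min(max(expanded, 0), 255)))


def clip_to_studio(y):
    """Hard-clip luma to the nominal 16-235 range, discarding super-black and super-white."""
    return min(max(y, 16), 235)


# Below-black, black, mid gray, white, and above-white input levels.
for level in (8, 16, 126, 235, 245):
    print(level, studio_to_full(level), clip_to_studio(level))
```

Either operation throws away anything outside 16-235, so if a tool does this silently in the middle of your chain, detail in overshoots is gone before you ever get to adjust levels yourself.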

Editing on a PC is definitely doable without the benefit of the FireWire out to a TV monitor. It's nice, but there's a lot to be said for properly calibrating a CRT PC monitor to handle graphics and video. Just as much is to be said about the physical environment, room lighting, etc. for both CRTs. IMO the most important thing is a bit of restraint. Calibrating your setup should be done -- no question -- but it's important to realize that if your work isn't just for you, most TVs are out of calibration, many folks listen through their TV's blown-out 3" speaker, and most TVs are not ideally located with regard to lighting. And there is no set-in-stone standard (that's adhered to, anyway) for what any DVD player will output regarding color specs or range. Calibration is there to make sure you don't add to (or cause) problems.

Also be careful not to treat software tools as if they were a gold standard. For example, the Vegas docs themselves say their measurement tools are there to assist in reducing differences between scenes. Yes, it's better than nothing; no, it's not as accurate as hardware monitoring. Google to get various opinions from industry pros. -

OK, here are some video scopes showing some of my results:

1. Shows video captured at the correct 7.5 IRE setting

2. Shows video captured at the incorrect 0.0 IRE setting

3. Shows video captured at the correct 7.5 IRE setting, with some levels adjustment in Vegas

I thought these pics would give people a good indication of what is happening.

Please tell me which looks best (picture 1 vs. 3)! -

Also, I'm wondering why my whites seem to peak before 100. Should I be worried about this?
