Just tried a 40 second DV clip to HD with modified settings from above:

- Hybrid took 30 minutes to output the file. Is this a usual sort of time?

Also, I can't get H264s to export using any settings I've tried. It crashes every time, even if I manage to get no errors when adding to the queue. ProRes is fine, but would be nice to only need one step to create a final file to send to clients. Any advice please?


Last edited by Fryball; 3rd Feb 2021 at 05:29.

-

Hybrid has a cut option which uses trim in Vapoursynth.

You can enable it through "Config->Internals->Cut Support" (make sure to read the tool-tips); this enables additional controls in the Base-tab.

Quote: "Also, I can't get H264s to export using any settings I've tried. It crashes every time, even if I manage to get no errors when adding to the queue. ProRes is fine, but would be nice to only need one step to create a final file to send to clients. Any advice please?"

No clue what you are doing, no clue about the error.

-> read https://www.selur.de/support and either post here in the Hybrid thread or over in my own forum with details.

CU Selur

users currently on my ignore list: deadrats, Stears555

-

There is no real "GUI" for vapoursynth; the closest thing would be vapoursynth editor (vsedit), where you can preview scripts and play them. Also you can benchmark scripts and determine where bottlenecks are

You can use Trim in the script to specify a range

eg. frames 100 to 300 inclusive

clip = core.std.Trim(clip, first=100, last=300)
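To make the "inclusive" part concrete, here is a small plain-Python sketch with no VapourSynth dependency. `trim_inclusive` is a hypothetical helper that only mimics the first/last semantics on an ordinary list, and the 25 fps figure is an assumption (PAL DV), not something stated in the thread.

```python
def trim_inclusive(frames, first, last):
    """Return frames first..last with both ends included (hypothetical helper)."""
    if first < 0 or last < first or last >= len(frames):
        raise ValueError("need 0 <= first <= last < len(frames)")
    # Python slices exclude the end index, so add 1 to keep frame `last`.
    return frames[first:last + 1]


def duration_seconds(frame_count, fps):
    """Playback time of frame_count frames at a constant frame rate."""
    return frame_count / fps


frames = list(range(1000))               # stand-in for a 1000-frame clip
kept = trim_inclusive(frames, 100, 300)
print(len(kept))                         # 201 frames, not 200
print(duration_seconds(len(kept), 25))   # length in seconds at an assumed 25 fps
```

The off-by-one is the usual trap: frames 100 to 300 inclusive is 201 frames.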

It seems slow. What is your CPU and GPU % usage during an encode?

It might be your GPU's OpenCL performance causing a bottleneck. Is it a discrete GPU or an Intel GPU on the MacBook? If it's an Intel GPU, I suspect znedi3_rpow2 might be faster in your case than OpenCL using nnedi3cl_rpow2. When I check on a Windows laptop, it's a few times slower using the iGPU than a discrete GPU or CPU (in short, iGPU OpenCL performance is poor).

You can use vsedit's benchmark function to optimize the script. You have to switch out filters, play with settings, remeasure. Ideal settings are going to be different for different hardware setups

I don't use Hybrid, but the ffmpeg demuxer also makes a difference: -f vapoursynth_alt is generally faster than -f vapoursynth when more than 1 filter is used.

Also "DV" is usually BFF, and you set FieldBased=1 (BFF), but you have TFF=True in the QTGMC call. Usually the filter argument overrides the frame props
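A rough sketch of that precedence rule in plain Python. The `_FieldBased` values (0 = progressive, 1 = BFF, 2 = TFF) follow the VapourSynth frame property convention; `effective_tff` and `field_order_conflict` are hypothetical helpers written for illustration and are not part of QTGMC or VapourSynth.

```python
# _FieldBased frame property values (VapourSynth convention).
PROGRESSIVE, BFF, TFF = 0, 1, 2


def effective_tff(field_based, tff_arg=None):
    """Field order a filter would end up using.

    An explicit argument wins; otherwise fall back on the frame property.
    Returns True for TFF, False for BFF, None if nothing says interlaced.
    """
    if tff_arg is not None:
        return bool(tff_arg)
    if field_based == TFF:
        return True
    if field_based == BFF:
        return False
    return None


def field_order_conflict(field_based, tff_arg):
    """True when the explicit argument contradicts the frame property."""
    if tff_arg is None or field_based == PROGRESSIVE:
        return False
    return bool(tff_arg) != (field_based == TFF)


# The case described above: prop says BFF, the filter call says TFF=True.
print(effective_tff(BFF, tff_arg=True))         # argument wins
print(field_order_conflict(BFF, tff_arg=True))  # the two disagree
```

If the two disagree, one of them is wrong for the source, and a wrong field order typically shows up as jerky back-and-forth motion after deinterlacing.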

Also, there is no 601=>709 matrix conversion. Usually HD is "709" by convention, otherwise the colors will get shifted when playing back the HD version
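The size of that shift can be sketched with a few lines of plain Python. This is only an illustration under simplifying assumptions: normalized floating point values, no limited/full range scaling, no rounding to 8 bit. The Kr/Kb coefficients are the standard BT.601 and BT.709 ones; the function names are made up for this example.

```python
# Luma coefficients (Kr, Kb) from BT.601 and BT.709.
BT601 = (0.299, 0.114)
BT709 = (0.2126, 0.0722)


def rgb_to_ycbcr(rgb, matrix):
    """Encode normalized RGB to YCbCr with the given (Kr, Kb)."""
    kr, kb = matrix
    r, g, b = rgb
    y = kr * r + (1 - kr - kb) * g + kb * b
    cb = (b - y) / (2 * (1 - kb))
    cr = (r - y) / (2 * (1 - kr))
    return (y, cb, cr)


def ycbcr_to_rgb(ycbcr, matrix):
    """Decode YCbCr back to normalized RGB with the given (Kr, Kb)."""
    kr, kb = matrix
    y, cb, cr = ycbcr
    r = y + 2 * (1 - kr) * cr
    b = y + 2 * (1 - kb) * cb
    g = (y - kr * r - kb * b) / (1 - kr - kb)
    return (r, g, b)


def convert_matrix(ycbcr, src, dst):
    """Re-encode YCbCr from one matrix to another by going through RGB."""
    return rgb_to_ycbcr(ycbcr_to_rgb(ycbcr, src), dst)


red = (1.0, 0.0, 0.0)
sd = rgb_to_ycbcr(red, BT601)   # what an SD (601) source stores for pure red

# Upscaled to HD without conversion, then decoded as 709: colors shift.
wrong = ycbcr_to_rgb(sd, BT709)
# With a 601=>709 conversion first, the same 709 decode is correct again.
right = ycbcr_to_rgb(convert_matrix(sd, BT601, BT709), BT709)

print([round(c, 3) for c in wrong])
print([round(c, 3) for c in right])
```

In a real script this is done by the resizer or a colormatrix filter on the whole clip; the point here is only that the stored YUV values have to change, which a flag alone does not do.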

I would post in another thread or selur's forum, because this is the wrong thread to deal with those topics.

-

This thread was hilarious to read

but you nailed the point right there. You either spend $200 to get 80%+ of the result with one click, or you spend countless hours doing trial-and-error in order to get 100% of the result for free. That's all there is to it. I don't think "AI" was ever meant to do anything better than a human can manually. At the end of the day, what would I prefer to do? Spend 2 hours learning about kubernetes deployment or spend that time producing multiple clips and mucking around with Avisynth plugins? Yep, I paid $200 and spent that extra free time deploying plex on a kubernetes cluster, which is more fun and interesting.


-

Quote: "Usually HD is "709" by convention, otherwise the colors will get shifted when playing back the HD version"

Only if you didn't flag your output correctly and/or the playback device ignores the playback flag.

-

Flagging does not change the actual colors in YUV, but you should do both and flag it properly too - that's best practices.

When you upscale SD=>HD, typically you perform a 601=>709 colormatrix or similar conversion. When you perform a HD=>SD conversion, you typically perform a 709=>601 colormatrix (or equivalent) conversion.

-

Quote: "Flagging does not change the actual colors in YUV,..."

No, that's not what I wanted to imply. If your colors are correct in 601, you didn't change the colors, and the output is correctly flagged as 601, then the player should still display the colors properly.

Quote: "..that's best practices."

Yes, it's best practice, which was introduced since a lot of players did not honor the flagging of a source but either always used 601 or 709 or, if you were lucky, at least used 601 for SD and 709 for HD. (Some players also did not always honor tv/pc scale flagging, which is why it was 'best practice' to stay in tv scale or convert to tv scale at an early stage in the processing of a source in case it wasn't tv scale.)

Quote: "When you upscale SD=>HD, typically you perform a 601=>709 colormatrix or similar conversion. When you perform a HD=>SD conversion, you typically perform a 709=>601 colormatrix (or equivalent) conversion."

-> I agree that:

a. flagging according to the color characteristics is necessary

b. it is 'best practice' to convert the color characteristics (and adjust the flagging accordingly) when converting between HD and SD.

But this conversion is not needed if your playback devices/software properly honor the color flags.

Cu Selur

Ps.: no clue whether Topaz now properly adjusts the color matrix and flagging,... (an early version I tested with did not)

-

Not what I said...

Flagging is best practices - it's not necessary, but ideal.

Applying the colormatrix transform is far more important today and yesterday. It's critical.

If you had to choose one, the actual color change with colormatrix (or similar) is drastically more important than flags; you can get away with applying the colormatrix transform without flagging (undef) and it will look ok in 99% of scenarios. But the reverse is not true: SD flagging (601/170m/470bg etc.) with HD dimensions is problematic. It might be ok in a few specific software players. It's easy to demonstrate with colorbars.

You should cover your bases, because SD color and flags for HD resolution will cause trouble in many scenarios - web, youtube, many portable devices, NLE's, some players... Flags today are still much less important than the 709 for HD assumption
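The argument can be modeled as a toy decision table in Python. Everything here is an assumption made for illustration: `assumed_matrix` is a made-up stand-in for what a player does, and the size threshold (width >= 1280 or height > 576 means "709") is just one plausible heuristic, not the behavior of any specific player.

```python
def assumed_matrix(width, height, flag=None, honors_flags=True):
    """Which matrix a player decodes with (illustrative heuristic only)."""
    if honors_flags and flag in ("601", "709"):
        return flag
    # Unflagged, or the player ignores flags: guess from the frame size.
    return "709" if (width >= 1280 or height > 576) else "601"


def colors_ok(actual_matrix, width, height, flag=None, honors_flags=True):
    """True when the player's assumption matches the actual YUV data."""
    return assumed_matrix(width, height, flag, honors_flags) == actual_matrix


hd = (1440, 1080)
for honors in (True, False):
    # Converted to 709 but left unflagged: fine either way.
    converted = colors_ok("709", *hd, flag=None, honors_flags=honors)
    # Still 601 data, flagged 601: only fine if the flag is honored.
    flagged_only = colors_ok("601", *hd, flag="601", honors_flags=honors)
    print(honors, converted, flagged_only)
```

Under these assumptions the converted but unflagged HD file survives both kinds of player, while the flagged but unconverted one depends entirely on the player reading the flag.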

Topaz fixed a bunch of bugs with the RGB conversion, it should be ok now for 601/709. I didn't check about the interlaced chroma upscaling bug to see if it was fixed.

-

Just found this interesting colab project: https://github.com/AlphaAtlas/VapourSynthColab

Please do not abuse.

Originally Posted by VapourSynthColab

-

Gigapixel and Video Enhancer use settings derived from machine learning to perform the scaling of images. The difference is in how they re-implement the same process afterward.

Gigapixel works with single images by creating multiple tests and comparing, which is very similar to the original machine learning process used to generate the initial settings used (statistical mappings for tonal and shape areas that are detected). I know because I ran tests, and monitored my system. It also used a DAT file, or a basic data file, which I can only assume was marking the different identified areas of the image before applying the algorithm for each detected area. You can still adjust the blur and the noise level generated in Gigapixel, which really just determines how it weights motion edges and the depth of contrast noise removal, not color noise. With two sliders, you adjust how the final output is handled, not the algorithm itself, which is handled only by the machine-learning-based process.

Video enhancer uses similar starting points but only makes one or two comparisons before applying the adjustment. It also only applies an adjustment to the areas of the data that exist in the frame, since most frames have a little data from earlier frames and a little that matches the next few frames. If you set your output to an image type, it uses detected tonal areas from earlier frames to apply the adjustment to tonal areas, to keep it consistent. If the data in the frames changes rapidly, however, you may be getting some artifacts appearing in the motion blur. That's why there are whole different algorithms to use in the Video app. The first part is the decoder, which affects input quality, the next is the processing style (how much weight to give to before and after frames), and the last part is how to re-encode the image data generated. Even TIFF mode gives you a compressed file, so the manner of compression must be respected.

If you have a lot of motion in your file, you might be better off processing the file in another encoder, doubling the frame rate, and using a FRAME BLENDING algorithm to create intermediate frames. For me, on videos of up to 2 minutes at 30i or 30p as input, running through Media Encoder and re-encoding with frame blending for double the frame rate did create a sad motion effect, but afterward, I just re-encoded again to the original rate and there were very few artifacts, if any at all.

For interlaced media, I've found it more effective to do an old school deinterlace by dropping half the fields and then run the app. YES, it treats all video like an interlace, as part of the algorithm, but not in the same fashion as an interlace. It uses the alternate field to "Guess" the data between the two for the upscale. This is why you are better off re-coding the video in a lower resolution as progressive frame data before you put it into the app.

Apple Compressor used to do a similar comparison to track interlaced motion so that it could then REFRAME the video as Progressive. It took a long time to run, but the results were good. After that, a slight edge blur in After Effects removed the aliasing edge, keeping true to the fuller resolution. Using a blend mode to apply it to the data only in the dark areas kept it to most of the edges; however, an edge detection with a black and white wash, turned into a mask for the same effect on an adjustment layer set to darken or multiply, also had great results. I'm thinking of testing this app again soon, with one of those videos, if I can find them.

-

This repository collects the state-of-the-art algorithms for video/image enhancement using deep learning (AI) in recent years, including super resolution, compression artifact reduction, deblocking, denoising, image/color enhancement, HDR:

https://github.com/jlygit/AI-video-enhance -

Thanks.

From the looks of it it only contains short summaries (in Chinese), but it also contains links to the original papers.

Cu Selur

-

I just created a new post querying comparisons between Topaz and DVDFab Ai, but thought I'd ask something here, having tried Hybrid a few days ago.

One thing that Hybrid does that Topaz doesn't do is allow users to double the frame rate all the time if they so wish. Topaz only does so with a few of its presets. I love Topaz and the way it does things automatically and gives you an option to preview, but I also like to double the frame rate of my lower quality videos to achieve smoother motion, which is another asset with hybrid.

However I also have Vegas Pro and have tried doing something similar with a not so recent version of Twixtor. With Twixtor every time I have tried to find info on how to double the frame rate to achieve a less jerky playback all I can find is people talking about using it for slow motion, and usually with relevance to anime and gaming, which is not what I want! I would maybe consider buying the latest version if it did what I want of it, which is just to get that smooth interpolation, however if Hybrid is just as good...? -

Quote: "Twixtor every time I have tried to find info on how to double the frame rate to achieve a less jerky playback all I can find is people talking about using it for slow motion, ..."

Never used Twixtor, but to me it sounds like the only difference is whether the output frame rate is changed or not after interpolating frames.

Doubling the frame count by interpolation + adjusting playback speed (fps) -> smooth(er) motion during playback.

Doubling the frame count by interpolation + keeping the original speed (fps) -> slow(er) motion during playback.
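The arithmetic behind those two cases, as a short Python sketch. `interpolated_timing` is a hypothetical helper; it assumes the interpolator outputs exactly `factor` times as many frames, and the 40 second / 25 fps clip is just an example figure.

```python
from fractions import Fraction


def interpolated_timing(frame_count, fps, factor=2, keep_fps=False):
    """Return (frames, fps, seconds) after multiplying the frame count."""
    fps = Fraction(fps)
    new_count = frame_count * factor
    # Either raise the playback rate with the frame count, or leave it alone.
    new_fps = fps if keep_fps else fps * factor
    return new_count, new_fps, Fraction(new_count) / new_fps


# A 40 second clip at an assumed 25 fps is 1000 frames.
smooth = interpolated_timing(1000, 25)                # fps doubled as well
slow = interpolated_timing(1000, 25, keep_fps=True)   # fps kept at 25

print(smooth[1], float(smooth[2]))   # 50 fps, still 40 s: smoother motion
print(slow[1], float(slow[2]))       # 25 fps, now 80 s: slow motion
```

Same interpolated frames in both cases; only the frame rate written to the output decides whether you get smoothness or slow motion.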

Cu Selur

-

Just discovered:

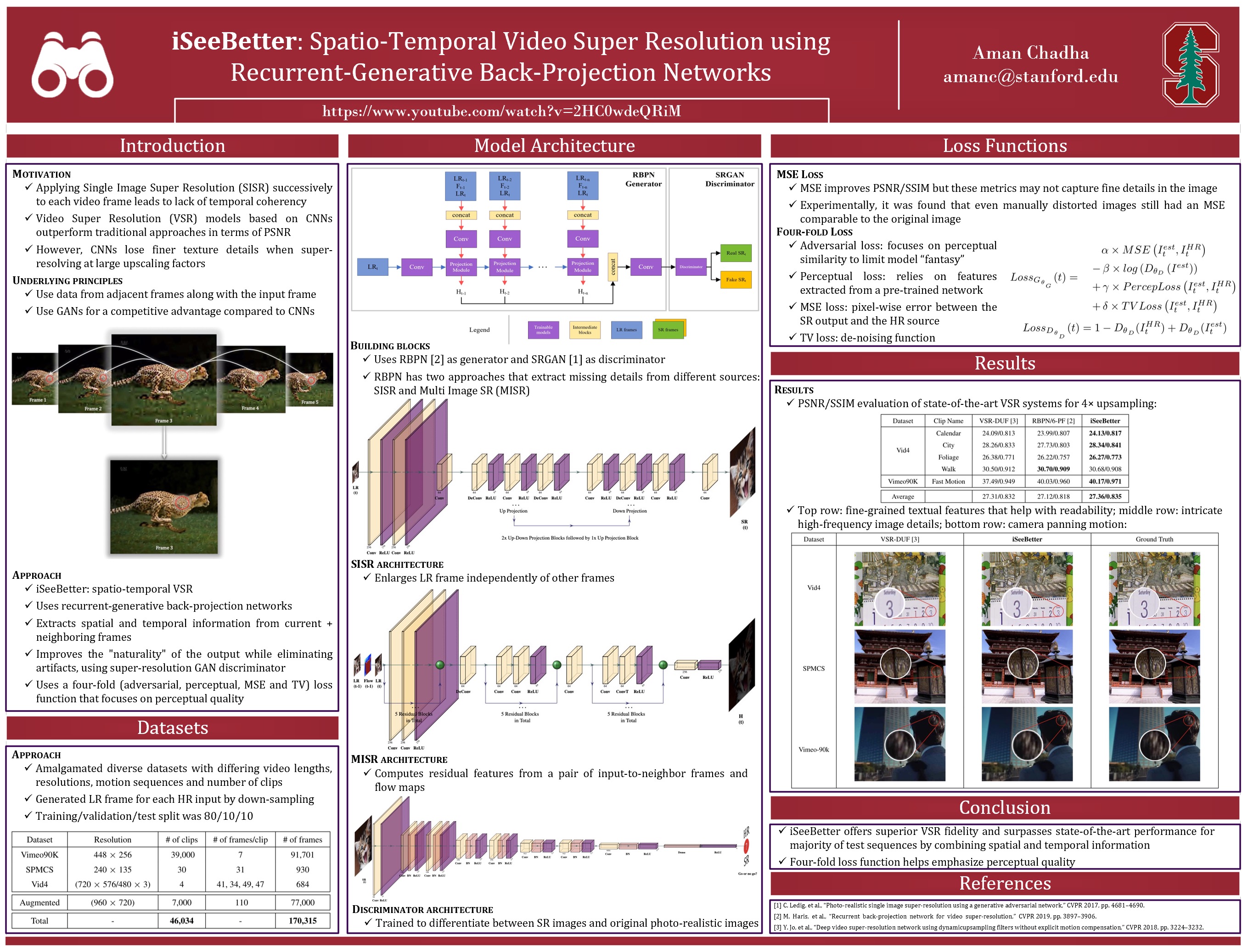

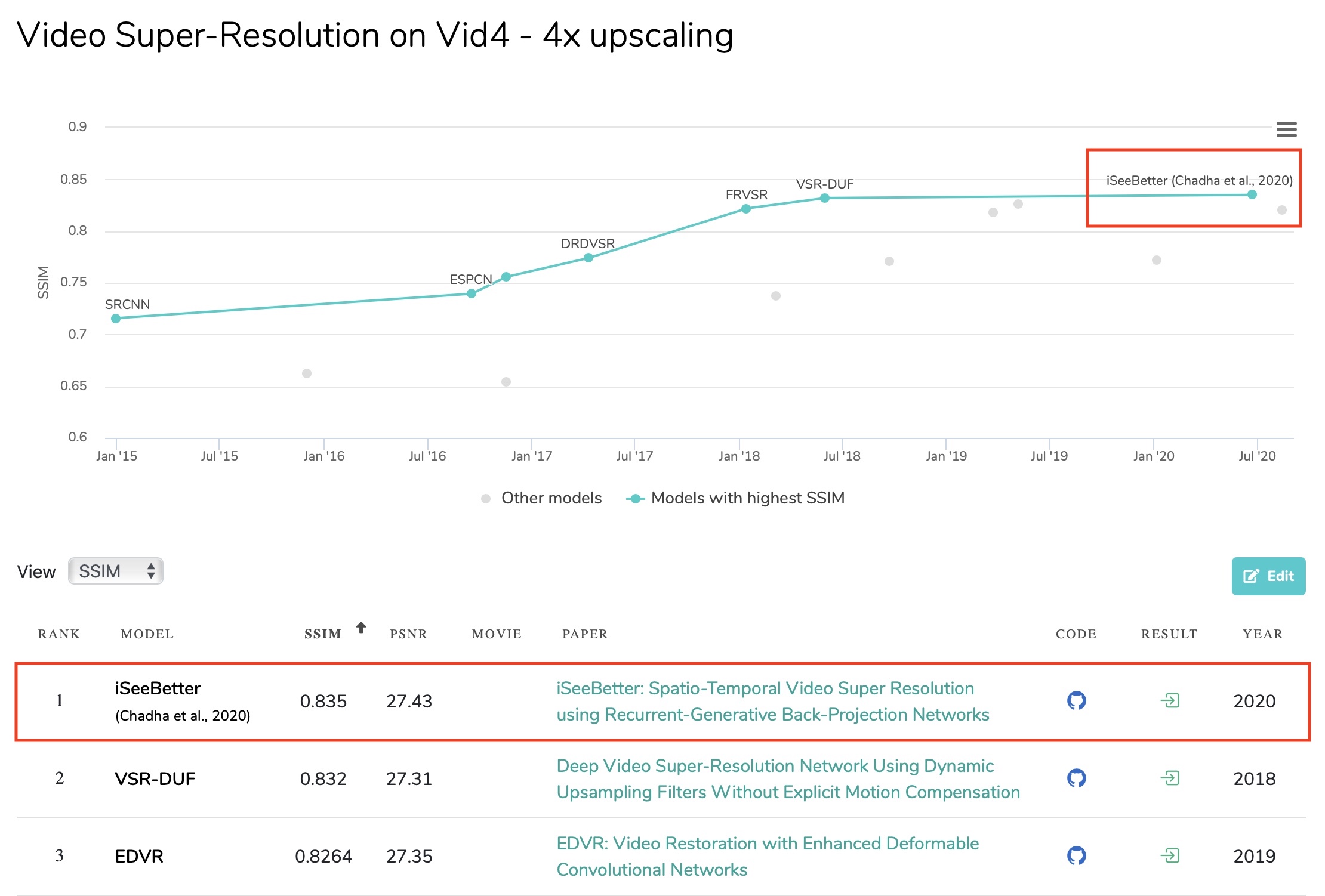

iSeeBetter: Spatio-Temporal Video Super Resolution using Recurrent-Generative Back-Projection Networks | Python3 | PyTorch | GANs | CNNs | ResNets | RNNs | Published in Springer Journal of Computational Visual Media, September 2020, Tsinghua University Press

https://github.com/amanchadha/iSeeBetter -

https://youtu.be/GkJUSbZfamw

i know the video hasn't been detelecined, but the progressive frames prove my point -

Ok, here's another interesting (ISB vs TGAN vs Topaz) video comparison:

https://www.youtube.com/watch?v=m0funrdshXc -

PornHub used AI to remaster the oldest erotic films in 4K

Source: https://www.engadget.com/pornhub-ai-machine-learning-erotica-remaster-4k-180016611.html

Several algorithms were used to restore the films with "limited human intervention," according to PornHub. The process started by reducing noise and sharpening and contrasting images. The videos were boosted to run in 4K at 60 frames per second, and audio was either remastered or a new soundtrack was added. -

Gonna read all of this thread, probably. I'm deep into Topaz VEAI, trying to deal with it. The main thing that I'm fighting is the "plastication" effect. Yes, Topaz can restore details and sharpen, but everything looks terribly unnatural if you have a small resolution and want to make it look professional. Hope there are some other ways.

-

-

@krykmoon, yes, need to correct myself here: Topaz VEAI can recreate the visibility of details and sharpen that visibility. And it makes everything plastic. So I use it in small doses, using those presets that do not plasticize everything.

-

-

OK, here's another interesting (but unfortunately for still images only and sometimes buggy) git+colab: https://github.com/titsitits/open-image-restoration

Quote: "Open-Image-Restoration Toolkit: A selection of State-of-the-art, Open-source, Usable, and Pythonic techniques for Image Restoration"

Hope that stimulates the progress.

-

This is exactly the problem I had with it. It would make all low rez video look plastic, with no real way to adjust it. I tried every setting within Topaz AI and quickly realised it was not suitable for low resolution video. Maybe its ok for higher rez, but it sucks with SD.

That's when I did more research and found DigitalFAQ and Videohelp forums. It has taken dozens of hours of reading to even get a rudimentary level of knowledge though, as everything here is script based, and all workflows differ from user to user. -

Quote: "with no real way to adjust it"

Yes, that's the downside of machine learning based filtering. You need differently trained models; there are not multiple parameters which allow you to change the outcome.

So you would need tons of differently trained models to get some more options and you would still not have as much control over it as you do with normal algorithms.

btw. I upscaled the suzie clip from 176x144 to 704x576 using VSGAN and a BSRGAN model and uploaded the file to my GoogleDrive in case someone is interested. (Taking into account that BSRGAN is meant for image not video upscaling, I think the result is impressive.)

(Would be nice to have support for some additional ml based video upscaling support in Vapoursynth and a faster gpu to use it.)

Cu Selur

-

Recent related Topaz threads

https://forum.videohelp.com/threads/401919-Topaz-s-video-enhance-ai-is-rubbish

https://forum.videohelp.com/threads/401910-Topaz-products?highlight=veai

Some have good info not overlapping with this thread.

Note that none of these are Topaz love fests. Topaz is crap, and more people are finally saying so.

Want my help? Ask here! (not via PM!)

FAQs: Best Blank Discs • Best TBCs • Best VCRs for capture • Restore VHS -

Quote: "suzie clip"

OK, not so much of plastic, only a bit, hand lines are similar to those enhanced with VEAI.

-

Yes, so right now you have to pre and post process properly for NN scaling (including VEAI), or chain together several different models trained for different purposes.

Quote: "btw. I upscaled the suzie clip from 176x144 to 704x576 using VSGAN and a BSRGAN model and uploaded the file to my GoogleDrive in case someone is interested. (Taking into account that bsrgan is meant for image not video upscaling I think the result is impressive. (Would be nice to have support for some additional ml based video upscaling support in Vapoursynth and a faster gpu to use it.)"

BSRGAN seems to be trained for some noise input and smooths over spatial details. As you mentioned, it's a single image scaling algo => flickering details and temporal inconsistencies for video, such as hair flicker.

To be fair, if you used NNEDI3_rpow2 or any traditional scaler like Lanczos, Spline(x) , etc... - you get flickering and aliasing as well. The flicker is more clear with a NN scaler, because fine details are more clear. You can add additional processing like temporal QTGMC in progressive mode to reduce the flickering at the expense of smoothing over fine details - it's counter productive for upscaling but some post processing is almost always necessary for 4x single frame upscalers for video

For low resolution, fairly clean sources, you can use Tecogan - it's probably the most temporally consistent upscaler that is publicly available. Significantly less frame to frame flicker in things like hair detail. VEAI has the Artemis-HQ model, which smooths things over temporally as well. Artemis-HQ resembles Tecogan in terms of temporal consistency. YMMV. Both shift the picture slightly, so you have to counter shift. In the suzie example, Tecogan looks ok, less plastic, but there is some sharpening haloing around the phone speaker. The publicly available pre-trained generic Tecogan model is not the same one from the paper - some of the training sources were taken down and are no longer available. And I haven't seen a public repository for Tecogan models, but it has good potential for video. The public model was trained on low resolution sources, and the ground truth was SD as well, so Tecogan does not work as well on higher resolution video. Compare to ESRGAN or other frameworks that had HD ground truth.

Compare these for temporal consistency to BSRGAN (or any ESRGAN model)

suzie - tecogan+pp

suzie - veia_gaia_hq+pp

"plastic" generally occurs when over denoising and sharpening occur together.

Tecogan in this example has more fine details, and you could argue it looks less "plastic". Another general processing method to reduce the "plastic" appearance is to add fine grain or noise, but it's better to not denoise or sharpen as much in the first place.

"hand lines" - did you mean the flickering or line thinning ?
