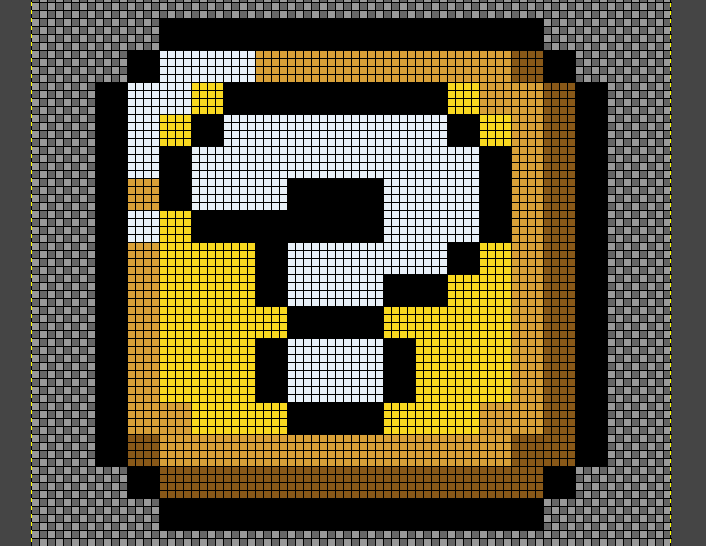

I'm using this as a source:

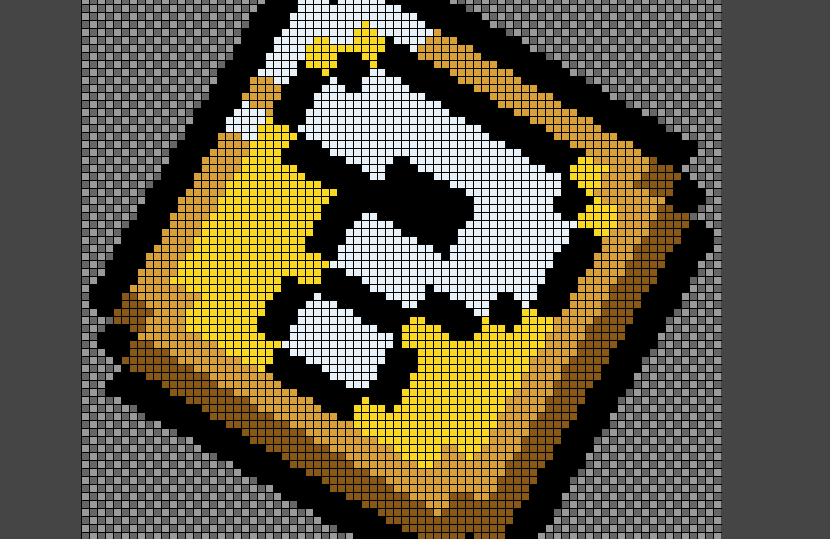

I'm trying to upscale this while keeping the pixels as raw and sharp as possible, but it always generates pixel distortions:

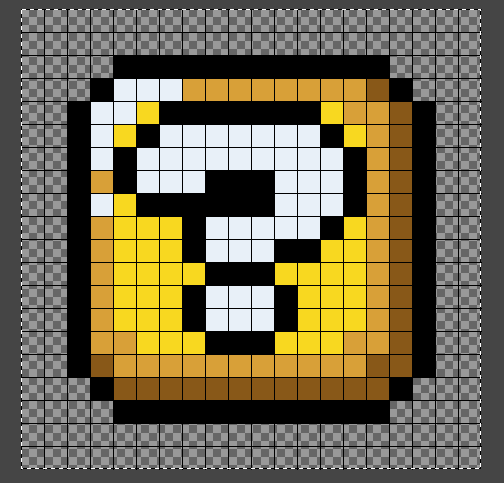

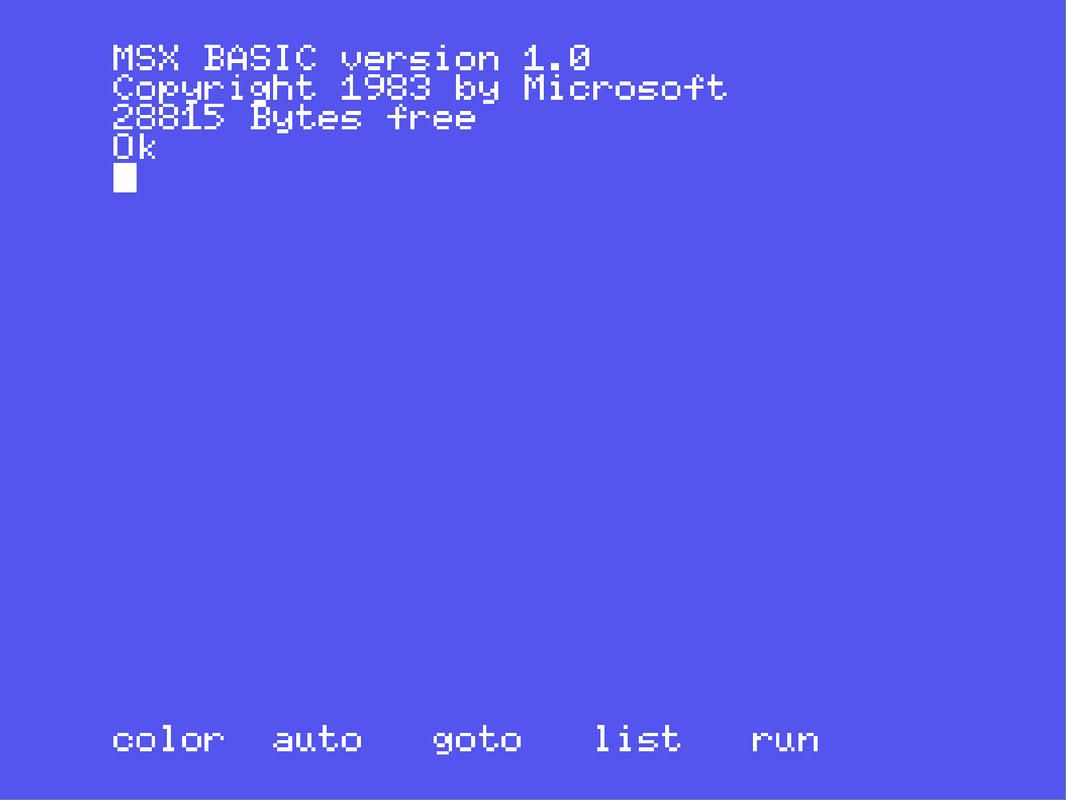

Is there a better option out there to upscale the screen like this:

The above screen was generated by the emulator itself, and it looks much crisper than this.

I searched the internet and the best I could find was depoint:

Code:

    # https://forum.doom9.org/showthread.php?t=170608
    function depoint(clip c, int target_width, int target_height) {
        # c.PointResize(w, h).depoint(c.width(), c.height()) gives exactly the same pixels as c
        c
        Assert(target_width <= width() && target_height <= height(), "depoint cannot be used for upscaling")
        x = width() == target_width ? 0 : width() / (2.0 * target_width) + 0.5
        y = height() == target_height ? 0 : height() / (2.0 * target_height) + 0.5
        PointResize(target_width, target_height, x, IsRGB() ? -y : y)
    }

It's a great function, but I can't get the whole screen to work out right:

Code:

    Import("depoint.avs")
    ImageSource("msx.png")
    PointResize(1400,1080)
    depoint(624,480)

Any suggestions?

Thank you.

-

Point Resizing only gives perfect results with integer multiples: 2x, 3x, 4x, etc.

Code:PointResize(width*4, height*4)

-

-

It gave a pixel-perfect upscale:

Code:

    # https://forum.doom9.org/showthread.php?t=170608
    function depoint(clip c, int target_width, int target_height) {
        # c.PointResize(w, h).depoint(c.width(), c.height()) gives exactly the same pixels as c
        c
        Assert(target_width <= width() && target_height <= height(), "depoint cannot be used for upscaling")
        x = width() == target_width ? 0 : width() / (2.0 * target_width) + 0.5
        y = height() == target_height ? 0 : height() / (2.0 * target_height) + 0.5
        PointResize(target_width*4, target_height*4, x, IsRGB() ? -y : y)
    }

Code:

    Import("depoint.avs")
    ImageSource("msx.png")
    PointResize(1400,1080)
    depoint(624,480)

All we need is to control the frame size.

-

It's impossible to use arbitrary dimensions and get "perfect" results. Only point resize (nearest neighbor) with integer multiples does that, because it is pure pixel duplication.

Otherwise you have some type of interpolation going on. Interpolation involves compromises.

Consider this example: a 2x1 image, a 1x1 white square next to a 1x1 black square. I want to resize it to 3x1. It's impossible to render it "perfectly". If you round to nearest, you will get uneven dimensions like 2 white, 1 black, or 1 white, 2 black. Or interpolation might give you 50% grey for the middle pixel, or something else.

-
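The 2x1 example can be checked numerically. The Python sketch below is only an illustration and not how AviSynth implements its resizers: `nearest_row` and `linear_row` are hypothetical helpers that resample a single row of grey values using pixel-center mapping.

```python
def nearest_row(row, new_width):
    """Nearest-neighbor resample of one row (pixel-center mapping, integer math)."""
    old_width = len(row)
    return [row[((2 * d + 1) * old_width) // (2 * new_width)]
            for d in range(new_width)]


def linear_row(row, new_width):
    """Linear interpolation between source pixel centers, clamped at the edges."""
    old_width = len(row)
    out = []
    for d in range(new_width):
        pos = (d + 0.5) * old_width / new_width - 0.5  # position in source coordinates
        pos = min(max(pos, 0.0), old_width - 1.0)      # clamp to first/last pixel center
        left = int(pos)
        right = min(left + 1, old_width - 1)
        frac = pos - left
        out.append(round(row[left] * (1 - frac) + row[right] * frac))
    return out


row = [255, 0]               # one white pixel next to one black pixel
print(nearest_row(row, 4))   # integer 2x: both pixels are duplicated evenly
print(nearest_row(row, 3))   # 1.5x: one colour ends up wider than the other
print(linear_row(row, 3))    # 1.5x with interpolation: the middle pixel turns grey
```

With an integer factor every source pixel gets the same number of output pixels; with 1.5x either the widths become uneven or a new in-between value appears, which is the compromise described above.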

There is a special group of resizers dedicated to so-called pixel art: https://en.wikipedia.org/wiki/Pixel-art_scaling_algorithms

Personally, I frequently use ffmpeg with the xbr filter as a crude antialiasing step (it works OK in many cases for low-res targets).

How to deal with an uncommon video resolution when your target is a standard video resolution (i.e. something like 1280x720 or 1920x1080)? I can imagine two ways:

- First, you can use a point resizer to enlarge the video quite massively (for example, to 4 times the target size) and then downsize with a decent regular resizer (spline or some other non-ringing, kernel-based downsizer).
- Or you can resize the video to a size smaller than the target with a pixel-art resizer or point resizer, and then pad the remaining area with a solid color.
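For the resize-then-pad approach, the arithmetic can be sketched as follows. This is a rough Python illustration under assumptions: `integer_fit` is a hypothetical helper, the 320x240 source size is made up for the example, and square pixels are assumed (display aspect ratio is not handled).

```python
def integer_fit(src_w, src_h, target_w, target_h):
    """Largest whole-number scale that fits the target frame, plus border sizes."""
    scale = min(target_w // src_w, target_h // src_h)
    if scale < 1:
        raise ValueError("source is larger than the target frame")
    out_w, out_h = src_w * scale, src_h * scale
    pad_x, pad_y = target_w - out_w, target_h - out_h
    left, top = pad_x // 2, pad_y // 2
    return {
        "scale": scale,
        "size": (out_w, out_h),
        # left, top, right, bottom padding needed to reach the target frame
        "borders": (left, top, pad_x - left, pad_y - top),
    }


# Hypothetical 320x240 source placed inside a 1920x1080 frame
print(integer_fit(320, 240, 1920, 1080))
```

The resulting scale and border sizes could then be passed to a point resize followed by a border-adding filter (in AviSynth, `PointResize` and `AddBorders`), so that every source pixel becomes an identical square block.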

In the past I experimented a bit with this, and I also got nice results from a heavily oversampled (point-resized) image with a median filter applied, then scaled down with a regular, non-ringing resizer.

I should also mention Waifu2x.

Alternatively, you can try vectorizing the image and then rasterizing it to the required target (but I don't expect good results in real time).

Last edited by pandy; 11th Mar 2019 at 04:22.

-

The 2496x1920 image is not perfectly upscaled. To achieve that ~8.9x upscale, most pixels have been enlarged by 9x, but some only by 8x. Look at the ends of the letter S in MXF. The single white pixel at the top right has become a 9x9 pixel block, while the single white pixel at the bottom left has become an 8x8 block. You didn't notice it because the difference between 8 and 9 is harder to see than the difference between, say, 2 and 3 with a 2.x upscale.
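The mix of 8-pixel and 9-pixel blocks can be reproduced with a few lines of Python. This is a sketch under assumptions: `block_widths` is a hypothetical helper using pixel-center nearest-neighbor mapping, and the source width of 280 is a guess chosen only because it gives roughly the ~8.9x factor mentioned above.

```python
from collections import Counter


def block_widths(src_w, dst_w):
    """Number of output pixels each source pixel occupies under nearest-neighbor."""
    hits = Counter(((2 * d + 1) * src_w) // (2 * dst_w) for d in range(dst_w))
    return [hits[s] for s in range(src_w)]


# Assumed 280-pixel-wide source enlarged to 2496 (about 8.9x): mixed block widths
print(Counter(block_widths(280, 2496)))

# Exact 4x enlargement (624 -> 2496): every block has the same width
print(Counter(block_widths(624, 2496)))
```

With a non-integer factor the block widths alternate between the two neighbouring integers; only an integer factor gives uniform blocks.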

-

Thank you poisondeathray

You got it!

Yes, I'm trying to render some screens to create pixel art and render a compilation to use as a screen bezel. I've done some tests of my own and found that overlaying fake scanlines on the video masks some of the distortions. I'm uploading a video sample of it and a mosaic rendering.

Man, I thought it would be much easier.

Thank you for the links.

Won't this break the DAR?

This is what my screen shows

-

Simulating CRT artefacts is usually done in real time by a dedicated pixel shader (I assume from the details already given that this is not possible, as the target is some mobile app?).

These threads may be interesting for you: http://eab.abime.net/showthread.php?t=95969, https://www.reddit.com/r/emulation/comments/5jh75w/accurate_crt_shaders/ and http://emulation.gametechwiki.com/index.php/CRT_Shaders

-

Yes, thank you!

I use pixel shaders myself:

Code:

    bgfx_path bgfx
    bgfx_backend d3d11
    bgfx_debug 0
    gamma 0.800
    shadow_mask_alpha 0.25
    gamma 0.8
    shadow_mask_alpha 0.25
    bgfx_screen_chains crt-geom-deluxe
    shadow_mask_texture shadow-mask.png
    shadow_mask_x_count 12
    shadow_mask_y_count 6
    shadow_mask_usize 0.5
    shadow_mask_vsize 0.5
    scanline_alpha 0.50
    scanline_size 1.0
    scanline_height 1.0
    scanline_variation 1.0
    scanline_bright_scale 2.0
    defocus 0.55,0.0
    saturation 1.06
    scale 0.90,0.90,0.90
    bloom_scale 0.35
    # Example for BT.601 525-line Color Space
    chroma_mode 3
    chroma_a 0.630,0.340
    chroma_b 0.310,0.595
    chroma_c 0.155,0.070
    chroma_y_gain 0.2124,0.7011,0.0866

I'm attaching a sample using that pixel shader.

The problem I see with shaders is that I could not mimic the look of the RGB monitor I had. It looked like the Sony PVM-20M2MDU in the video below, but it was gray and had a Japanese SCART input.

https://www.youtube.com/watch?v=rzXvyid9FVg

The monitor's performance was very similar to that Sonic video: super sharp, with vibrant colors, and you could see the lines across the whole screen. The white levels were a soft, milky white. It was gorgeous. Some people don't like it; I'm using these scanlines now just for looks.

-

-

I didn't know about RetroArch.

https://www.retroarch.com/index.php?page=shaders

Thank you Claudio.

-

Will this ever come as a video upscaling filter?

Reference:

Distortion:

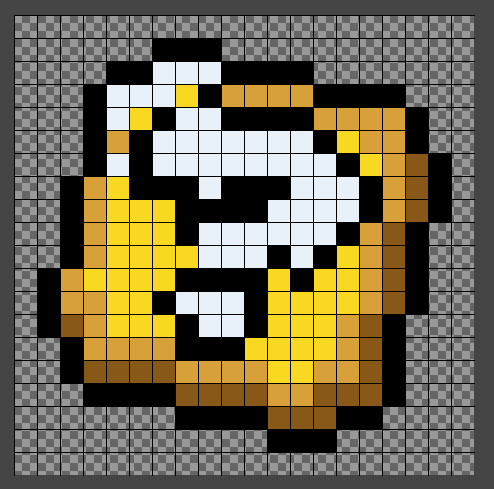

New solution?

In action:

https://www.youtube.com/watch?v=6VrzJ6Y1kjQ

Samples:

http://www.framecompare.com/image-compare/screenshotcomparison/EB9CNNNU

https://forum.videohelp.com/images/imgfiles/a/aApu3Ba

Source:

https://www.reddit.com/r/emulation/comments/bdltmo/hd_mode_7_mod_for_bsnes_v1071_beta_1/

-

Just point resize before rotating.

Code:

    function MyRotate(clip c, float angle)
    {
        c.Rotate(angle)
        Subtitle(string(angle))
    }

    ImageSource("tiny.png", start=0, end=360, fps=30.0)
    PointResize(width*24, height*24)
    ConvertToRGB32()
    Animate(0, 360, "MyRotate", last,0, last,360)

Starting image:

Resulting upscaled animation:

Last edited by jagabo; 16th Apr 2019 at 18:03.

-

It's only possible in-game, because data is missing once you render out an image (i.e. don't expect to apply it to a generic, already rendered image).

It knows which areas to treat differently (e.g. overlays, HUD vs. texture areas like the ground, foreground vs. background layers). Notice how the foreground character or player isn't antialiased or treated any differently. Automatically generated mask areas on an already rendered image would not be as accurate or precise.

http://www.framecompare.com/image-compare/screenshotcomparison/EB9MNNNU

(framecompare.com is a good web-based comparison tool; you can use the number keys to swap images, like in AvsPmod.)

It's the same idea for 3D applications and renders. Processing inside the render engine (scaling, denoising, antialiasing, or any other type of processing) can be much better than processing afterwards, because you have access to exact data and measurements, such as internal motion vectors, normals, albedo, and object or mask areas. (You can usually render out that info in separate passes in 3D applications for use in post-processing in other applications, but I doubt a SNES emulator would be able to.)
