What is the best way to enhance the contrast/black level while staying above 0 IRE on the waveform scope? Is the levels filter the best to use?

How do I keep above 0 IRE and still enhance black level/contrast? Also, if the camcorder fades to black, should that fade be set at 0 IRE?

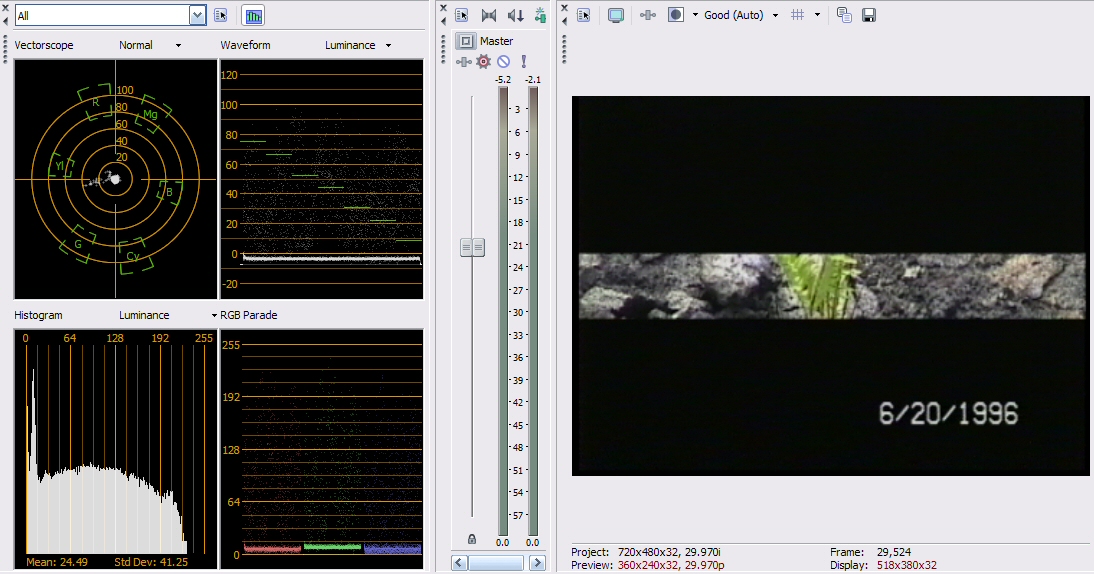

Original capture

Levels Filter lower black level

camcorder fade to black

camcorder fade to black lowered

-

The waveforms look correct. Are you sure your monitor (TV not computer monitor) is calibrated?

If you still don't have adequate dark areas on your display TV, the adjustment needed is gamma. Gamma weights the gray scale to the dark or light areas. Test your gamma adjustments on various displays. Your problem could be with that monitor only.

-
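To make the gamma suggestion concrete, here is a minimal Python sketch, not taken from any particular editor's filter. It assumes 8-bit luma with black at 16 and nominal white at 235; the name `apply_gamma` and the exponent convention are illustrative assumptions (many tools define their gamma control as the inverse exponent). The point is that a gamma curve pins the black and white points and only bends the values in between, so shadows can be deepened without pushing anything below black.

```python
BLACK, WHITE = 16, 235  # assumed 8-bit black and nominal white levels


def apply_gamma(y, gamma):
    """Bend the midtones of one 8-bit luma value; black and white stay put.

    In this sketch gamma > 1 darkens shadows and midtones, gamma < 1 lifts them.
    """
    # Leave undershoots and overshoots untouched rather than clipping them.
    if y <= BLACK or y >= WHITE:
        return y
    # Normalise so BLACK maps to 0.0 and WHITE maps to 1.0.
    t = (y - BLACK) / (WHITE - BLACK)
    return round(BLACK + (WHITE - BLACK) * t ** gamma)


# Darken the shadows of a few sample values with a hypothetical gamma of 1.3.
samples = [16, 40, 126, 200, 235]
print([apply_gamma(y, 1.3) for y in samples])
```

Because the end points do not move, the waveform floor stays where it was and only the distribution between black and white changes.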

When starting to color correct a piece of footage, the first task is to make sure that black and white levels are set properly. When I say "correct" levels, I mean appropriate for the particular footage being corrected. I do this before trying to take out any color cast, match the color to other footage, or do any "creative" color correction. By first standardizing the black and white levels of all footage it becomes much easier to do subsequent color correction consistently since you're always starting from the same point.

-

Replying to Marvingj: I agree. Luminance must be fixed first (levels and gamma), but before that you must be able to trust the calibration of the monitor.

-

Replying to Knightmessenger: If you have it in 4.0, look under View for VideoScopes.

-

Replying to Marvingj: How is that best achieved? Color Corrector, low and high droppers? What I'm assuming is that you would "color correct" black point and white point with Color Corrector, do levels, then add another Color Corrector to do the actual color corrections. Is that what you mean?

Cheers!

-

Yes....

-

Replying to Marvingj: What are you looking for with the levels setting? When I mess with levels I'm usually looking at the histogram and getting as even a spread as possible while eyeballing for the best picture. Sounds like I *should* be looking at the luminance waveform?

-

To make brightness consistent, early TV engineers established minimum and maximum levels for the brightness of on-screen pixels and created tools to standardize the entire television industry. The original analog measurement standard uses a fundamental brightness unit called an IRE (Institute of Radio Engineers).

For NTSC television in the US, the bottom limit of picture brightness was set at 7.5 IRE. This defines the black level or pedestal of a video signal as seen on a waveform monitor. At the top of the scale, 100 IRE means full brightness, full white or the white level (Note: not white balance).

When video went digital, the range of brightness was divided into 256 digital steps, from zero to 255. Our fundamental goal when exposing a scene is to make sure that what's bright stays bright, what's dark remains dark, and that everything in between makes good use of the luminance bandwidth, those 256 levels the system provides. The key to success with all of this is in the standards. After that, tweaking it is a matter of taste.

-

If the video was recorded from TV, 90% of color correction issues are solved with setting levels correctly. The signal looked good at the TV station. Level correction is step one.

Analog NTSC is broadcast with black at 7.5 IRE and nominal white at 100 IRE, with excursions up to 120 IRE. PAL uses 0 IRE for black.

Capture devices vary in how badly they screw up levels. Older, cruder cards set black to 0 and average white to 255, thus chopping off the 101-120 highlight excursions. Back in the mid '80s, broadcasters quickly determined that 8-bit luminance needed overshoot ranges at black and white to stop all sorts of video artifacts. CCIR-601 defined digital component Y,Cb,Cr video with black set to level 16 and white to 235. The range from 236-255 was reserved for overshoots. YCbCr at these levels continued into all major video standards, including DVD MPEG-2, MPEG-4 and digital broadcasting.

Meanwhile computer dudes grew up on 0-255 RGB in 640x480 frame buffers and weren't the wiser. The collision in values happened in the 1998 to 2005 period and the broadcasters won the war. In the 8 bit digital video world black is 16 and nominal white is 235. End of story.
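As a rough illustration of what "setting levels" means numerically, the sketch below remaps luma linearly so that the black and white you measure on the scope land on 16 and 235. It is only an assumption about how a basic levels filter behaves; the function name `set_levels` and the example input values are hypothetical, and real filters typically add gamma and per-channel controls.

```python
def set_levels(y, in_black, in_white, out_black=16, out_white=235):
    """Linearly remap one 8-bit luma value.

    in_black / in_white are the levels measured in the capture;
    out_black / out_white default to the 16-235 levels discussed above.
    """
    scale = (out_white - out_black) / (in_white - in_black)
    mapped = out_black + (y - in_black) * scale
    # Clamp only to the 8-bit container, so overshoots above 235 can survive.
    return max(0, min(255, round(mapped)))


# Hypothetical capture whose black sits too high, at 32 instead of 16.
print(set_levels(32, in_black=32, in_white=235))   # black moves down to 16
print(set_levels(235, in_black=32, in_white=235))  # nominal white stays at 235
```

Choosing `in_black` from the darkest real picture content, rather than pulling it past that point, is what keeps the waveform from dipping below the black line.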

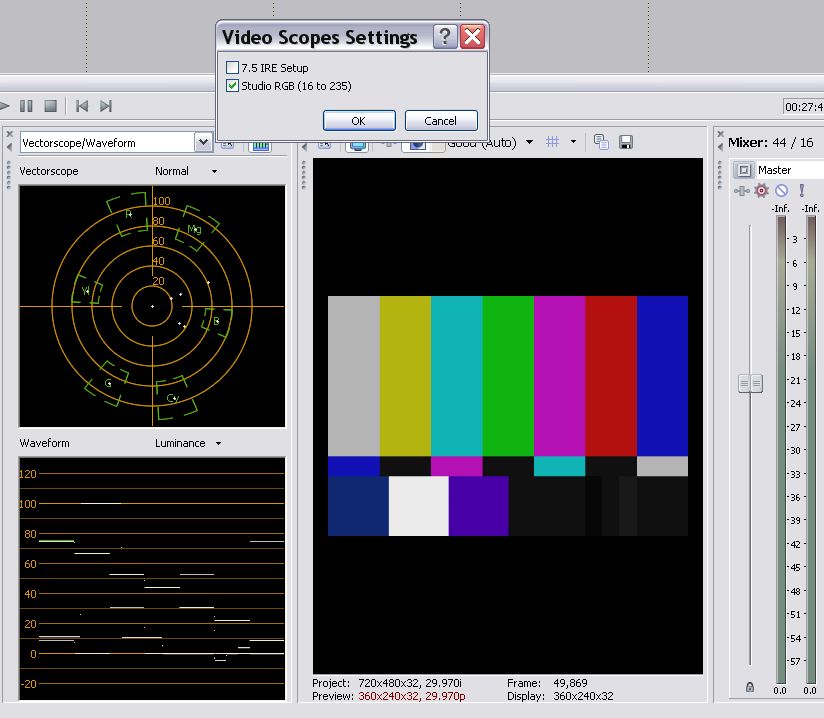

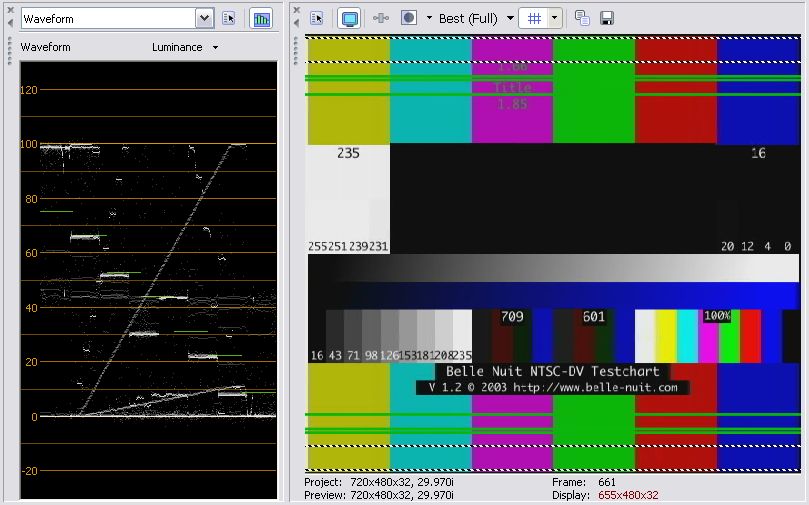

When you work in ITU Rec-601 space such as DV or DVD MPEG-2, an analog NTSC (or PAL) capture should look like this in the 8-bit digital space. This is Vegas: 0% is digital 16 and 100% is digital 235.
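For anyone who wants to move between the scope's percent scale and 8-bit code values, the arithmetic is a straight line through the two points given above (0% = 16, 100% = 235). The helper names below are made up for illustration.

```python
def percent_to_code(percent):
    """Scope percent -> 8-bit luma code, with 0% = 16 and 100% = 235."""
    return 16 + 219 * percent / 100


def code_to_percent(code):
    """8-bit luma code -> scope percent on the same scale."""
    return (code - 16) * 100 / 219


print(percent_to_code(0), percent_to_code(100))
print(round(code_to_percent(255), 1))  # how far above 100% the 8-bit range reaches
print(round(code_to_percent(0), 1))    # how far below 0% it reaches
```

On this scale code 255 works out to about 109% and code 0 to about -7%, which is the overshoot and undershoot room available in 8 bits.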

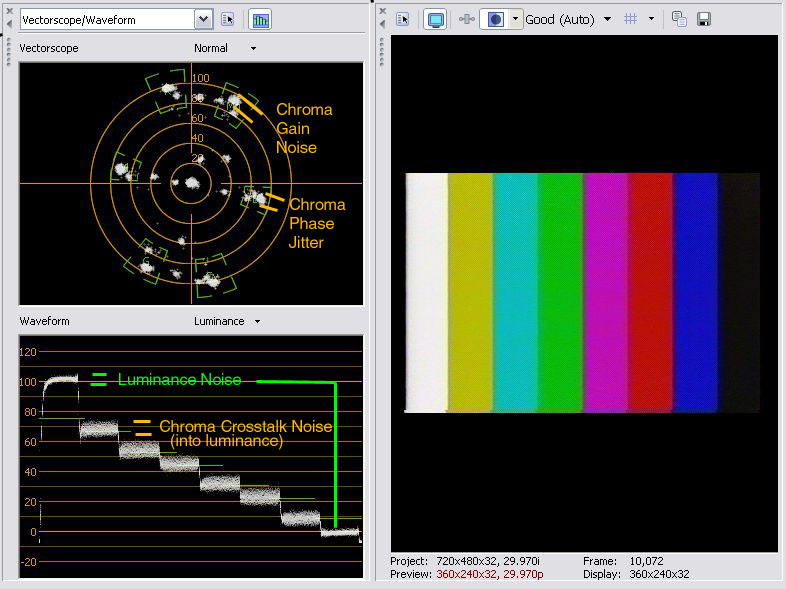

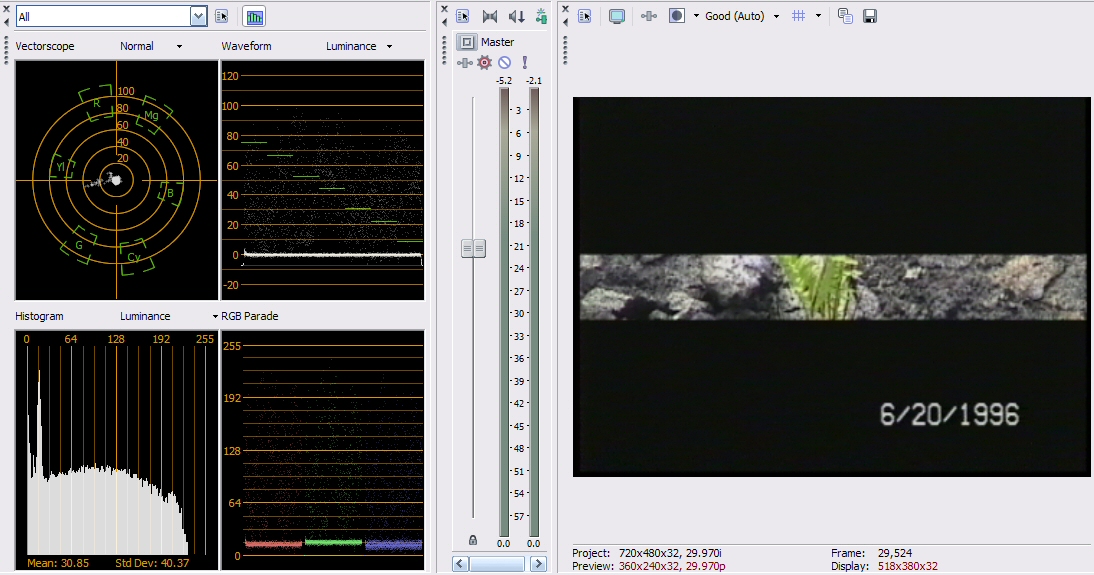

VHS or analog TV captures should look something like this if capture levels are correct.

Watch this JVC tutorial on black levels for more. Once luminance levels are set, then advance to color correction if needed.

http://pro.jvc.com/pro/attributes/prodv/clips/blacksetup/JVC_DEMO.swf

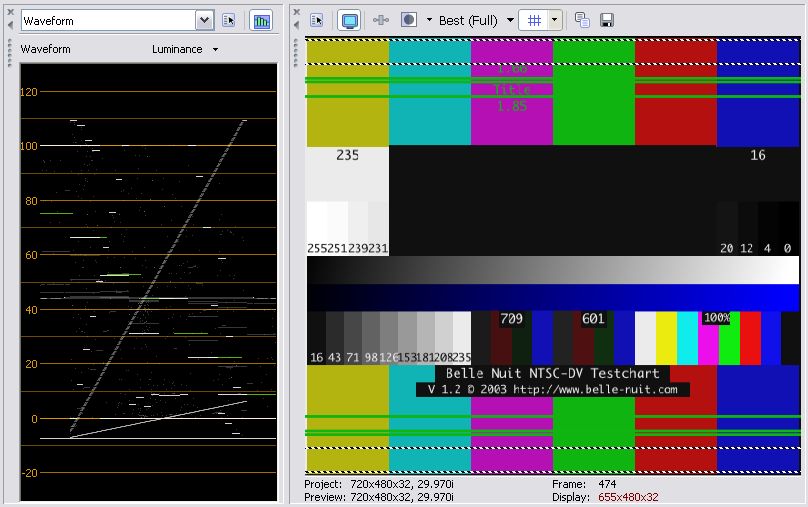

The BelleNuit test pattern is good to use for system calibration and illustrates what is supposed to be happening in the black and white areas.

If you capture to 0-255 RGB, the white and black detail are clipped. Note the clipping of whites. An example would be sky going to white, losing cloud detail.
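A small sketch of why that clipping happens, assuming the capture stretches 16-235 out to 0-255 (one common way a 0-255 RGB capture is produced, though not necessarily what every card does). The function name is hypothetical.

```python
def studio_to_full(y):
    """Stretch 16-235 luma to 0-255 and clamp to the 8-bit range."""
    full = (y - 16) * 255 / 219
    return max(0, min(255, round(full)))


# Nominal white and two brighter highlight values (e.g. cloud detail).
highlights = [235, 240, 250]
print([studio_to_full(y) for y in highlights])  # all collapse to the same value

# Black and a below-black undershoot.
shadows = [16, 8]
print([studio_to_full(y) for y in shadows])     # both collapse to the same value
```

Once distinct input levels collapse to the same output, no later levels or gamma adjustment can separate them again, which is why the capture levels need to be right first.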
